46 Deep Learning tips for Classification and Regression

- Datasets:

spiral.csv,grid.csv,covtype.full.csv - Algorithms:

- Deep Learning with

h2o

- Deep Learning with

- Techniques:

- Decision Boundaries

- Hyper-parameter Tuning with Grid Search

- Checkpointing

- Cross-Validation

46.1 Introduction

Source: http://docs.h2o.ai/h2o-tutorials/latest-stable/tutorials/deeplearning/index.html

Repo: https://github.com/h2oai/h2o-tutorials

This tutorial shows how a H2O Deep Learning model can be used to do supervised classification and regression. A great tutorial about Deep Learning is given by Quoc Le here and here. This tutorial covers usage of H2O from R. A python version of this tutorial will be available as well in a separate document. This file is available in plain R, R markdown and regular markdown formats, and the plots are available as PDF files. All documents are available on Github.

If run from plain R, execute R in the directory of this script. If run from RStudio, be sure to setwd() to the location of this script.h2o.init() starts H2O in R’s current working directory. h2o.importFile() looks for files from the perspective of where H2O was started.

More examples and explanations can be found in our H2O Deep Learning booklet and on our H2O Github Repository. The PDF slide deck can be found on Github.

46.2 H2O R Package

Load the H2O R package:

Source: http://docs.h2o.ai/h2o-tutorials/latest-stable/tutorials/deeplearning/index.html

## R installation instructions are at http://h2o.ai/download

library(h2o)

#>

#> ----------------------------------------------------------------------

#>

#> Your next step is to start H2O:

#> > h2o.init()

#>

#> For H2O package documentation, ask for help:

#> > ??h2o

#>

#> After starting H2O, you can use the Web UI at http://localhost:54321

#> For more information visit http://docs.h2o.ai

#>

#> ----------------------------------------------------------------------

#>

#> Attaching package: 'h2o'

#> The following objects are masked from 'package:stats':

#>

#> cor, sd, var

#> The following objects are masked from 'package:base':

#>

#> &&, %*%, %in%, ||, apply, as.factor, as.numeric, colnames,

#> colnames<-, ifelse, is.character, is.factor, is.numeric, log,

#> log10, log1p, log2, round, signif, trunc46.3 Start H2O

Start up a 1-node H2O server on your local machine, and allow it to use all CPU cores and up to 2GB of memory:

h2o.init(nthreads=-1, max_mem_size="2G")

#> Connection successful!

#>

#> R is connected to the H2O cluster:

#> H2O cluster uptime: 38 minutes 44 seconds

#> H2O cluster timezone: Etc/UTC

#> H2O data parsing timezone: UTC

#> H2O cluster version: 3.30.0.1

#> H2O cluster version age: 7 months and 16 days !!!

#> H2O cluster name: H2O_started_from_R_root_mwl453

#> H2O cluster total nodes: 1

#> H2O cluster total memory: 7.07 GB

#> H2O cluster total cores: 8

#> H2O cluster allowed cores: 8

#> H2O cluster healthy: TRUE

#> H2O Connection ip: localhost

#> H2O Connection port: 54321

#> H2O Connection proxy: NA

#> H2O Internal Security: FALSE

#> H2O API Extensions: Amazon S3, XGBoost, Algos, AutoML, Core V3, TargetEncoder, Core V4

#> R Version: R version 3.6.3 (2020-02-29)

#> Warning in h2o.clusterInfo():

#> Your H2O cluster version is too old (7 months and 16 days)!

#> Please download and install the latest version from http://h2o.ai/download/

h2o.removeAll() ## clean slate - just in case the cluster was already runningThe h2o.deeplearning function fits H2O’s Deep Learning models from within R. We can run the example from the man page using the example function, or run a longer demonstration from the h2o package using the demo function::

args(h2o.deeplearning)

#> function (x, y, training_frame, model_id = NULL, validation_frame = NULL,

#> nfolds = 0, keep_cross_validation_models = TRUE, keep_cross_validation_predictions = FALSE,

#> keep_cross_validation_fold_assignment = FALSE, fold_assignment = c("AUTO",

#> "Random", "Modulo", "Stratified"), fold_column = NULL,

#> ignore_const_cols = TRUE, score_each_iteration = FALSE, weights_column = NULL,

#> offset_column = NULL, balance_classes = FALSE, class_sampling_factors = NULL,

#> max_after_balance_size = 5, max_hit_ratio_k = 0, checkpoint = NULL,

#> pretrained_autoencoder = NULL, overwrite_with_best_model = TRUE,

#> use_all_factor_levels = TRUE, standardize = TRUE, activation = c("Tanh",

#> "TanhWithDropout", "Rectifier", "RectifierWithDropout",

#> "Maxout", "MaxoutWithDropout"), hidden = c(200, 200),

#> epochs = 10, train_samples_per_iteration = -2, target_ratio_comm_to_comp = 0.05,

#> seed = -1, adaptive_rate = TRUE, rho = 0.99, epsilon = 1e-08,

#> rate = 0.005, rate_annealing = 1e-06, rate_decay = 1, momentum_start = 0,

#> momentum_ramp = 1e+06, momentum_stable = 0, nesterov_accelerated_gradient = TRUE,

#> input_dropout_ratio = 0, hidden_dropout_ratios = NULL, l1 = 0,

#> l2 = 0, max_w2 = 3.4028235e+38, initial_weight_distribution = c("UniformAdaptive",

#> "Uniform", "Normal"), initial_weight_scale = 1, initial_weights = NULL,

#> initial_biases = NULL, loss = c("Automatic", "CrossEntropy",

#> "Quadratic", "Huber", "Absolute", "Quantile"), distribution = c("AUTO",

#> "bernoulli", "multinomial", "gaussian", "poisson", "gamma",

#> "tweedie", "laplace", "quantile", "huber"), quantile_alpha = 0.5,

#> tweedie_power = 1.5, huber_alpha = 0.9, score_interval = 5,

#> score_training_samples = 10000, score_validation_samples = 0,

#> score_duty_cycle = 0.1, classification_stop = 0, regression_stop = 1e-06,

#> stopping_rounds = 5, stopping_metric = c("AUTO", "deviance",

#> "logloss", "MSE", "RMSE", "MAE", "RMSLE", "AUC", "AUCPR",

#> "lift_top_group", "misclassification", "mean_per_class_error",

#> "custom", "custom_increasing"), stopping_tolerance = 0,

#> max_runtime_secs = 0, score_validation_sampling = c("Uniform",

#> "Stratified"), diagnostics = TRUE, fast_mode = TRUE,

#> force_load_balance = TRUE, variable_importances = TRUE, replicate_training_data = TRUE,

#> single_node_mode = FALSE, shuffle_training_data = FALSE,

#> missing_values_handling = c("MeanImputation", "Skip"), quiet_mode = FALSE,

#> autoencoder = FALSE, sparse = FALSE, col_major = FALSE, average_activation = 0,

#> sparsity_beta = 0, max_categorical_features = 2147483647,

#> reproducible = FALSE, export_weights_and_biases = FALSE,

#> mini_batch_size = 1, categorical_encoding = c("AUTO", "Enum",

#> "OneHotInternal", "OneHotExplicit", "Binary", "Eigen",

#> "LabelEncoder", "SortByResponse", "EnumLimited"), elastic_averaging = FALSE,

#> elastic_averaging_moving_rate = 0.9, elastic_averaging_regularization = 0.001,

#> export_checkpoints_dir = NULL, verbose = FALSE)

#> NULL

if (interactive()) help(h2o.deeplearning)

example(h2o.deeplearning)

#>

#> h2.dpl> ## Not run:

#> h2.dpl> ##D library(h2o)

#> h2.dpl> ##D h2o.init()

#> h2.dpl> ##D iris_hf <- as.h2o(iris)

#> h2.dpl> ##D iris_dl <- h2o.deeplearning(x = 1:4, y = 5, training_frame = iris_hf, seed=123456)

#> h2.dpl> ##D

#> h2.dpl> ##D # now make a prediction

#> h2.dpl> ##D predictions <- h2o.predict(iris_dl, iris_hf)

#> h2.dpl> ## End(Not run)

#> h2.dpl>

#> h2.dpl>

#> h2.dpl>

if (interactive()) demo(h2o.deeplearning) #requires user interactionWhile H2O Deep Learning has many parameters, it was designed to be just as easy to use as the other supervised training methods in H2O. Early stopping, automatic data standardization and handling of categorical variables and missing values and adaptive learning rates (per weight) reduce the amount of parameters the user has to specify. Often, it’s just the number and sizes of hidden layers, the number of epochs and the activation function and maybe some regularization techniques.

46.4 Let’s have some fun first: Decision Boundaries

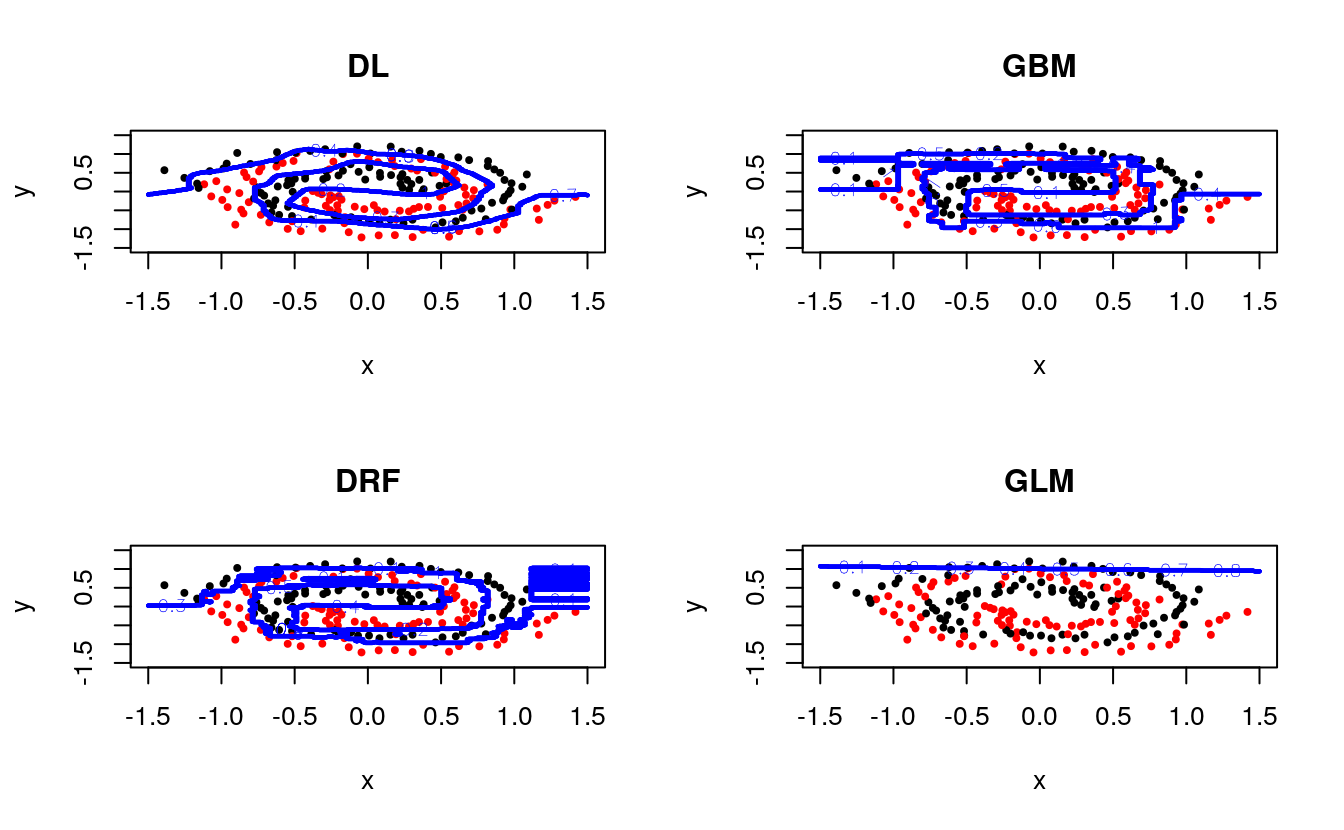

We start with a small dataset representing red and black dots on a plane, arranged in the shape of two nested spirals. Then we task H2O’s machine learning methods to separate the red and black dots, i.e., recognize each spiral as such by assigning each point in the plane to one of the two spirals.

We visualize the nature of H2O Deep Learning (DL), H2O’s tree methods (GBM/DRF) and H2O’s generalized linear modeling (GLM) by plotting the decision boundary between the red and black spirals:

# setwd("~/h2o-tutorials/tutorials/deeplearning") ##For RStudio

spiral <- h2o.importFile(path = normalizePath(file.path(data_raw_dir, "spiral.csv")))

#>

|

| | 0%

|

|======================================================================| 100%

grid <- h2o.importFile(path = normalizePath(file.path(data_raw_dir, "grid.csv")))

#>

|

| | 0%

|

|=============== | 22%

|

|======================================================================| 100%

# Define helper to plot contours

plotC <- function(name, model, data=spiral, g=grid) {

data <- as.data.frame(data) #get data from into R

pred <- as.data.frame(h2o.predict(model, g))

n=0.5*(sqrt(nrow(g))-1); d <- 1.5; h <- d*(-n:n)/n

plot(data[,-3],pch=19,col=data[,3],cex=0.5,

xlim=c(-d,d),ylim=c(-d,d),main=name)

contour(h,h,z=array(ifelse(pred[,1]=="Red",0,1),

dim=c(2*n+1,2*n+1)),col="blue",lwd=2,add=T)

}We build a few different models:

#dev.new(noRStudioGD=FALSE) #direct plotting output to a new window

par(mfrow=c(2,2)) #set up the canvas for 2x2 plots

plotC( "DL", h2o.deeplearning(1:2,3,spiral,epochs=1e3))

plotC("GBM", h2o.gbm (1:2,3,spiral))

plotC("DRF", h2o.randomForest(1:2,3,spiral))

plotC("GLM", h2o.glm (1:2,3,spiral,family="binomial")) Let’s investigate some more Deep Learning models. First, we explore the evolution over training time (number of passes over the data), and we use checkpointing to continue training the same model:

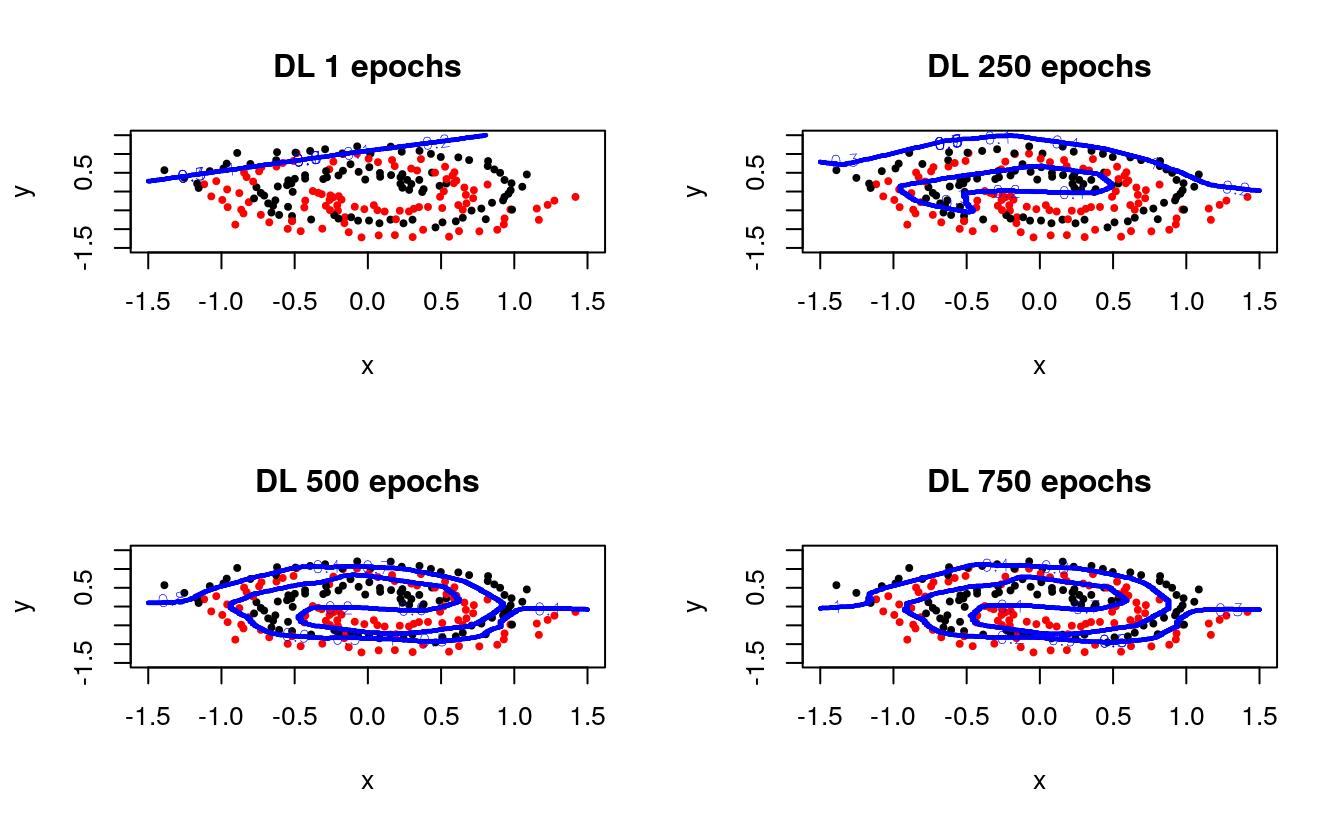

Let’s investigate some more Deep Learning models. First, we explore the evolution over training time (number of passes over the data), and we use checkpointing to continue training the same model:

#dev.new(noRStudioGD=FALSE) #direct plotting output to a new window

par(mfrow=c(2,2)) #set up the canvas for 2x2 plots

ep <- c(1,250,500,750)

plotC(paste0("DL ",ep[1]," epochs"),

h2o.deeplearning(1:2,3,spiral,epochs=ep[1],

model_id="dl_1"))

plotC(paste0("DL ",ep[2]," epochs"),

h2o.deeplearning(1:2,3,spiral,epochs=ep[2],

checkpoint="dl_1",model_id="dl_2"))

plotC(paste0("DL ",ep[3]," epochs"),

h2o.deeplearning(1:2,3,spiral,epochs=ep[3],

checkpoint="dl_2",model_id="dl_3"))

plotC(paste0("DL ",ep[4]," epochs"),

h2o.deeplearning(1:2,3,spiral,epochs=ep[4],

checkpoint="dl_3",model_id="dl_4"))

You can see how the network learns the structure of the spirals with enough training time. We explore different network architectures next:

#dev.new(noRStudioGD=FALSE) #direct plotting output to a new window

par(mfrow=c(2,2)) #set up the canvas for 2x2 plots

for (hidden in list(c(11,13,17,19),c(42,42,42),c(200,200),c(1000))) {

plotC(paste0("DL hidden=",paste0(hidden, collapse="x")),

h2o.deeplearning(1:2,3 ,spiral, hidden=hidden, epochs=500))

}

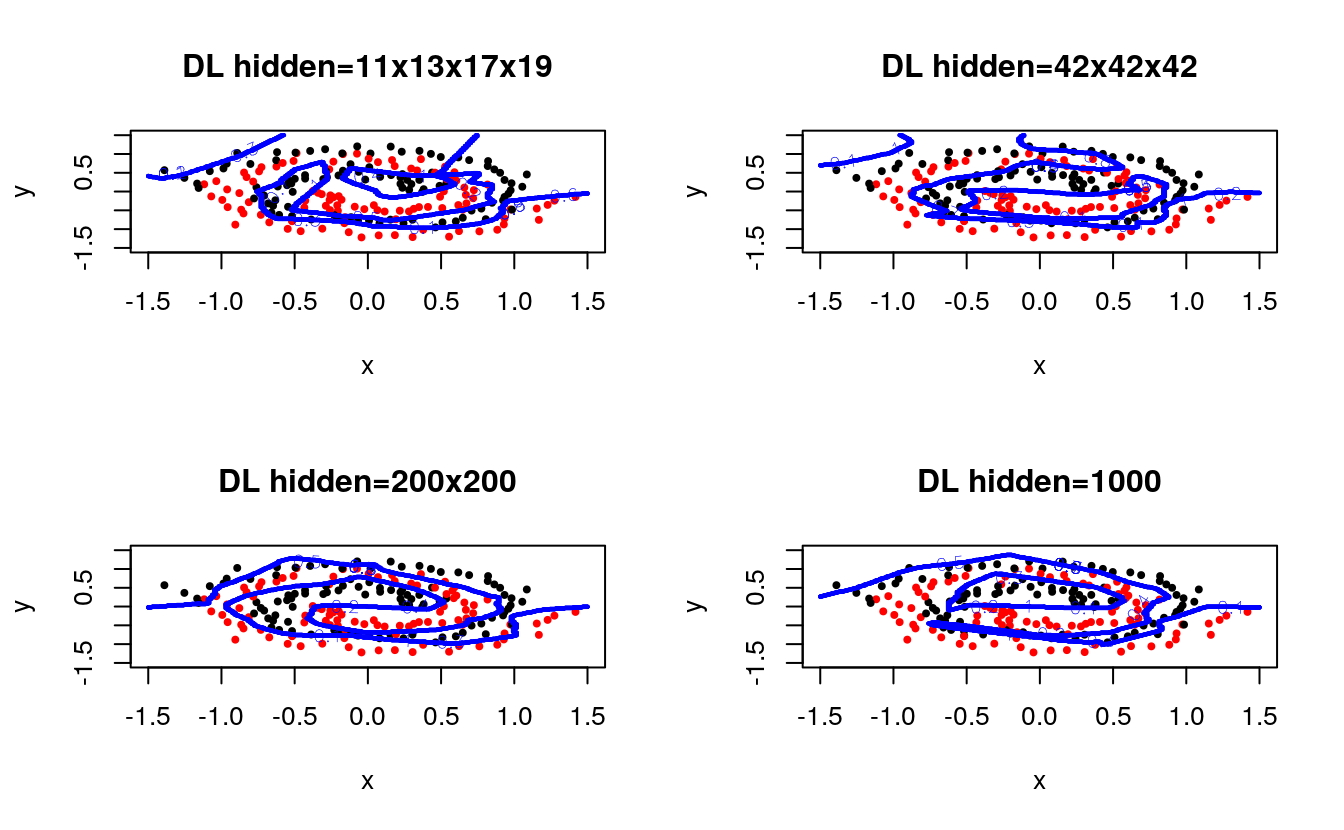

It is clear that different configurations can achieve similar performance, and that tuning will be required for optimal performance. Next, we compare between different activation functions, including one with 50% dropout regularization in the hidden layers:

#dev.new(noRStudioGD=FALSE) #direct plotting output to a new window

par(mfrow=c(2,2)) #set up the canvas for 2x2 plots

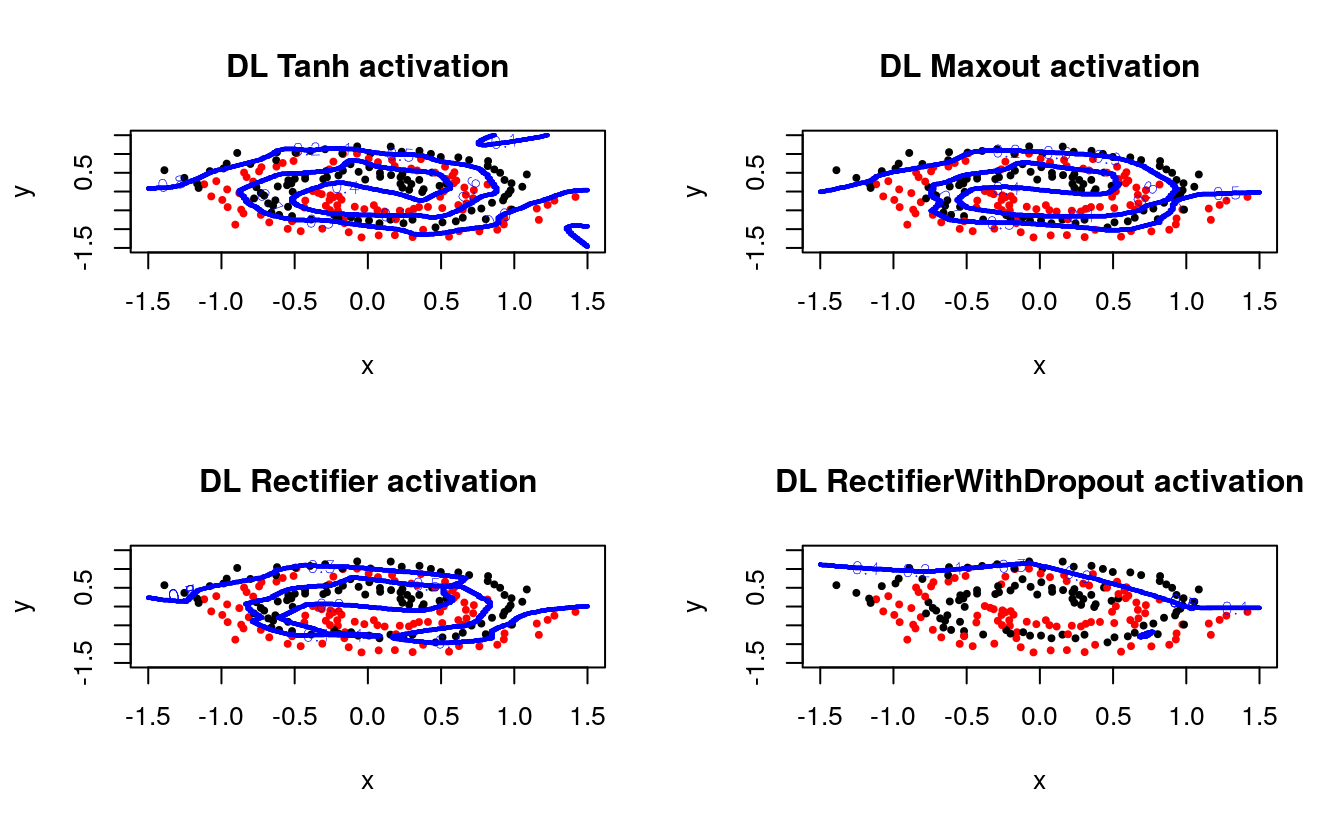

for (act in c("Tanh", "Maxout", "Rectifier", "RectifierWithDropout")) {

plotC(paste0("DL ",act," activation"),

h2o.deeplearning(1:2,3, spiral,

activation = act,

hidden = c(100,100),

epochs = 1000))

}

Clearly, the dropout rate was too high or the number of epochs was too low for the last configuration, which often ends up performing the best on larger datasets where generalization is important.

More information about the parameters can be found in the H2O Deep Learning booklet.

46.5 Cover Type Dataset

We important the full cover type dataset (581k rows, 13 columns, 10 numerical, 3 categorical). We also split the data 3 ways: 60% for training, 20% for validation (hyper parameter tuning) and 20% for final testing.

df <- h2o.importFile(path = normalizePath(file.path(data_raw_dir, "covtype.full.csv")))

#>

|

| | 0%

|

|======================================================================| 100%

dim(df)

#> [1] 581012 13

df

#> Elevation Aspect Slope Horizontal_Distance_To_Hydrology

#> 1 3066 124 5 0

#> 2 3136 32 20 450

#> 3 2655 28 14 42

#> 4 3191 45 19 323

#> 5 3217 80 13 30

#> 6 3119 293 13 30

#> Vertical_Distance_To_Hydrology Horizontal_Distance_To_Roadways Hillshade_9am

#> 1 0 1533 229

#> 2 -38 1290 211

#> 3 8 1890 214

#> 4 88 3932 221

#> 5 1 3901 237

#> 6 10 4810 182

#> Hillshade_Noon Hillshade_3pm Horizontal_Distance_To_Fire_Points

#> 1 236 141 459

#> 2 193 111 1112

#> 3 209 128 1001

#> 4 195 100 2919

#> 5 217 109 2859

#> 6 237 194 1200

#> Wilderness_Area Soil_Type Cover_Type

#> 1 area_0 type_22 class_1

#> 2 area_0 type_28 class_1

#> 3 area_2 type_9 class_2

#> 4 area_0 type_39 class_2

#> 5 area_0 type_22 class_7

#> 6 area_0 type_21 class_1

#>

#> [581012 rows x 13 columns]

splits <- h2o.splitFrame(df, c(0.6, 0.2), seed=1234)

train <- h2o.assign(splits[[1]], "train.hex") # 60%

valid <- h2o.assign(splits[[2]], "valid.hex") # 20%

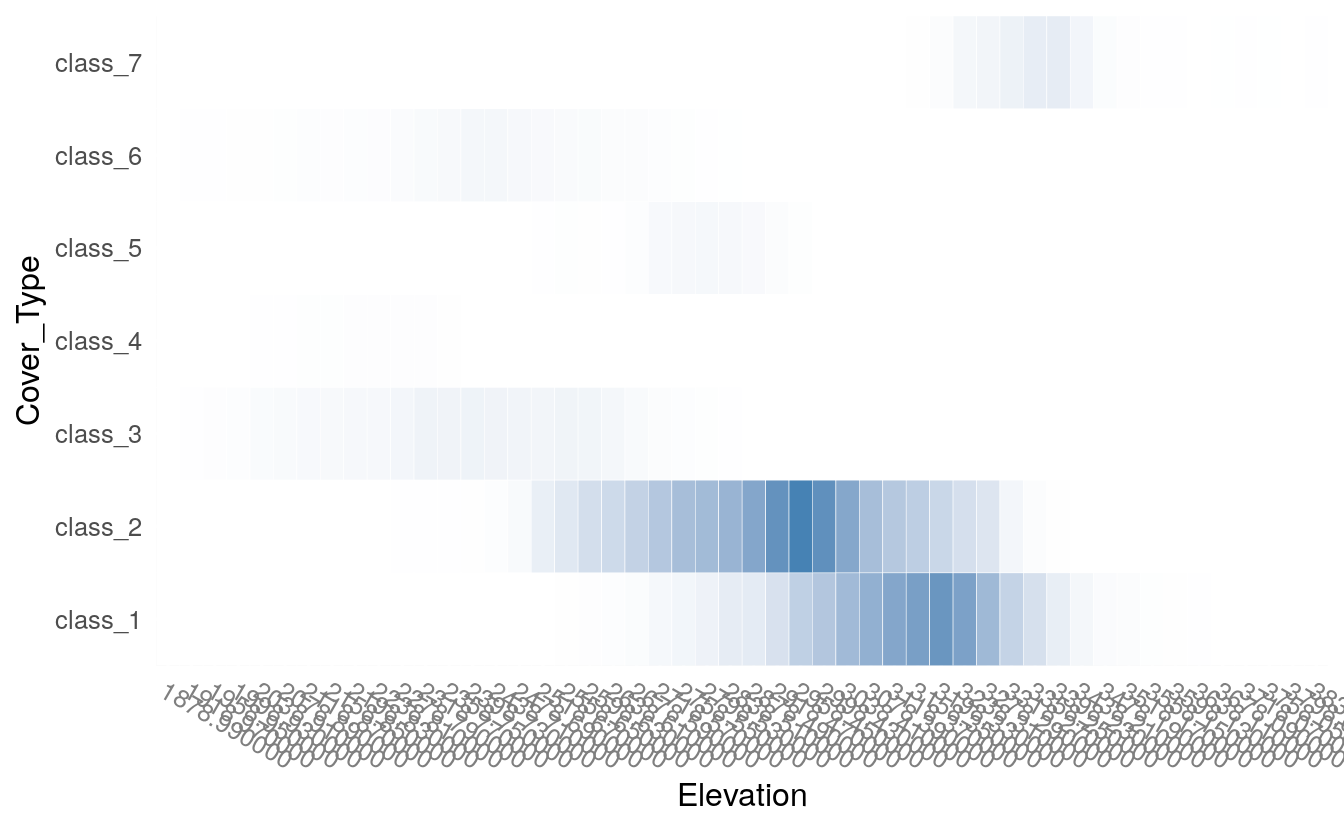

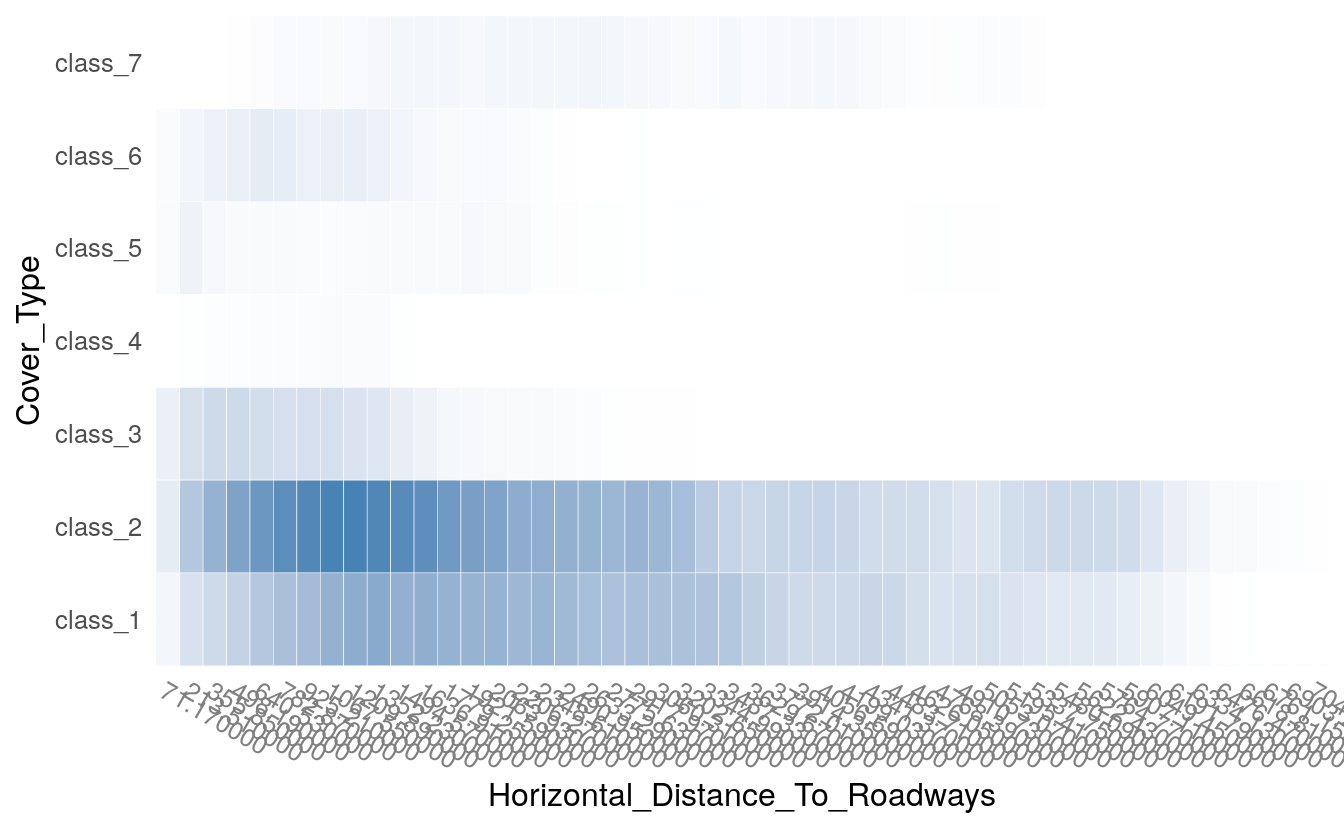

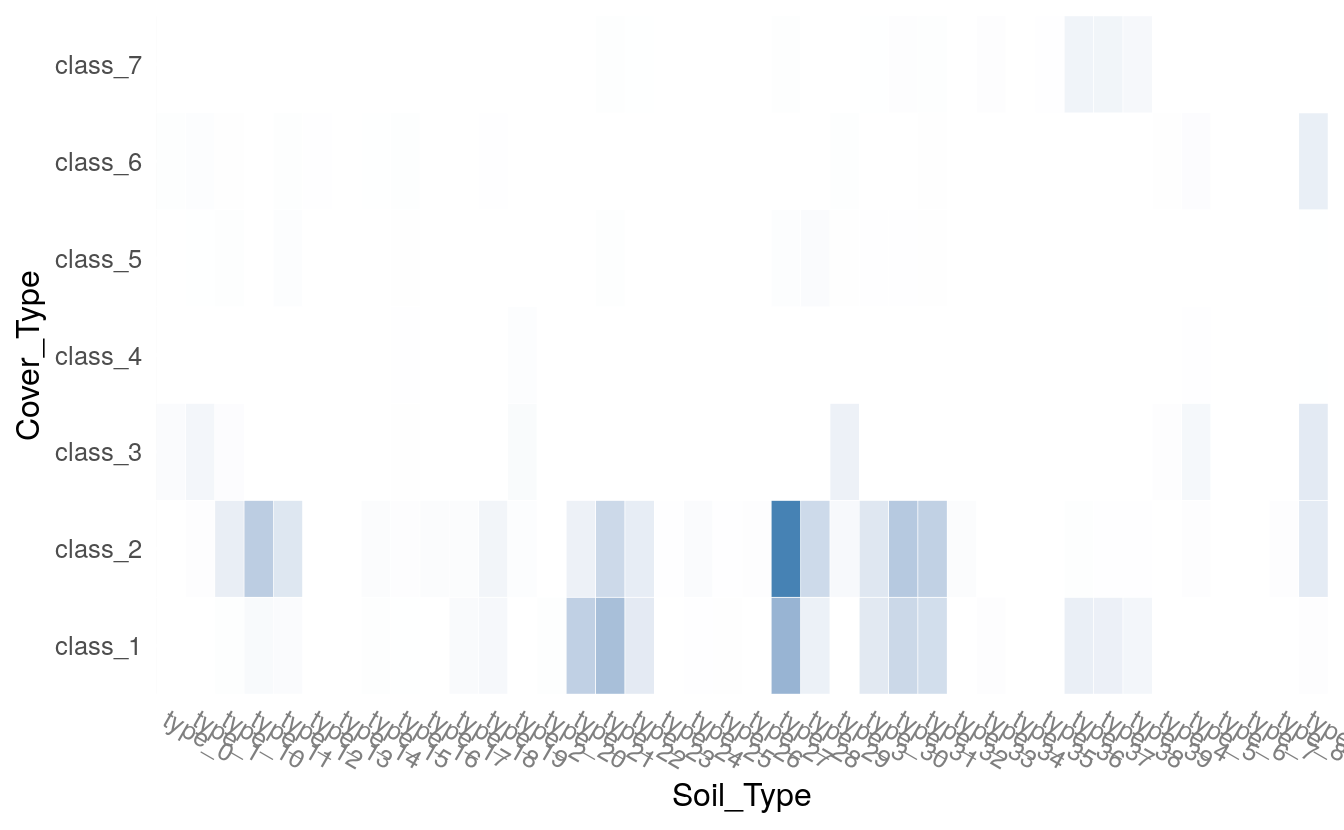

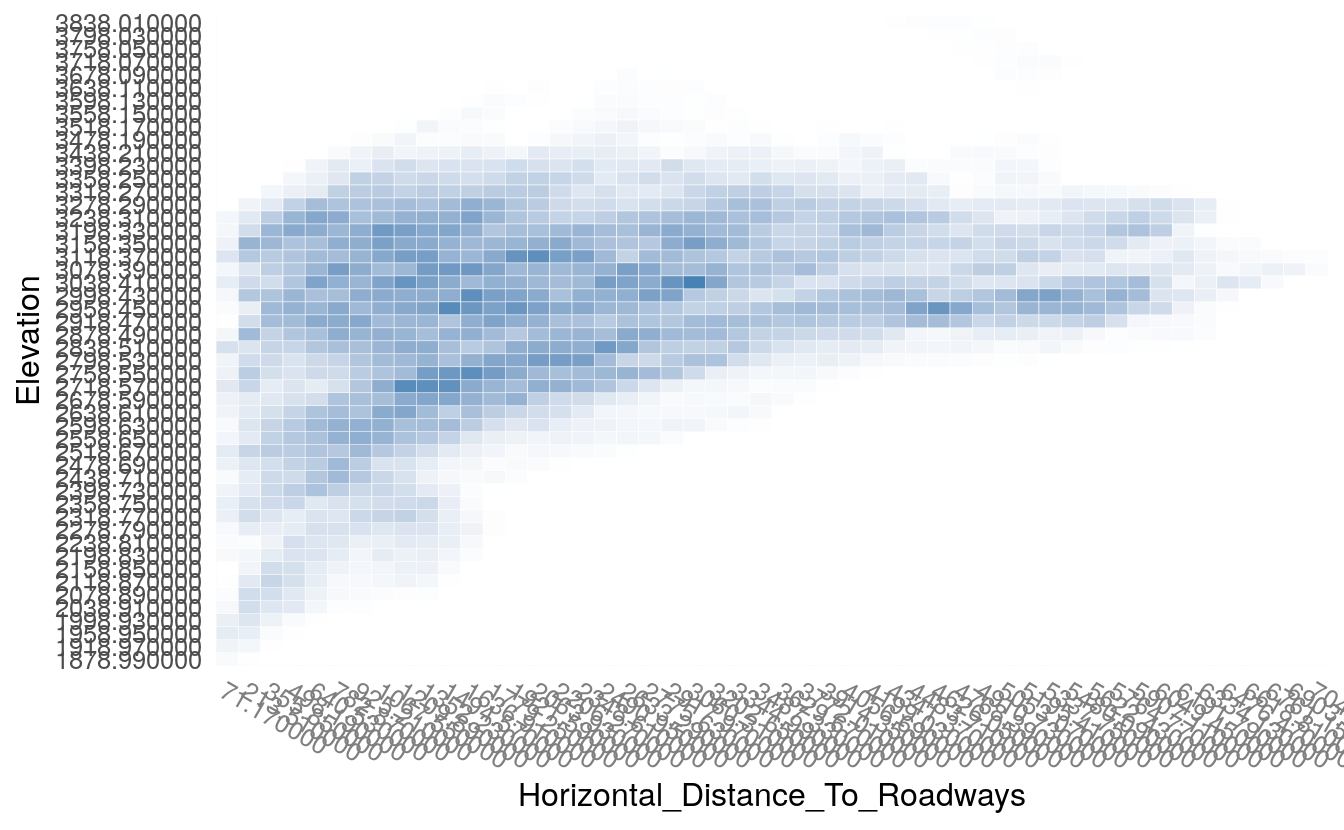

test <- h2o.assign(splits[[3]], "test.hex") # 20%Here’s a scalable way to do scatter plots via binning (works for categorical and numeric columns) to get more familiar with the dataset.

#dev.new(noRStudioGD=FALSE) #direct plotting output to a new window

par(mfrow=c(1,1)) # reset canvas

plot(h2o.tabulate(df, "Elevation", "Cover_Type"))

plot(h2o.tabulate(df, "Horizontal_Distance_To_Roadways", "Cover_Type"))

plot(h2o.tabulate(df, "Soil_Type", "Cover_Type"))

plot(h2o.tabulate(df, "Horizontal_Distance_To_Roadways", "Elevation" ))

46.5.1 First Run of H2O Deep Learning

Let’s run our first Deep Learning model on the covtype dataset. We want to predict the Cover_Type column, a categorical feature with 7 levels, and the Deep Learning model will be tasked to perform (multi-class) classification. It uses the other 12 predictors of the dataset, of which 10 are numerical, and 2 are categorical with a total of 44 levels. We can expect the Deep Learning model to have 56 input neurons (after automatic one-hot encoding).

response <- "Cover_Type"

predictors <- setdiff(names(df), response)

predictors

#> [1] "Elevation" "Aspect"

#> [3] "Slope" "Horizontal_Distance_To_Hydrology"

#> [5] "Vertical_Distance_To_Hydrology" "Horizontal_Distance_To_Roadways"

#> [7] "Hillshade_9am" "Hillshade_Noon"

#> [9] "Hillshade_3pm" "Horizontal_Distance_To_Fire_Points"

#> [11] "Wilderness_Area" "Soil_Type"

train_df <- as.data.frame(train)

str(train_df)

#> 'data.frame': 349015 obs. of 13 variables:

#> $ Elevation : int 3136 3217 3119 2679 3261 2885 3227 2843 2853 2883 ...

#> $ Aspect : int 32 80 293 48 322 26 32 12 124 177 ...

#> $ Slope : int 20 13 13 7 13 9 6 18 12 9 ...

#> $ Horizontal_Distance_To_Hydrology : int 450 30 30 150 30 192 108 335 30 426 ...

#> $ Vertical_Distance_To_Hydrology : int -38 1 10 24 5 38 13 50 -5 126 ...

#> $ Horizontal_Distance_To_Roadways : int 1290 3901 4810 1588 5701 3271 5542 2642 1485 2139 ...

#> $ Hillshade_9am : int 211 237 182 223 186 216 219 199 240 225 ...

#> $ Hillshade_Noon : int 193 217 237 224 226 220 227 201 231 246 ...

#> $ Hillshade_3pm : int 111 109 194 136 180 140 145 135 119 153 ...

#> $ Horizontal_Distance_To_Fire_Points: int 1112 2859 1200 6265 769 2643 765 1719 2497 713 ...

#> $ Wilderness_Area : Factor w/ 4 levels "area_0","area_1",..: 1 1 1 1 1 1 1 3 3 3 ...

#> $ Soil_Type : Factor w/ 40 levels "type_0","type_1",..: 22 16 15 4 15 22 15 27 12 25 ...

#> $ Cover_Type : Factor w/ 7 levels "class_1","class_2",..: 1 7 1 2 1 2 1 2 1 2 ...

valid_df <- as.data.frame(valid)

str(valid_df)

#> 'data.frame': 116018 obs. of 13 variables:

#> $ Elevation : int 3066 2655 2902 2994 2697 2990 3237 2884 2972 2696 ...

#> $ Aspect : int 124 28 304 61 93 59 135 71 100 169 ...

#> $ Slope : int 5 14 22 9 9 12 14 9 4 10 ...

#> $ Horizontal_Distance_To_Hydrology : int 0 42 511 391 306 108 240 459 175 323 ...

#> $ Vertical_Distance_To_Hydrology : int 0 8 18 57 -2 10 -11 141 13 149 ...

#> $ Horizontal_Distance_To_Roadways : int 1533 1890 1273 4286 553 2190 1189 1214 5031 2452 ...

#> $ Hillshade_9am : int 229 214 155 227 234 229 241 231 227 228 ...

#> $ Hillshade_Noon : int 236 209 223 222 227 215 233 222 234 244 ...

#> $ Hillshade_3pm : int 141 128 206 128 125 117 118 124 142 148 ...

#> $ Horizontal_Distance_To_Fire_Points: int 459 1001 1347 1928 1716 1048 2748 1355 6198 1044 ...

#> $ Wilderness_Area : Factor w/ 4 levels "area_0","area_1",..: 1 3 3 1 1 3 1 3 1 3 ...

#> $ Soil_Type : Factor w/ 39 levels "type_0","type_1",..: 15 39 25 4 4 25 14 25 11 23 ...

#> $ Cover_Type : Factor w/ 7 levels "class_1","class_2",..: 1 2 2 2 2 2 1 2 1 3 ...To keep it fast, we only run for one epoch (one pass over the training data).

m1 <- h2o.deeplearning(

model_id="dl_model_first",

training_frame = train,

validation_frame = valid, ## validation dataset: used for scoring and early stopping

x = predictors,

y = response,

#activation="Rectifier", ## default

#hidden=c(200,200), ## default: 2 hidden layers with 200 neurons each

epochs = 1,

variable_importances=T ## not enabled by default

)

#>

|

| | 0%

|

|======= | 10%

|

|============== | 20%

|

|===================== | 30%

|

|============================ | 40%

|

|=================================== | 50%

|

|========================================== | 60%

|

|================================================= | 70%

|

|======================================================== | 80%

|

|=============================================================== | 90%

|

|======================================================================| 100%

summary(m1)

#> Model Details:

#> ==============

#>

#> H2OMultinomialModel: deeplearning

#> Model Key: dl_model_first

#> Status of Neuron Layers: predicting Cover_Type, 7-class classification, multinomial distribution, CrossEntropy loss, 53,007 weights/biases, 633.2 KB, 383,519 training samples, mini-batch size 1

#> layer units type dropout l1 l2 mean_rate rate_rms momentum

#> 1 1 56 Input 0.00 % NA NA NA NA NA

#> 2 2 200 Rectifier 0.00 % 0.000000 0.000000 0.049043 0.209607 0.000000

#> 3 3 200 Rectifier 0.00 % 0.000000 0.000000 0.010094 0.009352 0.000000

#> 4 4 7 Softmax NA 0.000000 0.000000 0.123164 0.300241 0.000000

#> mean_weight weight_rms mean_bias bias_rms

#> 1 NA NA NA NA

#> 2 -0.010410 0.118736 0.006004 0.115481

#> 3 -0.024505 0.118881 0.696468 0.402678

#> 4 -0.401315 0.506471 -0.529662 0.127334

#>

#> H2OMultinomialMetrics: deeplearning

#> ** Reported on training data. **

#> ** Metrics reported on temporary training frame with 9917 samples **

#>

#> Training Set Metrics:

#> =====================

#>

#> MSE: (Extract with `h2o.mse`) 0.126

#> RMSE: (Extract with `h2o.rmse`) 0.355

#> Logloss: (Extract with `h2o.logloss`) 0.406

#> Mean Per-Class Error: 0.338

#> Confusion Matrix: Extract with `h2o.confusionMatrix(<model>,train = TRUE)`)

#> =========================================================================

#> Confusion Matrix: Row labels: Actual class; Column labels: Predicted class

#> class_1 class_2 class_3 class_4 class_5 class_6 class_7 Error

#> class_1 3067 539 6 0 2 1 41 0.1611

#> class_2 580 4069 49 0 14 50 10 0.1473

#> class_3 0 28 502 1 1 68 0 0.1633

#> class_4 0 0 31 15 0 2 0 0.6875

#> class_5 6 76 8 0 66 0 0 0.5769

#> class_6 3 33 95 0 0 155 0 0.4580

#> class_7 69 0 0 0 0 0 330 0.1729

#> Totals 3725 4745 691 16 83 276 381 0.1727

#> Rate

#> class_1 = 589 / 3,656

#> class_2 = 703 / 4,772

#> class_3 = 98 / 600

#> class_4 = 33 / 48

#> class_5 = 90 / 156

#> class_6 = 131 / 286

#> class_7 = 69 / 399

#> Totals = 1,713 / 9,917

#>

#> Hit Ratio Table: Extract with `h2o.hit_ratio_table(<model>,train = TRUE)`

#> =======================================================================

#> Top-7 Hit Ratios:

#> k hit_ratio

#> 1 1 0.827266

#> 2 2 0.983059

#> 3 3 0.997882

#> 4 4 0.999496

#> 5 5 1.000000

#> 6 6 1.000000

#> 7 7 1.000000

#>

#>

#> H2OMultinomialMetrics: deeplearning

#> ** Reported on validation data. **

#> ** Metrics reported on full validation frame **

#>

#> Validation Set Metrics:

#> =====================

#>

#> Extract validation frame with `h2o.getFrame("valid.hex")`

#> MSE: (Extract with `h2o.mse`) 0.129

#> RMSE: (Extract with `h2o.rmse`) 0.359

#> Logloss: (Extract with `h2o.logloss`) 0.418

#> Mean Per-Class Error: 0.332

#> Confusion Matrix: Extract with `h2o.confusionMatrix(<model>,valid = TRUE)`)

#> =========================================================================

#> Confusion Matrix: Row labels: Actual class; Column labels: Predicted class

#> class_1 class_2 class_3 class_4 class_5 class_6 class_7 Error

#> class_1 35220 6644 15 0 28 9 584 0.1713

#> class_2 6936 48033 663 0 191 465 92 0.1480

#> class_3 0 261 6077 19 1 785 0 0.1492

#> class_4 0 0 312 204 0 46 0 0.6370

#> class_5 98 969 72 0 721 10 0 0.6144

#> class_6 14 353 1112 14 4 1967 0 0.4322

#> class_7 655 49 0 0 0 0 3395 0.1717

#> Totals 42923 56309 8251 237 945 3282 4071 0.1758

#> Rate

#> class_1 = 7,280 / 42,500

#> class_2 = 8,347 / 56,380

#> class_3 = 1,066 / 7,143

#> class_4 = 358 / 562

#> class_5 = 1,149 / 1,870

#> class_6 = 1,497 / 3,464

#> class_7 = 704 / 4,099

#> Totals = 20,401 / 116,018

#>

#> Hit Ratio Table: Extract with `h2o.hit_ratio_table(<model>,valid = TRUE)`

#> =======================================================================

#> Top-7 Hit Ratios:

#> k hit_ratio

#> 1 1 0.824157

#> 2 2 0.983140

#> 3 3 0.998181

#> 4 4 0.999578

#> 5 5 0.999991

#> 6 6 1.000000

#> 7 7 1.000000

#>

#>

#>

#>

#> Scoring History:

#> timestamp duration training_speed epochs iterations

#> 1 2020-11-20 00:45:27 0.000 sec NA 0.00000 0

#> 2 2020-11-20 00:45:32 6.317 sec 7614 obs/sec 0.09999 1

#> 3 2020-11-20 00:45:48 22.762 sec 10809 obs/sec 0.59900 6

#> 4 2020-11-20 00:46:03 37.179 sec 11913 obs/sec 1.09886 11

#> samples training_rmse training_logloss training_r2

#> 1 0.000000 NA NA NA

#> 2 34899.000000 0.46850 0.70860 0.89309

#> 3 209061.000000 0.39074 0.48955 0.92564

#> 4 383519.000000 0.35509 0.40628 0.93859

#> training_classification_error validation_rmse validation_logloss

#> 1 NA NA NA

#> 2 0.29001 0.46797 0.70318

#> 3 0.20067 0.39502 0.49726

#> 4 0.17273 0.35949 0.41816

#> validation_r2 validation_classification_error

#> 1 NA NA

#> 2 0.88775 0.28983

#> 3 0.92002 0.20923

#> 4 0.93376 0.17584

#>

#> Variable Importances: (Extract with `h2o.varimp`)

#> =================================================

#>

#> Variable Importances:

#> variable relative_importance scaled_importance

#> 1 Wilderness_Area.area_0 1.000000 1.000000

#> 2 Horizontal_Distance_To_Roadways 0.931456 0.931456

#> 3 Elevation 0.861825 0.861825

#> 4 Horizontal_Distance_To_Fire_Points 0.848471 0.848471

#> 5 Wilderness_Area.area_2 0.789438 0.789438

#> percentage

#> 1 0.033344

#> 2 0.031058

#> 3 0.028736

#> 4 0.028291

#> 5 0.026323

#>

#> ---

#> variable relative_importance scaled_importance percentage

#> 51 Hillshade_9am 0.416170 0.416170 0.013877

#> 52 Slope 0.376747 0.376747 0.012562

#> 53 Hillshade_3pm 0.354328 0.354328 0.011815

#> 54 Aspect 0.273095 0.273095 0.009106

#> 55 Soil_Type.missing(NA) 0.000000 0.000000 0.000000

#> 56 Wilderness_Area.missing(NA) 0.000000 0.000000 0.000000Inspect the model in Flow for more information about model building etc. by issuing a cell with the content getModel “dl_model_first”, and pressing Ctrl-Enter.

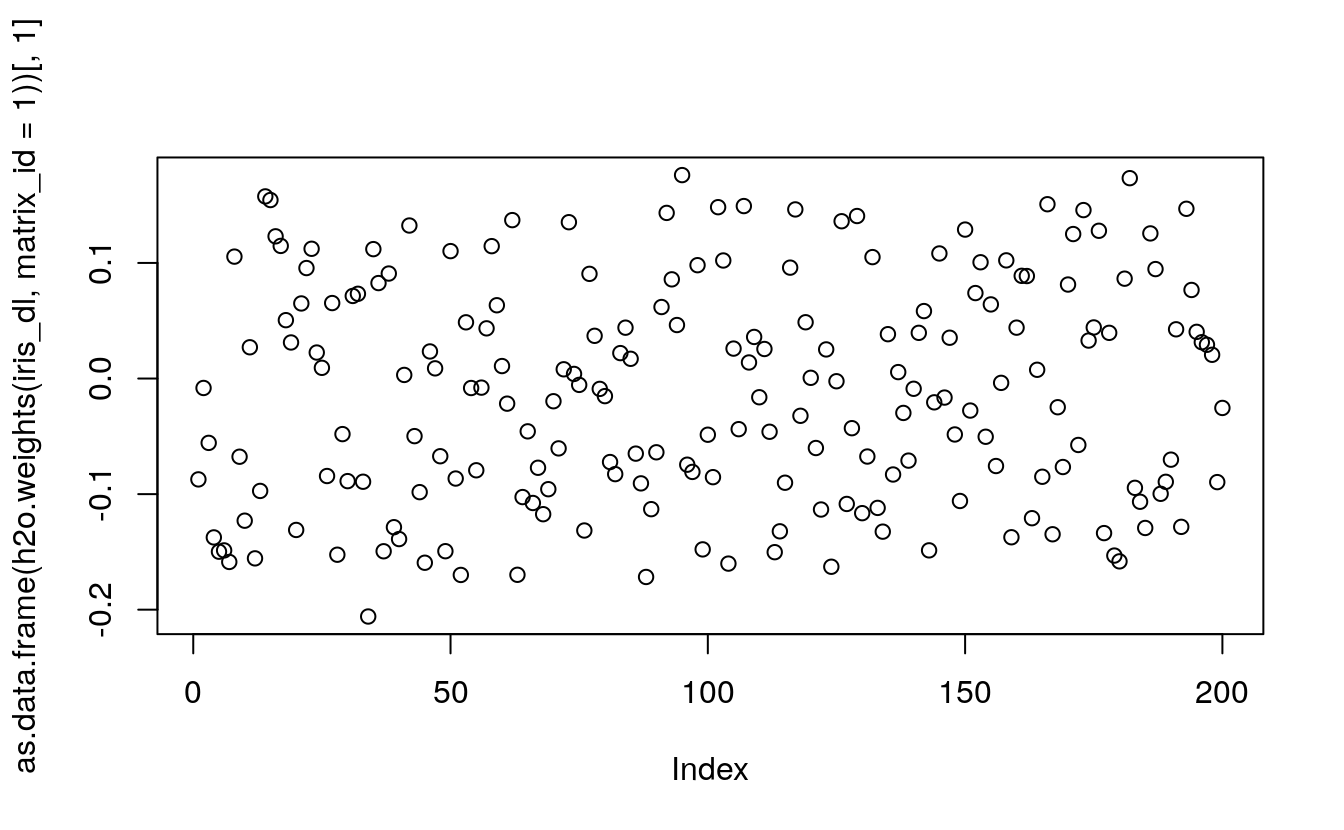

46.5.2 Variable Importances

Variable importances for Neural Network models are notoriously difficult to compute, and there are many pitfalls. H2O Deep Learning has implemented the method of Gedeon, and returns relative variable importances in descending order of importance.

head(as.data.frame(h2o.varimp(m1)))

#> variable relative_importance scaled_importance

#> 1 Wilderness_Area.area_0 1.000 1.000

#> 2 Horizontal_Distance_To_Roadways 0.931 0.931

#> 3 Elevation 0.862 0.862

#> 4 Horizontal_Distance_To_Fire_Points 0.848 0.848

#> 5 Wilderness_Area.area_2 0.789 0.789

#> 6 Wilderness_Area.area_1 0.762 0.762

#> percentage

#> 1 0.0333

#> 2 0.0311

#> 3 0.0287

#> 4 0.0283

#> 5 0.0263

#> 6 0.025446.5.3 Early Stopping

Now we run another, smaller network, and we let it stop automatically once the misclassification rate converges (specifically, if the moving average of length 2 does not improve by at least 1% for 2 consecutive scoring events). We also sample the validation set to 10,000 rows for faster scoring.

m2 <- h2o.deeplearning(

model_id="dl_model_faster",

training_frame=train,

validation_frame=valid,

x=predictors,

y=response,

hidden=c(32,32,32), ## small network, runs faster

epochs=1000000, ## hopefully converges earlier...

score_validation_samples=10000, ## sample the validation dataset (faster)

stopping_rounds=2,

stopping_metric="misclassification", ## could be "MSE","logloss","r2"

stopping_tolerance=0.01

)

#>

|

| | 0%

|

|======================================================================| 100%

summary(m2)

#> Model Details:

#> ==============

#>

#> H2OMultinomialModel: deeplearning

#> Model Key: dl_model_faster

#> Status of Neuron Layers: predicting Cover_Type, 7-class classification, multinomial distribution, CrossEntropy loss, 4,167 weights/biases, 57.9 KB, 6,997,636 training samples, mini-batch size 1

#> layer units type dropout l1 l2 mean_rate rate_rms momentum

#> 1 1 56 Input 0.00 % NA NA NA NA NA

#> 2 2 32 Rectifier 0.00 % 0.000000 0.000000 0.044750 0.205196 0.000000

#> 3 3 32 Rectifier 0.00 % 0.000000 0.000000 0.000365 0.000203 0.000000

#> 4 4 32 Rectifier 0.00 % 0.000000 0.000000 0.000650 0.000469 0.000000

#> 5 5 7 Softmax NA 0.000000 0.000000 0.081947 0.251862 0.000000

#> mean_weight weight_rms mean_bias bias_rms

#> 1 NA NA NA NA

#> 2 0.002544 0.302103 0.183948 0.343792

#> 3 -0.042678 0.401781 0.630921 0.764434

#> 4 0.018843 0.577631 0.723080 0.573754

#> 5 -3.421288 3.540264 -4.262941 2.090440

#>

#> H2OMultinomialMetrics: deeplearning

#> ** Reported on training data. **

#> ** Metrics reported on temporary training frame with 9899 samples **

#>

#> Training Set Metrics:

#> =====================

#>

#> MSE: (Extract with `h2o.mse`) 0.108

#> RMSE: (Extract with `h2o.rmse`) 0.329

#> Logloss: (Extract with `h2o.logloss`) 0.359

#> Mean Per-Class Error: 0.209

#> Confusion Matrix: Extract with `h2o.confusionMatrix(<model>,train = TRUE)`)

#> =========================================================================

#> Confusion Matrix: Row labels: Actual class; Column labels: Predicted class

#> class_1 class_2 class_3 class_4 class_5 class_6 class_7 Error

#> class_1 3041 513 0 0 2 1 43 0.1553

#> class_2 453 4303 40 0 36 35 10 0.1177

#> class_3 0 25 495 17 1 52 0 0.1610

#> class_4 0 0 11 38 0 4 0 0.2830

#> class_5 9 43 2 0 116 1 0 0.3216

#> class_6 2 29 62 4 2 200 0 0.3311

#> class_7 28 1 0 0 0 0 280 0.0939

#> Totals 3533 4914 610 59 157 293 333 0.1441

#> Rate

#> class_1 = 559 / 3,600

#> class_2 = 574 / 4,877

#> class_3 = 95 / 590

#> class_4 = 15 / 53

#> class_5 = 55 / 171

#> class_6 = 99 / 299

#> class_7 = 29 / 309

#> Totals = 1,426 / 9,899

#>

#> Hit Ratio Table: Extract with `h2o.hit_ratio_table(<model>,train = TRUE)`

#> =======================================================================

#> Top-7 Hit Ratios:

#> k hit_ratio

#> 1 1 0.855945

#> 2 2 0.986463

#> 3 3 0.998485

#> 4 4 0.999596

#> 5 5 1.000000

#> 6 6 1.000000

#> 7 7 1.000000

#>

#>

#> H2OMultinomialMetrics: deeplearning

#> ** Reported on validation data. **

#> ** Metrics reported on temporary validation frame with 9964 samples **

#>

#> Validation Set Metrics:

#> =====================

#>

#> MSE: (Extract with `h2o.mse`) 0.112

#> RMSE: (Extract with `h2o.rmse`) 0.334

#> Logloss: (Extract with `h2o.logloss`) 0.376

#> Mean Per-Class Error: 0.245

#> Confusion Matrix: Extract with `h2o.confusionMatrix(<model>,valid = TRUE)`)

#> =========================================================================

#> Confusion Matrix: Row labels: Actual class; Column labels: Predicted class

#> class_1 class_2 class_3 class_4 class_5 class_6 class_7 Error

#> class_1 3093 507 0 0 4 1 36 0.1505

#> class_2 457 4221 36 0 44 33 6 0.1201

#> class_3 0 25 529 20 0 69 0 0.1773

#> class_4 0 0 12 33 0 4 0 0.3265

#> class_5 8 64 11 0 98 0 0 0.4586

#> class_6 2 25 72 2 2 198 0 0.3422

#> class_7 47 1 0 0 0 0 304 0.1364

#> Totals 3607 4843 660 55 148 305 346 0.1493

#> Rate

#> class_1 = 548 / 3,641

#> class_2 = 576 / 4,797

#> class_3 = 114 / 643

#> class_4 = 16 / 49

#> class_5 = 83 / 181

#> class_6 = 103 / 301

#> class_7 = 48 / 352

#> Totals = 1,488 / 9,964

#>

#> Hit Ratio Table: Extract with `h2o.hit_ratio_table(<model>,valid = TRUE)`

#> =======================================================================

#> Top-7 Hit Ratios:

#> k hit_ratio

#> 1 1 0.850662

#> 2 2 0.986451

#> 3 3 0.997290

#> 4 4 0.999498

#> 5 5 1.000000

#> 6 6 1.000000

#> 7 7 1.000000

#>

#>

#>

#>

#> Scoring History:

#> timestamp duration training_speed epochs iterations

#> 1 2020-11-20 00:46:05 0.000 sec NA 0.00000 0

#> 2 2020-11-20 00:46:06 1.165 sec 90270 obs/sec 0.28580 1

#> 3 2020-11-20 00:46:11 6.524 sec 108717 obs/sec 2.00480 7

#> 4 2020-11-20 00:46:16 11.558 sec 113586 obs/sec 3.72313 13

#> 5 2020-11-20 00:46:22 17.093 sec 117990 obs/sec 5.72920 20

#> 6 2020-11-20 00:46:27 22.467 sec 121073 obs/sec 7.73277 27

#> 7 2020-11-20 00:46:33 27.777 sec 123275 obs/sec 9.73939 34

#> 8 2020-11-20 00:46:38 32.886 sec 125556 obs/sec 11.73989 41

#> 9 2020-11-20 00:46:43 37.997 sec 127220 obs/sec 13.74839 48

#> 10 2020-11-20 00:46:49 43.645 sec 129171 obs/sec 16.03798 56

#> 11 2020-11-20 00:46:54 48.693 sec 130235 obs/sec 18.04370 63

#> 12 2020-11-20 00:46:59 53.711 sec 131172 obs/sec 20.04967 70

#> 13 2020-11-20 00:46:59 53.740 sec 131167 obs/sec 20.04967 70

#> samples training_rmse training_logloss training_r2

#> 1 0.000000 NA NA NA

#> 2 99749.000000 0.43749 0.59375 0.89724

#> 3 699706.000000 0.37934 0.45952 0.92274

#> 4 1299427.000000 0.36669 0.43114 0.92781

#> 5 1999578.000000 0.35577 0.41289 0.93205

#> 6 2698851.000000 0.35258 0.40475 0.93326

#> 7 3399193.000000 0.34105 0.38773 0.93755

#> 8 4097398.000000 0.33517 0.37329 0.93968

#> 9 4798393.000000 0.33161 0.36779 0.94096

#> 10 5597496.000000 0.32921 0.35920 0.94181

#> 11 6297522.000000 0.32682 0.35087 0.94265

#> 12 6997636.000000 0.32666 0.35614 0.94271

#> 13 6997636.000000 0.32921 0.35920 0.94181

#> training_classification_error validation_rmse validation_logloss

#> 1 NA NA NA

#> 2 0.25366 0.43677 0.59368

#> 3 0.19376 0.38519 0.47204

#> 4 0.18032 0.37263 0.44703

#> 5 0.16971 0.36044 0.42456

#> 6 0.16365 0.35549 0.41467

#> 7 0.15719 0.34788 0.40580

#> 8 0.15173 0.33894 0.38546

#> 9 0.14426 0.33504 0.38034

#> 10 0.14405 0.33441 0.37603

#> 11 0.14304 0.33181 0.36860

#> 12 0.14143 0.33281 0.37346

#> 13 0.14405 0.33441 0.37603

#> validation_r2 validation_classification_error

#> 1 NA NA

#> 2 0.90345 0.25161

#> 3 0.92491 0.19791

#> 4 0.92973 0.18446

#> 5 0.93425 0.17021

#> 6 0.93604 0.16831

#> 7 0.93875 0.16038

#> 8 0.94186 0.15295

#> 9 0.94319 0.14954

#> 10 0.94340 0.14934

#> 11 0.94428 0.14934

#> 12 0.94394 0.15004

#> 13 0.94340 0.14934

#>

#> Variable Importances: (Extract with `h2o.varimp`)

#> =================================================

#>

#> Variable Importances:

#> variable relative_importance scaled_importance

#> 1 Horizontal_Distance_To_Roadways 1.000000 1.000000

#> 2 Wilderness_Area.area_0 0.987520 0.987520

#> 3 Elevation 0.977226 0.977226

#> 4 Wilderness_Area.area_1 0.936496 0.936496

#> 5 Soil_Type.type_21 0.839471 0.839471

#> percentage

#> 1 0.034230

#> 2 0.033803

#> 3 0.033451

#> 4 0.032056

#> 5 0.028735

#>

#> ---

#> variable relative_importance scaled_importance

#> 51 Soil_Type.type_14 0.272257 0.272257

#> 52 Vertical_Distance_To_Hydrology 0.246618 0.246618

#> 53 Slope 0.165276 0.165276

#> 54 Aspect 0.049482 0.049482

#> 55 Soil_Type.missing(NA) 0.000000 0.000000

#> 56 Wilderness_Area.missing(NA) 0.000000 0.000000

#> percentage

#> 51 0.009319

#> 52 0.008442

#> 53 0.005657

#> 54 0.001694

#> 55 0.000000

#> 56 0.000000

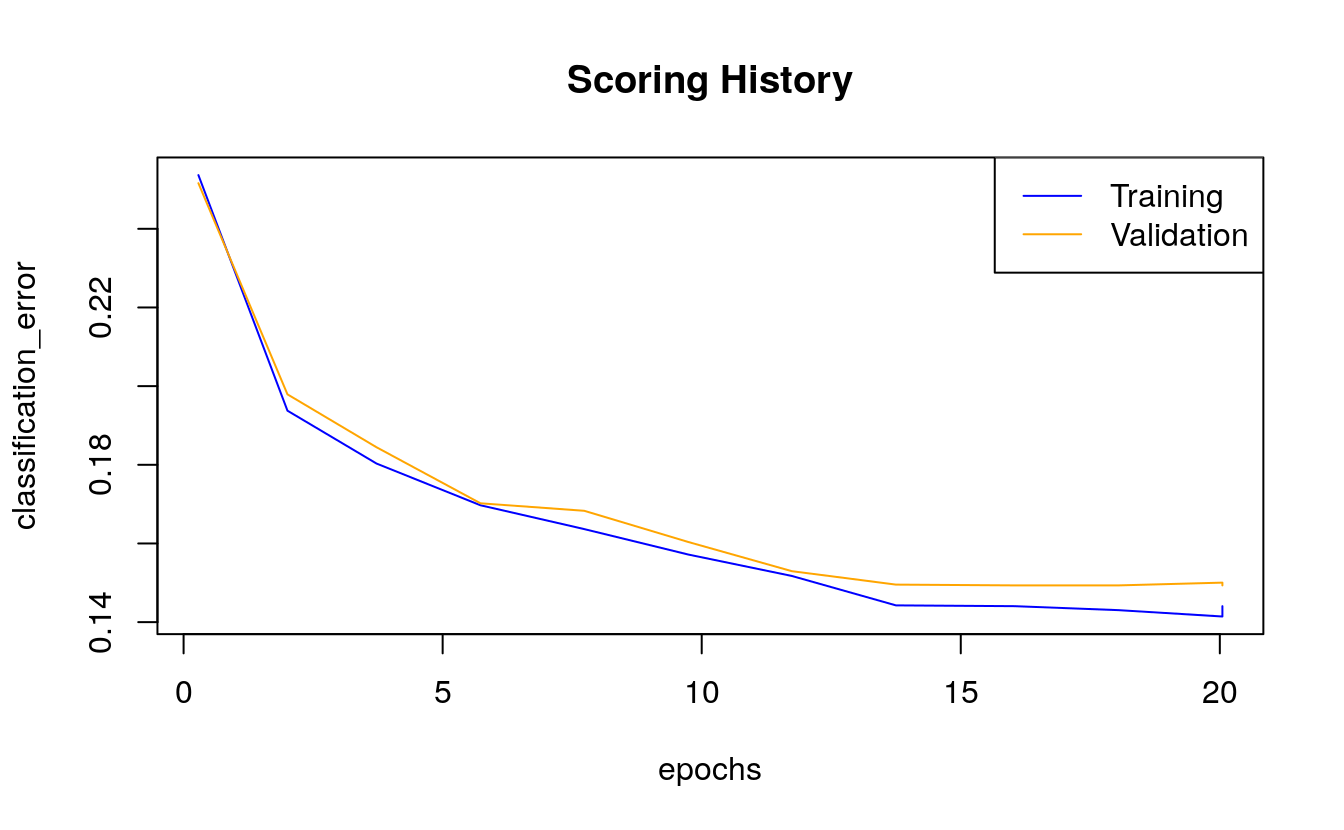

plot(m2)

46.5.4 Adaptive Learning Rate

By default, H2O Deep Learning uses an adaptive learning rate (ADADELTA) for its stochastic gradient descent optimization. There are only two tuning parameters for this method: rho and epsilon, which balance the global and local search efficiencies. rho is the similarity to prior weight updates (similar to momentum), and epsilon is a parameter that prevents the optimization to get stuck in local optima. Defaults are rho=0.99 and epsilon=1e-8. For cases where convergence speed is very important, it might make sense to perform a few runs to optimize these two parameters (e.g., with rho in c(0.9,0.95,0.99,0.999) and epsilon in c(1e-10,1e-8,1e-6,1e-4)). Of course, as always with grid searches, caution has to be applied when extrapolating grid search results to a different parameter regime (e.g., for more epochs or different layer topologies or activation functions, etc.).

If adaptive_rate is disabled, several manual learning rate parameters become important: rate, rate_annealing, rate_decay, momentum_start, momentum_ramp, momentum_stable and nesterov_accelerated_gradient, the discussion of which we leave to H2O Deep Learning booklet.

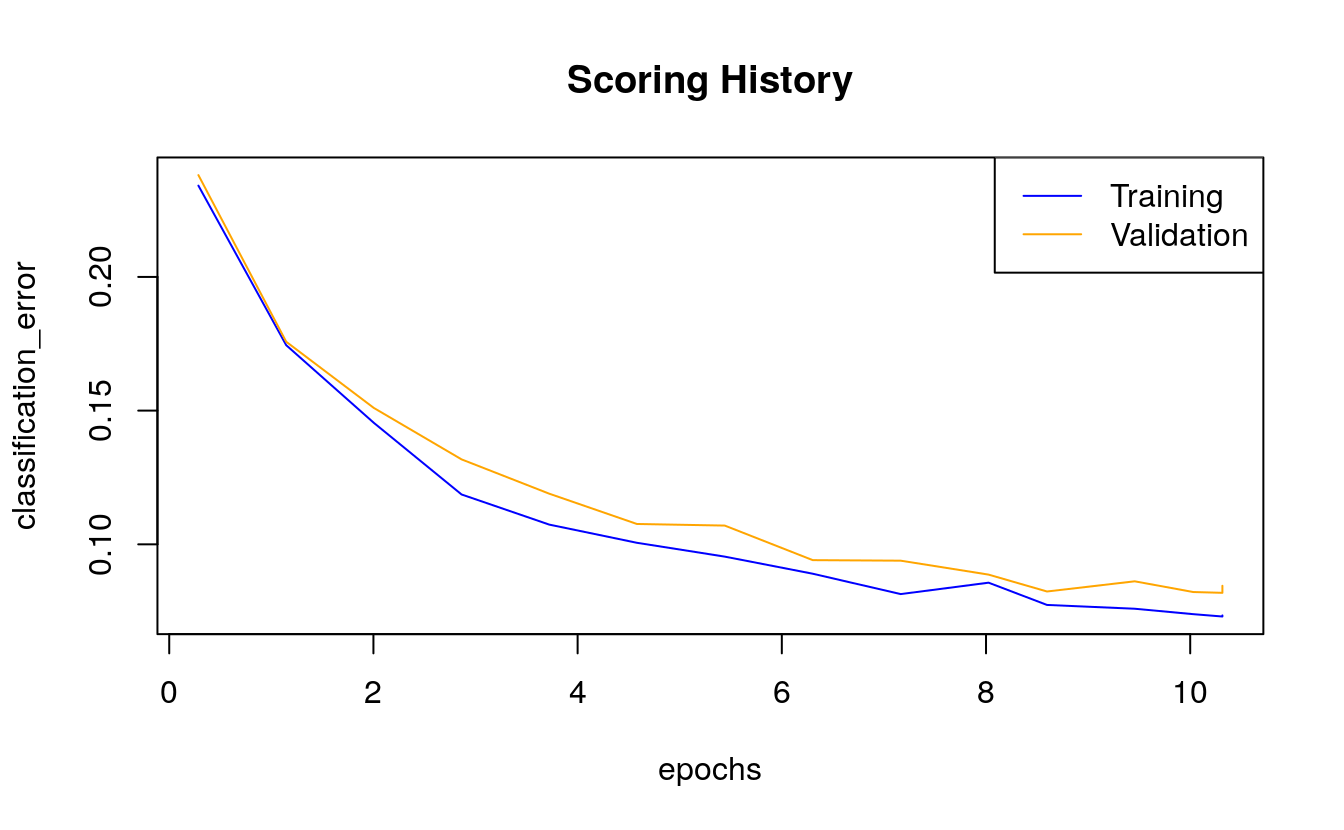

46.5.5 Tuning

With some tuning, it is possible to obtain less than 10% test set error rate in about one minute. Error rates of below 5% are possible with larger models. Note that deep tree methods can be more effective for this dataset than Deep Learning, as they directly partition the space into sectors, which seems to be needed here.

m3 <- h2o.deeplearning(

model_id="dl_model_tuned",

training_frame=train,

validation_frame=valid,

x=predictors,

y=response,

overwrite_with_best_model=F, ## Return final model after 10 epochs, even if not the best

hidden=c(128,128,128), ## more hidden layers -> more complex interactions

epochs=10, ## to keep it short enough

score_validation_samples=10000, ## downsample validation set for faster scoring

score_duty_cycle=0.025, ## don't score more than 2.5% of the wall time

adaptive_rate=F, ## manually tuned learning rate

rate=0.01,

rate_annealing=2e-6,

momentum_start=0.2, ## manually tuned momentum

momentum_stable=0.4,

momentum_ramp=1e7,

l1=1e-5, ## add some L1/L2 regularization

l2=1e-5,

max_w2=10 ## helps stability for Rectifier

)

#>

|

| | 0%

|

|== | 3%

|

|==== | 6%

|

|====== | 9%

|

|======== | 11%

|

|========== | 14%

|

|============ | 17%

|

|============== | 20%

|

|================ | 23%

|

|================== | 26%

|

|==================== | 29%

|

|====================== | 32%

|

|======================== | 34%

|

|========================== | 37%

|

|============================ | 40%

|

|============================== | 43%

|

|================================ | 46%

|

|================================== | 49%

|

|==================================== | 52%

|

|====================================== | 54%

|

|======================================== | 57%

|

|========================================== | 60%

|

|============================================ | 63%

|

|============================================== | 66%

|

|================================================ | 69%

|

|================================================== | 72%

|

|==================================================== | 74%

|

|====================================================== | 77%

|

|======================================================== | 80%

|

|========================================================== | 83%

|

|============================================================ | 86%

|

|============================================================== | 89%

|

|================================================================ | 92%

|

|================================================================== | 95%

|

|==================================================================== | 97%

|

|======================================================================| 100%

summary(m3)

#> Model Details:

#> ==============

#>

#> H2OMultinomialModel: deeplearning

#> Model Key: dl_model_tuned

#> Status of Neuron Layers: predicting Cover_Type, 7-class classification, multinomial distribution, CrossEntropy loss, 41,223 weights/biases, 332.9 KB, 3,500,387 training samples, mini-batch size 1

#> layer units type dropout l1 l2 mean_rate rate_rms momentum

#> 1 1 56 Input 0.00 % NA NA NA NA NA

#> 2 2 128 Rectifier 0.00 % 0.000010 0.000010 0.001250 0.000000 0.270008

#> 3 3 128 Rectifier 0.00 % 0.000010 0.000010 0.001250 0.000000 0.270008

#> 4 4 128 Rectifier 0.00 % 0.000010 0.000010 0.001250 0.000000 0.270008

#> 5 5 7 Softmax NA 0.000010 0.000010 0.001250 0.000000 0.270008

#> mean_weight weight_rms mean_bias bias_rms

#> 1 NA NA NA NA

#> 2 -0.012577 0.312181 0.023897 0.327174

#> 3 -0.058654 0.222585 0.835404 0.353978

#> 4 -0.057502 0.216801 0.801143 0.205884

#> 5 -0.033170 0.269806 0.003331 0.833177

#>

#> H2OMultinomialMetrics: deeplearning

#> ** Reported on training data. **

#> ** Metrics reported on temporary training frame with 9853 samples **

#>

#> Training Set Metrics:

#> =====================

#>

#> MSE: (Extract with `h2o.mse`) 0.0542

#> RMSE: (Extract with `h2o.rmse`) 0.233

#> Logloss: (Extract with `h2o.logloss`) 0.181

#> Mean Per-Class Error: 0.116

#> Confusion Matrix: Extract with `h2o.confusionMatrix(<model>,train = TRUE)`)

#> =========================================================================

#> Confusion Matrix: Row labels: Actual class; Column labels: Predicted class

#> class_1 class_2 class_3 class_4 class_5 class_6 class_7 Error

#> class_1 3414 219 0 0 3 0 18 0.0657

#> class_2 285 4487 7 0 12 4 2 0.0646

#> class_3 0 19 560 8 1 29 0 0.0924

#> class_4 0 0 1 42 0 1 0 0.0455

#> class_5 4 32 0 0 107 1 0 0.2569

#> class_6 0 18 45 0 0 215 0 0.2266

#> class_7 18 1 0 0 0 0 300 0.0596

#> Totals 3721 4776 613 50 123 250 320 0.0739

#> Rate

#> class_1 = 240 / 3,654

#> class_2 = 310 / 4,797

#> class_3 = 57 / 617

#> class_4 = 2 / 44

#> class_5 = 37 / 144

#> class_6 = 63 / 278

#> class_7 = 19 / 319

#> Totals = 728 / 9,853

#>

#> Hit Ratio Table: Extract with `h2o.hit_ratio_table(<model>,train = TRUE)`

#> =======================================================================

#> Top-7 Hit Ratios:

#> k hit_ratio

#> 1 1 0.926114

#> 2 2 0.996549

#> 3 3 0.999696

#> 4 4 1.000000

#> 5 5 1.000000

#> 6 6 1.000000

#> 7 7 1.000000

#>

#>

#> H2OMultinomialMetrics: deeplearning

#> ** Reported on validation data. **

#> ** Metrics reported on temporary validation frame with 9980 samples **

#>

#> Validation Set Metrics:

#> =====================

#>

#> MSE: (Extract with `h2o.mse`) 0.0611

#> RMSE: (Extract with `h2o.rmse`) 0.247

#> Logloss: (Extract with `h2o.logloss`) 0.201

#> Mean Per-Class Error: 0.135

#> Confusion Matrix: Extract with `h2o.confusionMatrix(<model>,valid = TRUE)`)

#> =========================================================================

#> Confusion Matrix: Row labels: Actual class; Column labels: Predicted class

#> class_1 class_2 class_3 class_4 class_5 class_6 class_7 Error

#> class_1 3378 233 0 0 4 1 17 0.0702

#> class_2 307 4451 5 0 30 6 6 0.0737

#> class_3 1 12 547 12 1 34 0 0.0988

#> class_4 0 0 4 41 0 9 0 0.2407

#> class_5 2 22 0 0 142 1 0 0.1497

#> class_6 0 27 52 4 0 281 0 0.2280

#> class_7 29 1 0 0 0 0 320 0.0857

#> Totals 3717 4746 608 57 177 332 343 0.0822

#> Rate

#> class_1 = 255 / 3,633

#> class_2 = 354 / 4,805

#> class_3 = 60 / 607

#> class_4 = 13 / 54

#> class_5 = 25 / 167

#> class_6 = 83 / 364

#> class_7 = 30 / 350

#> Totals = 820 / 9,980

#>

#> Hit Ratio Table: Extract with `h2o.hit_ratio_table(<model>,valid = TRUE)`

#> =======================================================================

#> Top-7 Hit Ratios:

#> k hit_ratio

#> 1 1 0.917836

#> 2 2 0.996293

#> 3 3 0.999800

#> 4 4 1.000000

#> 5 5 1.000000

#> 6 6 1.000000

#> 7 7 1.000000

#>

#>

#>

#>

#> Scoring History:

#> timestamp duration training_speed epochs iterations

#> 1 2020-11-20 00:46:59 0.000 sec NA 0.00000 0

#> 2 2020-11-20 00:47:04 5.374 sec 19667 obs/sec 0.28570 1

#> 3 2020-11-20 00:47:16 17.004 sec 24298 obs/sec 1.14580 4

#> 4 2020-11-20 00:47:27 27.634 sec 26041 obs/sec 2.00380 7

#> 5 2020-11-20 00:47:37 38.130 sec 26919 obs/sec 2.86322 10

#> 6 2020-11-20 00:47:47 48.268 sec 27612 obs/sec 3.72185 13

#> 7 2020-11-20 00:47:58 58.391 sec 28070 obs/sec 4.57999 16

#> 8 2020-11-20 00:48:08 1 min 8.665 sec 28342 obs/sec 5.44094 19

#> 9 2020-11-20 00:48:18 1 min 18.808 sec 28589 obs/sec 6.30286 22

#> 10 2020-11-20 00:48:28 1 min 29.079 sec 28738 obs/sec 7.16387 25

#> 11 2020-11-20 00:48:39 1 min 39.471 sec 28814 obs/sec 8.02484 28

#> 12 2020-11-20 00:48:47 1 min 47.630 sec 28540 obs/sec 8.59717 30

#> 13 2020-11-20 00:48:58 1 min 58.814 sec 28428 obs/sec 9.45631 33

#> 14 2020-11-20 00:49:05 2 min 5.602 sec 28530 obs/sec 10.02933 35

#> samples training_rmse training_logloss training_r2

#> 1 0.000000 NA NA NA

#> 2 99715.000000 0.42091 0.54792 0.90389

#> 3 399903.000000 0.36110 0.40892 0.92926

#> 4 699355.000000 0.32465 0.33522 0.94282

#> 5 999306.000000 0.30337 0.29947 0.95007

#> 6 1298980.000000 0.28769 0.26896 0.95510

#> 7 1598486.000000 0.27389 0.24600 0.95930

#> 8 1898968.000000 0.26872 0.23760 0.96083

#> 9 2199794.000000 0.25677 0.21771 0.96423

#> 10 2500298.000000 0.25132 0.20910 0.96574

#> 11 2800789.000000 0.24956 0.20557 0.96621

#> 12 3000543.000000 0.23992 0.19289 0.96877

#> 13 3300393.000000 0.23879 0.18893 0.96907

#> 14 3500387.000000 0.23290 0.18055 0.97057

#> training_classification_error validation_rmse validation_logloss

#> 1 NA NA NA

#> 2 0.23414 0.42117 0.54686

#> 3 0.17446 0.36241 0.41089

#> 4 0.14544 0.33231 0.34976

#> 5 0.11864 0.31353 0.31560

#> 6 0.10738 0.29535 0.27967

#> 7 0.10058 0.28128 0.25850

#> 8 0.09540 0.27812 0.25019

#> 9 0.08901 0.26701 0.23320

#> 10 0.08140 0.26332 0.22801

#> 11 0.08566 0.25778 0.21911

#> 12 0.07734 0.24912 0.20497

#> 13 0.07592 0.25180 0.20628

#> 14 0.07389 0.24714 0.20143

#> validation_r2 validation_classification_error

#> 1 NA NA

#> 2 0.91352 0.23808

#> 3 0.93597 0.17575

#> 4 0.94616 0.15100

#> 5 0.95208 0.13176

#> 6 0.95747 0.11894

#> 7 0.96143 0.10762

#> 8 0.96229 0.10701

#> 9 0.96524 0.09409

#> 10 0.96620 0.09389

#> 11 0.96760 0.08868

#> 12 0.96974 0.08236

#> 13 0.96909 0.08617

#> 14 0.97022 0.08216

#>

#> Variable Importances: (Extract with `h2o.varimp`)

#> =================================================

#>

#> Variable Importances:

#> variable relative_importance scaled_importance

#> 1 Elevation 1.000000 1.000000

#> 2 Horizontal_Distance_To_Roadways 0.845648 0.845648

#> 3 Horizontal_Distance_To_Fire_Points 0.806406 0.806406

#> 4 Wilderness_Area.area_0 0.613771 0.613771

#> 5 Wilderness_Area.area_2 0.577823 0.577823

#> percentage

#> 1 0.051920

#> 2 0.043906

#> 3 0.041869

#> 4 0.031867

#> 5 0.030001

#>

#> ---

#> variable relative_importance scaled_importance percentage

#> 51 Soil_Type.type_17 0.143857 0.143857 0.007469

#> 52 Soil_Type.type_7 0.143357 0.143357 0.007443

#> 53 Soil_Type.type_14 0.142947 0.142947 0.007422

#> 54 Soil_Type.type_24 0.135752 0.135752 0.007048

#> 55 Soil_Type.missing(NA) 0.000000 0.000000 0.000000

#> 56 Wilderness_Area.missing(NA) 0.000000 0.000000 0.000000Let’s compare the training error with the validation and test set errors

h2o.performance(m3, train=T) ## sampled training data (from model building)

#> H2OMultinomialMetrics: deeplearning

#> ** Reported on training data. **

#> ** Metrics reported on temporary training frame with 9853 samples **

#>

#> Training Set Metrics:

#> =====================

#>

#> MSE: (Extract with `h2o.mse`) 0.0542

#> RMSE: (Extract with `h2o.rmse`) 0.233

#> Logloss: (Extract with `h2o.logloss`) 0.181

#> Mean Per-Class Error: 0.116

#> Confusion Matrix: Extract with `h2o.confusionMatrix(<model>,train = TRUE)`)

#> =========================================================================

#> Confusion Matrix: Row labels: Actual class; Column labels: Predicted class

#> class_1 class_2 class_3 class_4 class_5 class_6 class_7 Error

#> class_1 3414 219 0 0 3 0 18 0.0657

#> class_2 285 4487 7 0 12 4 2 0.0646

#> class_3 0 19 560 8 1 29 0 0.0924

#> class_4 0 0 1 42 0 1 0 0.0455

#> class_5 4 32 0 0 107 1 0 0.2569

#> class_6 0 18 45 0 0 215 0 0.2266

#> class_7 18 1 0 0 0 0 300 0.0596

#> Totals 3721 4776 613 50 123 250 320 0.0739

#> Rate

#> class_1 = 240 / 3,654

#> class_2 = 310 / 4,797

#> class_3 = 57 / 617

#> class_4 = 2 / 44

#> class_5 = 37 / 144

#> class_6 = 63 / 278

#> class_7 = 19 / 319

#> Totals = 728 / 9,853

#>

#> Hit Ratio Table: Extract with `h2o.hit_ratio_table(<model>,train = TRUE)`

#> =======================================================================

#> Top-7 Hit Ratios:

#> k hit_ratio

#> 1 1 0.926114

#> 2 2 0.996549

#> 3 3 0.999696

#> 4 4 1.000000

#> 5 5 1.000000

#> 6 6 1.000000

#> 7 7 1.000000

h2o.performance(m3, valid=T) ## sampled validation data (from model building)

#> H2OMultinomialMetrics: deeplearning

#> ** Reported on validation data. **

#> ** Metrics reported on temporary validation frame with 9980 samples **

#>

#> Validation Set Metrics:

#> =====================

#>

#> MSE: (Extract with `h2o.mse`) 0.0611

#> RMSE: (Extract with `h2o.rmse`) 0.247

#> Logloss: (Extract with `h2o.logloss`) 0.201

#> Mean Per-Class Error: 0.135

#> Confusion Matrix: Extract with `h2o.confusionMatrix(<model>,valid = TRUE)`)

#> =========================================================================

#> Confusion Matrix: Row labels: Actual class; Column labels: Predicted class

#> class_1 class_2 class_3 class_4 class_5 class_6 class_7 Error

#> class_1 3378 233 0 0 4 1 17 0.0702

#> class_2 307 4451 5 0 30 6 6 0.0737

#> class_3 1 12 547 12 1 34 0 0.0988

#> class_4 0 0 4 41 0 9 0 0.2407

#> class_5 2 22 0 0 142 1 0 0.1497

#> class_6 0 27 52 4 0 281 0 0.2280

#> class_7 29 1 0 0 0 0 320 0.0857

#> Totals 3717 4746 608 57 177 332 343 0.0822

#> Rate

#> class_1 = 255 / 3,633

#> class_2 = 354 / 4,805

#> class_3 = 60 / 607

#> class_4 = 13 / 54

#> class_5 = 25 / 167

#> class_6 = 83 / 364

#> class_7 = 30 / 350

#> Totals = 820 / 9,980

#>

#> Hit Ratio Table: Extract with `h2o.hit_ratio_table(<model>,valid = TRUE)`

#> =======================================================================

#> Top-7 Hit Ratios:

#> k hit_ratio

#> 1 1 0.917836

#> 2 2 0.996293

#> 3 3 0.999800

#> 4 4 1.000000

#> 5 5 1.000000

#> 6 6 1.000000

#> 7 7 1.000000

h2o.performance(m3, newdata=train) ## full training data

#> H2OMultinomialMetrics: deeplearning

#>

#> Test Set Metrics:

#> =====================

#>

#> MSE: (Extract with `h2o.mse`) 0.0567

#> RMSE: (Extract with `h2o.rmse`) 0.238

#> Logloss: (Extract with `h2o.logloss`) 0.188

#> Mean Per-Class Error: 0.122

#> Confusion Matrix: Extract with `h2o.confusionMatrix(<model>, <data>)`)

#> =========================================================================

#> Confusion Matrix: Row labels: Actual class; Column labels: Predicted class

#> class_1 class_2 class_3 class_4 class_5 class_6 class_7 Error

#> class_1 118796 7547 1 0 120 58 598 0.0655

#> class_2 10285 158771 251 0 629 316 90 0.0679

#> class_3 2 646 19442 296 47 1009 0 0.0933

#> class_4 0 2 166 1435 0 55 0 0.1345

#> class_5 71 1084 87 0 4451 26 1 0.2219

#> class_6 12 504 1363 104 5 8445 0 0.1905

#> class_7 860 95 0 0 2 0 11343 0.0778

#> Totals 130026 168649 21310 1835 5254 9909 12032 0.0754

#> Rate

#> class_1 = 8,324 / 127,120

#> class_2 = 11,571 / 170,342

#> class_3 = 2,000 / 21,442

#> class_4 = 223 / 1,658

#> class_5 = 1,269 / 5,720

#> class_6 = 1,988 / 10,433

#> class_7 = 957 / 12,300

#> Totals = 26,332 / 349,015

#>

#> Hit Ratio Table: Extract with `h2o.hit_ratio_table(<model>, <data>)`

#> =======================================================================

#> Top-7 Hit Ratios:

#> k hit_ratio

#> 1 1 0.924553

#> 2 2 0.996662

#> 3 3 0.999811

#> 4 4 0.999966

#> 5 5 0.999997

#> 6 6 1.000000

#> 7 7 1.000000

h2o.performance(m3, newdata=valid) ## full validation data

#> H2OMultinomialMetrics: deeplearning

#>

#> Test Set Metrics:

#> =====================

#>

#> MSE: (Extract with `h2o.mse`) 0.0626

#> RMSE: (Extract with `h2o.rmse`) 0.25

#> Logloss: (Extract with `h2o.logloss`) 0.208

#> Mean Per-Class Error: 0.138

#> Confusion Matrix: Extract with `h2o.confusionMatrix(<model>, <data>)`)

#> =========================================================================

#> Confusion Matrix: Row labels: Actual class; Column labels: Predicted class

#> class_1 class_2 class_3 class_4 class_5 class_6 class_7 Error

#> class_1 39405 2809 2 0 55 15 214 0.0728

#> class_2 3684 52186 91 0 256 122 41 0.0744

#> class_3 5 257 6389 117 12 363 0 0.1056

#> class_4 0 0 59 473 0 30 0 0.1584

#> class_5 29 403 40 0 1388 10 0 0.2578

#> class_6 4 223 488 31 2 2716 0 0.2159

#> class_7 321 24 0 0 1 0 3753 0.0844

#> Totals 43448 55902 7069 621 1714 3256 4008 0.0837

#> Rate

#> class_1 = 3,095 / 42,500

#> class_2 = 4,194 / 56,380

#> class_3 = 754 / 7,143

#> class_4 = 89 / 562

#> class_5 = 482 / 1,870

#> class_6 = 748 / 3,464

#> class_7 = 346 / 4,099

#> Totals = 9,708 / 116,018

#>

#> Hit Ratio Table: Extract with `h2o.hit_ratio_table(<model>, <data>)`

#> =======================================================================

#> Top-7 Hit Ratios:

#> k hit_ratio

#> 1 1 0.916323

#> 2 2 0.995716

#> 3 3 0.999690

#> 4 4 0.999974

#> 5 5 0.999991

#> 6 6 1.000000

#> 7 7 1.000000

h2o.performance(m3, newdata=test) ## full test data

#> H2OMultinomialMetrics: deeplearning

#>

#> Test Set Metrics:

#> =====================

#>

#> MSE: (Extract with `h2o.mse`) 0.0623

#> RMSE: (Extract with `h2o.rmse`) 0.25

#> Logloss: (Extract with `h2o.logloss`) 0.207

#> Mean Per-Class Error: 0.132

#> Confusion Matrix: Extract with `h2o.confusionMatrix(<model>, <data>)`)

#> =========================================================================

#> Confusion Matrix: Row labels: Actual class; Column labels: Predicted class

#> class_1 class_2 class_3 class_4 class_5 class_6 class_7 Error

#> class_1 39222 2714 0 0 51 8 225 0.0710

#> class_2 3722 52326 109 1 227 148 46 0.0752

#> class_3 1 272 6437 124 18 317 0 0.1021

#> class_4 0 0 53 452 0 22 0 0.1423

#> class_5 24 397 29 0 1443 10 0 0.2417

#> class_6 2 186 480 35 2 2765 0 0.2032

#> class_7 345 31 0 0 1 0 3734 0.0917

#> Totals 43316 55926 7108 612 1742 3270 4005 0.0828

#> Rate

#> class_1 = 2,998 / 42,220

#> class_2 = 4,253 / 56,579

#> class_3 = 732 / 7,169

#> class_4 = 75 / 527

#> class_5 = 460 / 1,903

#> class_6 = 705 / 3,470

#> class_7 = 377 / 4,111

#> Totals = 9,600 / 115,979

#>

#> Hit Ratio Table: Extract with `h2o.hit_ratio_table(<model>, <data>)`

#> =======================================================================

#> Top-7 Hit Ratios:

#> k hit_ratio

#> 1 1 0.917226

#> 2 2 0.995499

#> 3 3 0.999664

#> 4 4 0.999957

#> 5 5 0.999991

#> 6 6 1.000000

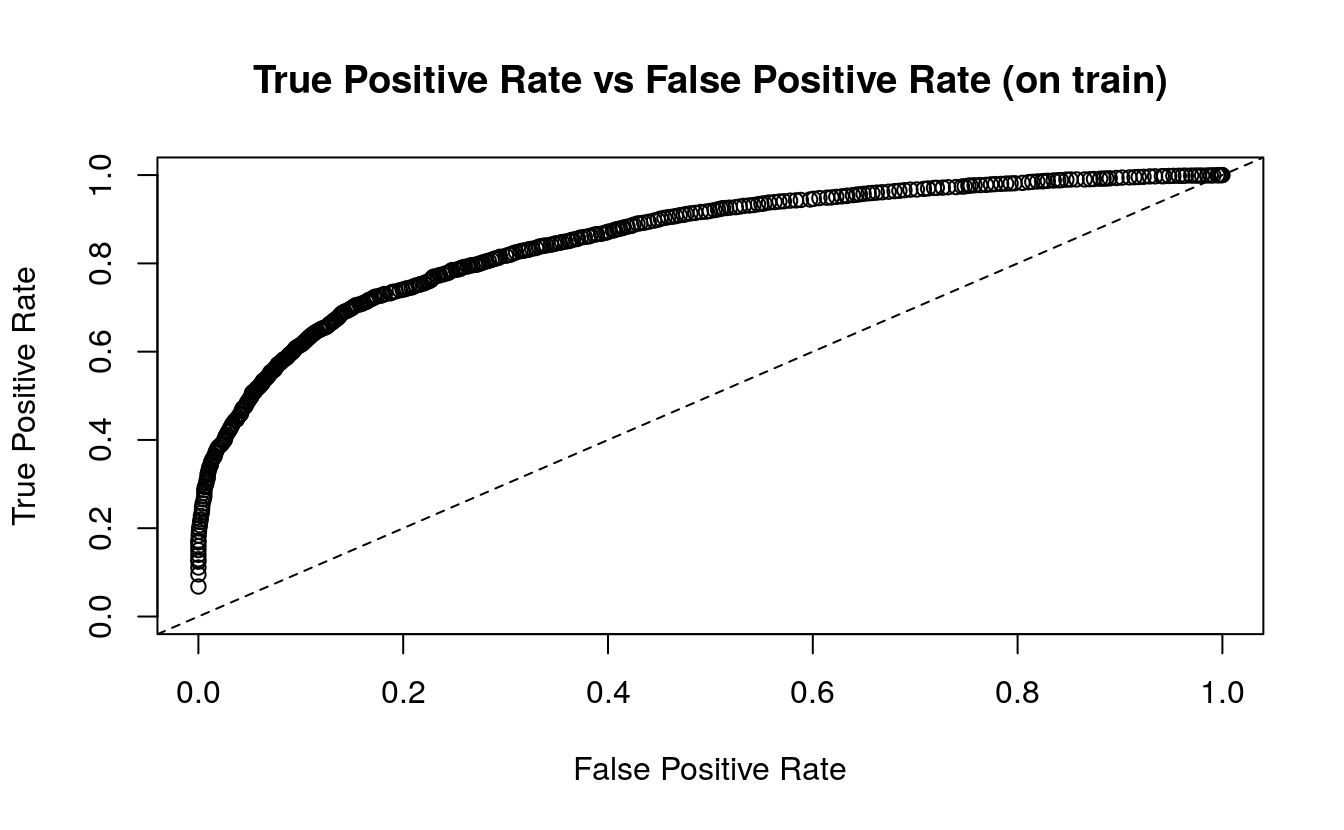

#> 7 7 1.000000To confirm that the reported confusion matrix on the validation set (here, the test set) was correct, we make a prediction on the test set and compare the confusion matrices explicitly:

pred <- h2o.predict(m3, test)

#>

|

| | 0%

|

|======================================================================| 100%

pred

#> predict class_1 class_2 class_3 class_4 class_5 class_6 class_7

#> 1 class_1 7.67e-01 2.32e-01 3.62e-05 2.36e-05 1.01e-04 2.71e-05 4.81e-04

#> 2 class_1 9.99e-01 8.44e-04 2.48e-09 1.06e-08 1.01e-07 1.14e-10 1.74e-08

#> 3 class_1 1.00e+00 1.08e-06 2.41e-11 9.61e-13 3.16e-11 7.83e-09 7.40e-08

#> 4 class_1 9.99e-01 1.27e-03 8.51e-07 5.55e-09 2.91e-10 1.54e-07 2.17e-05

#> 5 class_2 2.58e-02 9.67e-01 2.39e-06 1.80e-07 6.60e-03 4.24e-04 1.70e-05

#> 6 class_2 2.14e-05 5.11e-01 4.43e-05 2.61e-09 4.89e-01 2.17e-07 9.74e-09

#>

#> [115979 rows x 8 columns]

test$Accuracy <- pred$predict == test$Cover_Type

1-mean(test$Accuracy)

#> [1] 0.082846.5.6 Hyper-parameter Tuning with Grid Search

Since there are a lot of parameters that can impact model accuracy, hyper-parameter tuning is especially important for Deep Learning:

For speed, we will only train on the first 10,000 rows of the training dataset:

sampled_train=train[1:10000,]The simplest hyperparameter search method is a brute-force scan of the full Cartesian product of all combinations specified by a grid search:

hyper_params <- list(

hidden=list(c(32,32,32),c(64,64)),

input_dropout_ratio=c(0,0.05),

rate=c(0.01,0.02),

rate_annealing=c(1e-8,1e-7,1e-6)

)

hyper_params

#> $hidden

#> $hidden[[1]]

#> [1] 32 32 32

#>

#> $hidden[[2]]

#> [1] 64 64

#>

#>

#> $input_dropout_ratio

#> [1] 0.00 0.05

#>

#> $rate

#> [1] 0.01 0.02

#>

#> $rate_annealing

#> [1] 1e-08 1e-07 1e-06

grid <- h2o.grid(

algorithm="deeplearning",

grid_id="dl_grid",

training_frame=sampled_train,

validation_frame=valid,

x=predictors,

y=response,

epochs=10,

stopping_metric="misclassification",

stopping_tolerance=1e-2, ## stop when misclassification does not improve by >=1% for 2 scoring events

stopping_rounds=2,

score_validation_samples=10000, ## downsample validation set for faster scoring

score_duty_cycle=0.025, ## don't score more than 2.5% of the wall time

adaptive_rate=F, ## manually tuned learning rate

momentum_start=0.5, ## manually tuned momentum

momentum_stable=0.9,

momentum_ramp=1e7,

l1=1e-5,

l2=1e-5,

activation=c("Rectifier"),

max_w2=10, ## can help improve stability for Rectifier

hyper_params=hyper_params

)

#>

|

| | 0%

|

|======================================================================| 100%

grid

#> H2O Grid Details

#> ================

#>

#> Grid ID: dl_grid

#> Used hyper parameters:

#> - hidden

#> - input_dropout_ratio

#> - rate

#> - rate_annealing

#> Number of models: 24

#> Number of failed models: 0

#>

#> Hyper-Parameter Search Summary: ordered by increasing logloss

#> hidden input_dropout_ratio rate rate_annealing model_ids

#> 1 [64, 64] 0.0 0.01 1.0E-7 dl_grid_model_10

#> 2 [64, 64] 0.0 0.01 1.0E-8 dl_grid_model_2

#> 3 [64, 64] 0.0 0.01 1.0E-6 dl_grid_model_18

#> 4 [64, 64] 0.0 0.02 1.0E-8 dl_grid_model_6

#> 5 [64, 64] 0.05 0.02 1.0E-7 dl_grid_model_16

#> logloss

#> 1 0.5546777038299052

#> 2 0.561723243566788

#> 3 0.5703110009036815

#> 4 0.5766211363933275

#> 5 0.5766301225510833

#>

#> ---

#> hidden input_dropout_ratio rate rate_annealing model_ids

#> 19 [32, 32, 32] 0.05 0.02 1.0E-8 dl_grid_model_7

#> 20 [32, 32, 32] 0.05 0.02 1.0E-6 dl_grid_model_23

#> 21 [64, 64] 0.0 0.02 1.0E-7 dl_grid_model_14

#> 22 [64, 64] 0.05 0.02 1.0E-8 dl_grid_model_8

#> 23 [32, 32, 32] 0.0 0.02 1.0E-8 dl_grid_model_5

#> 24 [32, 32, 32] 0.0 0.01 1.0E-6 dl_grid_model_17

#> logloss

#> 19 0.6212765978773134

#> 20 0.6375004485315976

#> 21 0.6391900652964455

#> 22 0.6442429274125692

#> 23 0.6459711748312551

#> 24 0.6812551946441254Let’s see which model had the lowest validation error:

grid <- h2o.getGrid("dl_grid",sort_by="err",decreasing=FALSE)

grid

#> H2O Grid Details

#> ================

#>

#> Grid ID: dl_grid

#> Used hyper parameters:

#> - hidden

#> - input_dropout_ratio

#> - rate

#> - rate_annealing

#> Number of models: 24

#> Number of failed models: 0

#>

#> Hyper-Parameter Search Summary: ordered by increasing err

#> hidden input_dropout_ratio rate rate_annealing model_ids

#> 1 [64, 64] 0.0 0.01 1.0E-7 dl_grid_model_10

#> 2 [64, 64] 0.0 0.01 1.0E-6 dl_grid_model_18

#> 3 [64, 64] 0.0 0.02 1.0E-8 dl_grid_model_6

#> 4 [64, 64] 0.0 0.01 1.0E-8 dl_grid_model_2

#> 5 [64, 64] 0.0 0.02 1.0E-6 dl_grid_model_22

#> err

#> 1 0.24202739178246527

#> 2 0.2456545765095951

#> 3 0.2458638323473378

#> 4 0.24802717011287584

#> 5 0.24890612569610182

#>

#> ---

#> hidden input_dropout_ratio rate rate_annealing model_ids

#> 19 [64, 64] 0.05 0.02 1.0E-7 dl_grid_model_16

#> 20 [64, 64] 0.05 0.01 1.0E-6 dl_grid_model_20

#> 21 [32, 32, 32] 0.05 0.02 1.0E-6 dl_grid_model_23

#> 22 [32, 32, 32] 0.0 0.02 1.0E-8 dl_grid_model_5

#> 23 [64, 64] 0.05 0.02 1.0E-8 dl_grid_model_8

#> 24 [32, 32, 32] 0.0 0.01 1.0E-6 dl_grid_model_17

#> err

#> 19 0.2705517172324021

#> 20 0.27265469061876246

#> 21 0.27676707426185504

#> 22 0.277966440271674

#> 23 0.2797343529885289

#> 24 0.28279912402946444

## To see what other "sort_by" criteria are allowed

#grid <- h2o.getGrid("dl_grid",sort_by="wrong_thing",decreasing=FALSE)

## Sort by logloss

h2o.getGrid("dl_grid",sort_by="logloss",decreasing=FALSE)

#> H2O Grid Details

#> ================

#>

#> Grid ID: dl_grid

#> Used hyper parameters:

#> - hidden

#> - input_dropout_ratio

#> - rate

#> - rate_annealing

#> Number of models: 24

#> Number of failed models: 0

#>

#> Hyper-Parameter Search Summary: ordered by increasing logloss

#> hidden input_dropout_ratio rate rate_annealing model_ids

#> 1 [64, 64] 0.0 0.01 1.0E-7 dl_grid_model_10

#> 2 [64, 64] 0.0 0.01 1.0E-8 dl_grid_model_2

#> 3 [64, 64] 0.0 0.01 1.0E-6 dl_grid_model_18

#> 4 [64, 64] 0.0 0.02 1.0E-8 dl_grid_model_6

#> 5 [64, 64] 0.05 0.02 1.0E-7 dl_grid_model_16

#> logloss

#> 1 0.5546777038299052

#> 2 0.561723243566788

#> 3 0.5703110009036815

#> 4 0.5766211363933275

#> 5 0.5766301225510833

#>

#> ---

#> hidden input_dropout_ratio rate rate_annealing model_ids

#> 19 [32, 32, 32] 0.05 0.02 1.0E-8 dl_grid_model_7

#> 20 [32, 32, 32] 0.05 0.02 1.0E-6 dl_grid_model_23

#> 21 [64, 64] 0.0 0.02 1.0E-7 dl_grid_model_14

#> 22 [64, 64] 0.05 0.02 1.0E-8 dl_grid_model_8

#> 23 [32, 32, 32] 0.0 0.02 1.0E-8 dl_grid_model_5

#> 24 [32, 32, 32] 0.0 0.01 1.0E-6 dl_grid_model_17

#> logloss

#> 19 0.6212765978773134

#> 20 0.6375004485315976

#> 21 0.6391900652964455

#> 22 0.6442429274125692

#> 23 0.6459711748312551

#> 24 0.6812551946441254

## Find the best model and its full set of parameters

grid@summary_table[1,]

#> Hyper-Parameter Search Summary: ordered by increasing err

#> hidden input_dropout_ratio rate rate_annealing model_ids

#> 1 [64, 64] 0.0 0.01 1.0E-7 dl_grid_model_10

#> err

#> 1 0.24202739178246527

best_model <- h2o.getModel(grid@model_ids[[1]])

best_model

#> Model Details:

#> ==============

#>

#> H2OMultinomialModel: deeplearning

#> Model ID: dl_grid_model_10

#> Status of Neuron Layers: predicting Cover_Type, 7-class classification, multinomial distribution, CrossEntropy loss, 8,263 weights/biases, 72.2 KB, 100,000 training samples, mini-batch size 1

#> layer units type dropout l1 l2 mean_rate rate_rms momentum

#> 1 1 56 Input 0.00 % NA NA NA NA NA

#> 2 2 64 Rectifier 0.00 % 0.000010 0.000010 0.009901 0.000000 0.504000

#> 3 3 64 Rectifier 0.00 % 0.000010 0.000010 0.009901 0.000000 0.504000

#> 4 4 7 Softmax NA 0.000010 0.000010 0.009901 0.000000 0.504000

#> mean_weight weight_rms mean_bias bias_rms

#> 1 NA NA NA NA

#> 2 -0.013262 0.211380 0.184134 0.174737

#> 3 -0.064339 0.188289 0.874184 0.139451

#> 4 -0.007710 0.393877 -0.000827 0.599978

#>

#>

#> H2OMultinomialMetrics: deeplearning

#> ** Reported on training data. **

#> ** Metrics reported on full training frame **

#>

#> Training Set Metrics:

#> =====================

#>

#> Extract training frame with `h2o.getFrame("RTMP_sid_9717_9")`

#> MSE: (Extract with `h2o.mse`) 0.162

#> RMSE: (Extract with `h2o.rmse`) 0.402

#> Logloss: (Extract with `h2o.logloss`) 0.503

#> Mean Per-Class Error: 0.44

#> Confusion Matrix: Extract with `h2o.confusionMatrix(<model>,train = TRUE)`)

#> =========================================================================

#> Confusion Matrix: Row labels: Actual class; Column labels: Predicted class

#> class_1 class_2 class_3 class_4 class_5 class_6 class_7 Error

#> class_1 2718 879 2 0 0 0 89 0.2630

#> class_2 630 4108 75 0 0 17 5 0.1504

#> class_3 1 38 584 1 0 6 0 0.0730

#> class_4 0 0 34 10 0 0 0 0.7727

#> class_5 15 113 6 0 22 0 0 0.8590

#> class_6 0 61 179 0 0 69 0 0.7767

#> class_7 61 1 0 0 0 0 276 0.1834

#> Totals 3425 5200 880 11 22 92 370 0.2213

#> Rate

#> class_1 = 970 / 3,688

#> class_2 = 727 / 4,835

#> class_3 = 46 / 630

#> class_4 = 34 / 44

#> class_5 = 134 / 156

#> class_6 = 240 / 309

#> class_7 = 62 / 338

#> Totals = 2,213 / 10,000

#>

#> Hit Ratio Table: Extract with `h2o.hit_ratio_table(<model>,train = TRUE)`

#> =======================================================================

#> Top-7 Hit Ratios:

#> k hit_ratio

#> 1 1 0.778700

#> 2 2 0.981500

#> 3 3 0.997000

#> 4 4 0.999500

#> 5 5 0.999900

#> 6 6 1.000000

#> 7 7 1.000000

#>

#>

#> H2OMultinomialMetrics: deeplearning

#> ** Reported on validation data. **

#> ** Metrics reported on temporary validation frame with 10003 samples **

#>

#> Validation Set Metrics:

#> =====================

#>

#> MSE: (Extract with `h2o.mse`) 0.177

#> RMSE: (Extract with `h2o.rmse`) 0.421

#> Logloss: (Extract with `h2o.logloss`) 0.555

#> Mean Per-Class Error: 0.479

#> Confusion Matrix: Extract with `h2o.confusionMatrix(<model>,valid = TRUE)`)

#> =========================================================================

#> Confusion Matrix: Row labels: Actual class; Column labels: Predicted class

#> class_1 class_2 class_3 class_4 class_5 class_6 class_7 Error

#> class_1 2569 988 0 0 0 1 85 0.2948

#> class_2 692 4113 54 0 0 22 4 0.1580

#> class_3 0 46 540 1 0 9 0 0.0940

#> class_4 0 2 36 8 0 0 0 0.8261

#> class_5 9 147 0 0 16 1 0 0.9075

#> class_6 0 56 178 0 0 45 0 0.8387

#> class_7 89 1 0 0 0 0 291 0.2362

#> Totals 3359 5353 808 9 16 78 380 0.2420

#> Rate

#> class_1 = 1,074 / 3,643

#> class_2 = 772 / 4,885

#> class_3 = 56 / 596

#> class_4 = 38 / 46

#> class_5 = 157 / 173

#> class_6 = 234 / 279

#> class_7 = 90 / 381

#> Totals = 2,421 / 10,003

#>

#> Hit Ratio Table: Extract with `h2o.hit_ratio_table(<model>,valid = TRUE)`

#> =======================================================================

#> Top-7 Hit Ratios:

#> k hit_ratio

#> 1 1 0.757973

#> 2 2 0.974708

#> 3 3 0.997001

#> 4 4 0.999200

#> 5 5 0.999900

#> 6 6 1.000000

#> 7 7 1.000000

print(best_model@allparameters)

#> $model_id

#> [1] "dl_grid_model_10"

#>

#> $training_frame

#> [1] "RTMP_sid_9717_9"

#>

#> $validation_frame

#> [1] "valid.hex"

#>

#> $nfolds

#> [1] 0

#>

#> $keep_cross_validation_models

#> [1] TRUE

#>

#> $keep_cross_validation_predictions

#> [1] FALSE

#>

#> $keep_cross_validation_fold_assignment

#> [1] FALSE

#>

#> $fold_assignment

#> [1] "AUTO"

#>

#> $ignore_const_cols

#> [1] TRUE

#>

#> $score_each_iteration

#> [1] FALSE

#>

#> $balance_classes

#> [1] FALSE

#>

#> $max_after_balance_size

#> [1] 5

#>

#> $max_confusion_matrix_size

#> [1] 20

#>

#> $max_hit_ratio_k

#> [1] 0

#>

#> $overwrite_with_best_model

#> [1] TRUE

#>

#> $use_all_factor_levels

#> [1] TRUE

#>

#> $standardize

#> [1] TRUE

#>

#> $activation

#> [1] "Rectifier"

#>

#> $hidden

#> [1] 64 64

#>

#> $epochs

#> [1] 10

#>

#> $train_samples_per_iteration

#> [1] -2

#>

#> $target_ratio_comm_to_comp

#> [1] 0.05

#>

#> $seed

#> [1] "1840239639861217278"

#>

#> $adaptive_rate

#> [1] FALSE

#>

#> $rho

#> [1] 0.99

#>

#> $epsilon

#> [1] 1e-08

#>

#> $rate

#> [1] 0.01

#>

#> $rate_annealing

#> [1] 1e-07

#>

#> $rate_decay

#> [1] 1

#>

#> $momentum_start

#> [1] 0.5

#>

#> $momentum_ramp

#> [1] 1e+07

#>

#> $momentum_stable

#> [1] 0.9

#>

#> $nesterov_accelerated_gradient

#> [1] TRUE

#>

#> $input_dropout_ratio

#> [1] 0

#>

#> $l1

#> [1] 1e-05

#>

#> $l2

#> [1] 1e-05

#>

#> $max_w2

#> [1] 10

#>

#> $initial_weight_distribution

#> [1] "UniformAdaptive"

#>

#> $initial_weight_scale

#> [1] 1

#>

#> $loss

#> [1] "Automatic"

#>

#> $distribution

#> [1] "AUTO"

#>

#> $quantile_alpha

#> [1] 0.5

#>

#> $tweedie_power

#> [1] 1.5

#>

#> $huber_alpha

#> [1] 0.9

#>

#> $score_interval

#> [1] 5

#>

#> $score_training_samples

#> [1] 10000

#>

#> $score_validation_samples

#> [1] 10000

#>

#> $score_duty_cycle

#> [1] 0.025

#>

#> $classification_stop

#> [1] 0

#>

#> $regression_stop

#> [1] 1e-06

#>

#> $stopping_rounds

#> [1] 2

#>

#> $stopping_metric

#> [1] "misclassification"

#>

#> $stopping_tolerance

#> [1] 0.01

#>

#> $max_runtime_secs

#> [1] 1.8e+308

#>

#> $score_validation_sampling

#> [1] "Uniform"

#>

#> $diagnostics

#> [1] TRUE

#>

#> $fast_mode

#> [1] TRUE

#>

#> $force_load_balance

#> [1] TRUE

#>

#> $variable_importances

#> [1] TRUE

#>

#> $replicate_training_data

#> [1] TRUE

#>

#> $single_node_mode

#> [1] FALSE

#>

#> $shuffle_training_data

#> [1] FALSE

#>

#> $missing_values_handling

#> [1] "MeanImputation"

#>

#> $quiet_mode

#> [1] FALSE

#>

#> $autoencoder

#> [1] FALSE

#>

#> $sparse

#> [1] FALSE

#>

#> $col_major

#> [1] FALSE

#>

#> $average_activation

#> [1] 0

#>

#> $sparsity_beta

#> [1] 0

#>

#> $max_categorical_features

#> [1] 2147483647

#>

#> $reproducible

#> [1] FALSE

#>

#> $export_weights_and_biases

#> [1] FALSE

#>

#> $mini_batch_size

#> [1] 1

#>

#> $categorical_encoding

#> [1] "AUTO"

#>

#> $elastic_averaging

#> [1] FALSE

#>

#> $elastic_averaging_moving_rate

#> [1] 0.9

#>

#> $elastic_averaging_regularization

#> [1] 0.001

#>

#> $x

#> [1] "Soil_Type" "Wilderness_Area"

#> [3] "Elevation" "Aspect"

#> [5] "Slope" "Horizontal_Distance_To_Hydrology"

#> [7] "Vertical_Distance_To_Hydrology" "Horizontal_Distance_To_Roadways"

#> [9] "Hillshade_9am" "Hillshade_Noon"

#> [11] "Hillshade_3pm" "Horizontal_Distance_To_Fire_Points"

#>

#> $y

#> [1] "Cover_Type"

print(h2o.performance(best_model, valid=T))

#> H2OMultinomialMetrics: deeplearning

#> ** Reported on validation data. **

#> ** Metrics reported on temporary validation frame with 10003 samples **

#>

#> Validation Set Metrics:

#> =====================

#>

#> MSE: (Extract with `h2o.mse`) 0.177

#> RMSE: (Extract with `h2o.rmse`) 0.421

#> Logloss: (Extract with `h2o.logloss`) 0.555

#> Mean Per-Class Error: 0.479

#> Confusion Matrix: Extract with `h2o.confusionMatrix(<model>,valid = TRUE)`)

#> =========================================================================

#> Confusion Matrix: Row labels: Actual class; Column labels: Predicted class

#> class_1 class_2 class_3 class_4 class_5 class_6 class_7 Error

#> class_1 2569 988 0 0 0 1 85 0.2948

#> class_2 692 4113 54 0 0 22 4 0.1580

#> class_3 0 46 540 1 0 9 0 0.0940

#> class_4 0 2 36 8 0 0 0 0.8261

#> class_5 9 147 0 0 16 1 0 0.9075

#> class_6 0 56 178 0 0 45 0 0.8387

#> class_7 89 1 0 0 0 0 291 0.2362

#> Totals 3359 5353 808 9 16 78 380 0.2420

#> Rate

#> class_1 = 1,074 / 3,643

#> class_2 = 772 / 4,885

#> class_3 = 56 / 596

#> class_4 = 38 / 46

#> class_5 = 157 / 173

#> class_6 = 234 / 279

#> class_7 = 90 / 381

#> Totals = 2,421 / 10,003

#>

#> Hit Ratio Table: Extract with `h2o.hit_ratio_table(<model>,valid = TRUE)`

#> =======================================================================

#> Top-7 Hit Ratios:

#> k hit_ratio

#> 1 1 0.757973

#> 2 2 0.974708

#> 3 3 0.997001

#> 4 4 0.999200

#> 5 5 0.999900

#> 6 6 1.000000

#> 7 7 1.000000

print(h2o.logloss(best_model, valid=T))

#> [1] 0.55546.5.7 Random Hyper-Parameter Search

Often, hyper-parameter search for more than 4 parameters can be done more efficiently with random parameter search than with grid search. Basically, chances are good to find one of many good models in less time than performing an exhaustive grid search. We simply build up to max_models models with parameters drawn randomly from user-specified distributions (here, uniform). For this example, we use the adaptive learning rate and focus on tuning the network architecture and the regularization parameters. We also let the grid search stop automatically once the performance at the top of the leaderboard doesn’t change much anymore, i.e., once the search has converged.

hyper_params <- list(

activation=c("Rectifier","Tanh","Maxout","RectifierWithDropout","TanhWithDropout","MaxoutWithDropout"),

hidden=list(c(20,20),c(50,50),c(30,30,30),c(25,25,25,25)),

input_dropout_ratio=c(0,0.05),

l1=seq(0,1e-4,1e-6),

l2=seq(0,1e-4,1e-6)

)

hyper_params

## Stop once the top 5 models are within 1% of each other (i.e., the windowed average varies less than 1%)

search_criteria = list(strategy = "RandomDiscrete", max_runtime_secs = 360, max_models = 100, seed=1234567, stopping_rounds=5, stopping_tolerance=1e-2)

dl_random_grid <- h2o.grid(

algorithm="deeplearning",

grid_id = "dl_grid_random",

training_frame=sampled_train,

validation_frame=valid,

x=predictors,

y=response,

epochs=1,

stopping_metric="logloss",

stopping_tolerance=1e-2, ## stop when logloss does not improve by >=1% for 2 scoring events

stopping_rounds=2,

score_validation_samples=10000, ## downsample validation set for faster scoring

score_duty_cycle=0.025, ## don't score more than 2.5% of the wall time

max_w2=10, ## can help improve stability for Rectifier

hyper_params = hyper_params,

search_criteria = search_criteria

)

grid <- h2o.getGrid("dl_grid_random",sort_by="logloss",decreasing=FALSE)

grid

grid@summary_table[1,]

best_model <- h2o.getModel(grid@model_ids[[1]]) ## model with lowest logloss