mnist_fashion_inference

mnist_fashion_inference.Rmd

Source: https://www.kaggle.com/ysachit/inference-and-validation-ipynb

Original title: Inference and Validation

# Assumption: the rTorch package (which exposes `torch`, `torchvision` and `np`) and reticulate are installed

library(rTorch)

library(reticulate)

nn <- torch$nn

transforms <- torchvision$transforms

datasets <- torchvision$datasets

builtins <- import_builtins()

torch$manual_seed(123)

#> <torch._C.Generator>

Load datasets

As usual, let’s start by loading the dataset through torchvision.

local_folder <- "../datasets/mnist_fashion"

# Define a transform to normalize the data

transform = transforms$Compose(list(transforms$ToTensor(),

transforms$Normalize(list(0.5), list(0.5) )))

# Download and load the training data

trainset = datasets$FashionMNIST(local_folder, download=TRUE, train=TRUE,

transform=transform)

train_loader = torch$utils$data$DataLoader(trainset, batch_size=64L, shuffle=TRUE)

# Download and load the test data

testset = datasets$FashionMNIST(local_folder, download=TRUE, train=FALSE,

transform=transform)

test_loader = torch$utils$data$DataLoader(testset, batch_size=64L, shuffle=TRUE)

py_len(trainset)

#> [1] 60000

Model

optim <- torch$optim

F <- torch$nn$functional

main <- py_run_string("

from torch import nn, optim

import torch.nn.functional as F

class Classifier(nn.Module):

    def __init__(self):

        super().__init__()

        self.fc1 = nn.Linear(784, 256)

        self.fc2 = nn.Linear(256, 128)

        self.fc3 = nn.Linear(128, 64)

        self.fc4 = nn.Linear(64, 10)

    def forward(self, x):

        # make sure input tensor is flattened

        x = x.view(x.shape[0], -1)

        x = F.relu(self.fc1(x))

        x = F.relu(self.fc2(x))

        x = F.relu(self.fc3(x))

        x = F.log_softmax(self.fc4(x), dim=1)

        return x

")

model = main$Classifier()

model

#> Classifier(

#> (fc1): Linear(in_features=784, out_features=256, bias=True)

#> (fc2): Linear(in_features=256, out_features=128, bias=True)

#> (fc3): Linear(in_features=128, out_features=64, bias=True)

#> (fc4): Linear(in_features=64, out_features=10, bias=True)

#> )

Introspection

random <- import("random")

# number of elements in test

py_len(test_loader) # len: 157

#> [1] 157

# get random object from the test_loader

i_rand = random$randrange(1L, py_len(test_loader) + 1L) # random 1-based batch index (integers, so it can index the R list below)

i_rand

#> [1] 71

enum_test_examples = builtins$enumerate(test_loader)

enum_test_examples

#> <enumerate>

# <enumerate object at 0x7f793ce486c0>

iter_test_examples <- iterate(enum_test_examples)

# iter_test_examples[[3]]

iter_test_examples[[i_rand]][[1]] # index number

#> [1] 70

iter_test_examples[[i_rand]][[2]][[1]]$size() # image tensor .Size([64,1,28,28])

#> torch.Size([64, 1, 28, 28])

iter_test_examples[[i_rand]][[2]][[2]]$shape # labels torch.Size([64])

#> torch.Size([64])

idx <- iter_test_examples[[i_rand]][[1]]

images <- iter_test_examples[[i_rand]][[2]][[1]]

labels <- iter_test_examples[[i_rand]][[2]][[2]]

# Get the class probabilities

ps = torch$exp(model(images))

# Make sure the shape is appropriate: we should get 10 class probabilities for each of the 64 examples

print(ps$shape)

#> torch.Size([64, 10])
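
Taking torch$exp of the model output yields probabilities because the forward pass ends in log_softmax. As a rough illustration, here is a plain-Python sketch of that relationship (not PyTorch's implementation; the function and the example logits are made up for this note):

```python
import math

def log_softmax(logits):
    """Log of the softmax of a list of scores, computed in a numerically stable way."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum for z in logits]

logits = [2.0, 1.0, 0.1, -1.0]        # hypothetical raw scores for four classes
log_ps = log_softmax(logits)          # what the network's last layer would return
ps = [math.exp(v) for v in log_ps]    # exponentiating recovers the probabilities

print(round(sum(ps), 6))  # 1.0
```

The exponentiated values are non-negative and sum to one, and the largest logit keeps the largest probability.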

Most likely class

With the probabilities, we can get the most likely class using the ps.topk method. This returns the k highest values. Since we just want the most likely class, we can use ps.topk(1). This returns a tuple of the top-k values and the top-k indices. If the highest value is the fifth element, we’ll get back 4 as the index.
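
To make the return value concrete, here is a minimal plain-Python sketch of what topk does for a single row. It is an illustration only, not PyTorch's implementation; the helper name topk and the probabilities are made up.

```python
def topk(row, k):
    """Return (values, indices) of the k largest entries of row, largest first."""
    order = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
    return [row[i] for i in order], order

# Ten made-up class probabilities; the fifth entry is the largest.
probs = [0.05, 0.05, 0.10, 0.05, 0.40, 0.05, 0.10, 0.05, 0.10, 0.05]

values, indices = topk(probs, 1)
print(values, indices)  # [0.4] [4]
```

The index is zero-based, which is why the fifth element comes back as 4.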

top_ = ps$topk(1L, dim=1L)

top_p <- top_[0]

top_class <- top_[1]

top_p$shape

#> torch.Size([64, 1])

top_class$shape

#> torch.Size([64, 1])

# Look at the most likely classes for the first 10 examples

print(top_class[1:10,])

#> tensor([[4],

#> [4],

#> [9],

#> [9],

#> [4],

#> [9],

#> [9],

#> [5],

#> [4],

#> [9]])

Compare predicted vs true labels

Now we can check whether the predicted classes match the labels. This is simple to do by comparing top_class and labels, but we have to be careful with the shapes. Here top_class is a 2D tensor with shape (64, 1) while labels is 1D with shape (64). To get the equality to work out the way we want, top_class and labels must have the same shape.

If we compared them directly with top_class == labels, equals would have shape (64, 64); try it yourself. Broadcasting compares the one element in each row of top_class with every element in labels, which returns 64 True/False values for each row. Reshaping labels to the shape of top_class gives the element-by-element comparison we want.
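
The shape issue can be mimicked with nested lists. The following plain-Python sketch (helper names and the three-example data are hypothetical, and no tensor library is involved) contrasts the broadcast comparison with the element-by-element one:

```python
def compare_broadcast(top_class, labels):
    """Mimic (N, 1) == (N,): every predicted class is compared with every label."""
    return [[row[0] == label for label in labels] for row in top_class]

def compare_elementwise(top_class, labels):
    """Mimic (N, 1) == labels reshaped to (N, 1): row i is compared only with label i."""
    return [[row[0] == label] for row, label in zip(top_class, labels)]

top_class = [[4], [9], [5]]   # predicted classes, shape (3, 1)
labels = [4, 2, 5]            # true classes, shape (3,)

wide = compare_broadcast(top_class, labels)
tall = compare_elementwise(top_class, labels)

print(len(wide), len(wide[0]))  # 3 3
print(tall)                     # [[True], [False], [True]]
```

Only the second result has one entry per example, which is what an accuracy calculation needs.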

equals = top_class == labels$view(top_class$shape)

equals

#> tensor([[False],

#> [False],

#> [False],

#> [ True],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [ True],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [ True],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [ True],

#> [False],

#> [False],

#> [False],

#> [ True],

#> [ True],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False],

#> [False]], dtype=torch.bool)

Untrained model

Now we need to calculate the percentage of correct predictions. equals holds boolean values, which count as 0 or 1. If we sum all the values and divide by the number of values, we get the fraction of correct predictions; multiplying by 100 gives the percentage.

This is the same as taking the mean, but torch.mean is not implemented for boolean tensors, so we first need to convert equals to a float tensor. Note that torch.mean returns a scalar tensor; to get the actual value as a float we need to call accuracy.item().

accuracy = torch$mean(equals$type(torch$FloatTensor))

cat(sprintf("Accuracy: %f %%", accuracy$item()*100))

#> Accuracy: 9.375000 %

The network is untrained, so it is making random guesses and we should see an accuracy of around 10%.
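
As a check on the arithmetic, the equals tensor printed above contains 6 True values among its 64 entries. A small plain-Python sketch of the same mean-of-booleans calculation (lists stand in for tensors here):

```python
n_correct, batch_size = 6, 64

# Rebuild a boolean vector with the same counts as the printed tensor.
equals = [True] * n_correct + [False] * (batch_size - n_correct)

# Convert to floats, then take the mean: this is the fraction of correct predictions.
as_float = [float(e) for e in equals]
accuracy = sum(as_float) / len(as_float)

print(f"Accuracy: {accuracy * 100:f} %")  # Accuracy: 9.375000 %
```

Six correct out of 64 is 9.375 %, matching the value reported for this batch.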

Train the model

Now let’s train our network and include our validation pass so we can measure how well the network is performing on the test set. Since we’re not updating our parameters in the validation pass, we can speed up our code by turning off gradients using torch.no_grad():

model <- main$Classifier()

criterion <- nn$NLLLoss()

optimizer <- optim$Adam(model$parameters(), lr = 0.003)

epochs <- 5

steps <- 0

train_losses <- vector("list"); test_losses <- vector("list")

for (e in 1:epochs) {

  running_loss <- 0

  iter_train_dataset <- builtins$enumerate(train_loader) # reset iterator

  for (train_obj in iterate(iter_train_dataset)) {

    images <- train_obj[[2]][[1]] # extract images

    labels <- train_obj[[2]][[2]] # extract labels

    optimizer$zero_grad()

    log_ps <- model(images)

    loss <- criterion(log_ps, labels)

    loss$backward()

    optimizer$step()

    running_loss <- running_loss + loss$item()

  }

  test_loss <- 0

  accuracy <- 0

  with(torch$no_grad(), {

    iter_test_dataset <- builtins$enumerate(test_loader) # reset iterator

    for (test_obj in iterate(iter_test_dataset)) {

      images <- test_obj[[2]][[1]] # extract images

      labels <- test_obj[[2]][[2]] # extract labels

      output <- model(images) # log-probabilities

      test_loss <- test_loss + criterion(output, labels)

      ps <- torch$exp(output) # reuse the forward pass instead of calling the model twice

      top_ <- ps$topk(1L, dim=1L)

      top_p <- top_[0]; top_class <- top_[1]

      equals <- top_class == labels$view(top_class$shape)

      accuracy <- accuracy + torch$mean(equals$type(torch$FloatTensor))

    }

  })

  # store the per-epoch averages, indexed by epoch

  train_losses[[e]] <- running_loss / py_len(train_loader)

  test_losses[[e]] <- test_loss$item() / py_len(test_loader)

  cat(sprintf("\n Epoch: %3d Training Loss: %8.3f Test Loss: %8.3f Test Accuracy: %8.3f",

    e,

    running_loss / py_len(train_loader),

    test_loss$item() / py_len(test_loader),

    accuracy$item() / py_len(test_loader)

  ))

}

#>

#> Epoch: 1 Training Loss: 0.519 Test Loss: 0.458 Test Accuracy: 0.829

#> Epoch: 2 Training Loss: 0.393 Test Loss: 0.410 Test Accuracy: 0.852

#> Epoch: 3 Training Loss: 0.355 Test Loss: 0.387 Test Accuracy: 0.854

#> Epoch: 4 Training Loss: 0.333 Test Loss: 0.399 Test Accuracy: 0.857

#> Epoch: 5 Training Loss: 0.320 Test Loss: 0.377 Test Accuracy: 0.868

Overfitting

If we look at the training and validation losses as we train the network, we can see a phenomenon known as overfitting.

The network learns the training set better and better, resulting in lower training losses. However, it starts having problems generalizing to data outside the training set, leading to the validation loss increasing. In the five-epoch run above, the training loss falls every epoch while the test loss already fluctuates (it rises from 0.387 to 0.399 at epoch 4). The ultimate goal of any deep learning model is to make predictions on new data, so we should strive to get the lowest validation loss possible. One option is to use the version of the model with the lowest validation loss; the figure of around 8-10 training epochs refers to a longer run than the five epochs shown here. This strategy is called early stopping. In practice, you'd save the model frequently as you're training, then later choose the model with the lowest validation loss.
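
The selection rule behind early stopping can be sketched in a few lines of plain Python, using the five test losses printed above. The helper early_stop_epoch and its patience parameter are illustrative assumptions, not part of the original notebook:

```python
def early_stop_epoch(losses, patience):
    """Return the 1-based epoch at which training would stop, or None if it never stops.

    Training stops once the loss has failed to improve for `patience` epochs in a row.
    """
    best = float("inf")
    waited = 0
    for epoch, loss in enumerate(losses, start=1):
        if loss < best:
            best, waited = loss, 0
        else:
            waited += 1
            if waited >= patience:
                return epoch
    return None

# Test losses from the five-epoch run above.
test_losses = [0.458, 0.410, 0.387, 0.399, 0.377]

# Checkpoint selection: the epoch with the lowest validation loss.
best_epoch = min(range(len(test_losses)), key=test_losses.__getitem__) + 1

print(best_epoch)                        # 5
print(early_stop_epoch(test_losses, 1))  # 4
print(early_stop_epoch(test_losses, 2))  # None
```

With a patience of 1 the run would have been cut at epoch 4, just before the best epoch, which is why saving checkpoints and choosing afterwards is the more forgiving approach.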

Model with dropout

The most common method to reduce overfitting (outside of early stopping) is dropout, where we randomly drop input units. This forces the network to share information between weights, increasing its ability to generalize to new data. Adding dropout in PyTorch is straightforward using the nn.Dropout module.
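
For intuition, here is a minimal plain-Python sketch of the "inverted" dropout scheme, in which surviving units are rescaled by 1 / (1 - p) during training and nothing is changed at evaluation time. The function below is a hypothetical stand-in written for this note, not the nn.Dropout implementation:

```python
import random

def dropout(values, p, training, rng):
    """Zero each value with probability p and rescale the survivors by 1 / (1 - p)."""
    if not training or p == 0.0:
        return list(values)          # evaluation mode: pass the input through unchanged
    keep_scale = 1.0 / (1.0 - p)
    return [0.0 if rng.random() < p else v * keep_scale for v in values]

rng = random.Random(123)             # fixed seed so the sketch is repeatable
activations = [1.0] * 10

train_out = dropout(activations, p=0.2, training=True, rng=rng)
eval_out = dropout(activations, p=0.2, training=False, rng=rng)

print(train_out)  # each entry is either 0.0 or about 1.25
print(eval_out)   # unchanged: ten values of 1.0
```

The rescaling keeps the expected value of each activation the same in both modes, so the layers that follow see inputs on a comparable scale.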

main <- py_run_string("

from torch import nn, optim

import torch.nn.functional as F

class ClassifierDO(nn.Module):

    def __init__(self):

        super().__init__()

        self.fc1 = nn.Linear(784, 256)

        self.fc2 = nn.Linear(256, 128)

        self.fc3 = nn.Linear(128, 64)

        self.fc4 = nn.Linear(64, 10)

        # Dropout module with 0.2 drop probability

        self.dropout = nn.Dropout(p=0.2)

    def forward(self, x):

        # make sure input tensor is flattened

        x = x.view(x.shape[0], -1)

        # Now with dropout

        x = self.dropout(F.relu(self.fc1(x)))

        x = self.dropout(F.relu(self.fc2(x)))

        x = self.dropout(F.relu(self.fc3(x)))

        # output so no dropout here

        x = F.log_softmax(self.fc4(x), dim=1)

        return x

")

ClassifierDO <- main$ClassifierDO()

ClassifierDO

#> ClassifierDO(

#> (fc1): Linear(in_features=784, out_features=256, bias=True)

#> (fc2): Linear(in_features=256, out_features=128, bias=True)

#> (fc3): Linear(in_features=128, out_features=64, bias=True)

#> (fc4): Linear(in_features=64, out_features=10, bias=True)

#> (dropout): Dropout(p=0.2)

#> )

Train the model with dropout

During training we want to use dropout to prevent overfitting, but during inference we want to use the entire network. So, we need to turn off dropout during validation, testing, and whenever we’re using the network to make predictions. To do this, you use model.eval(). This sets the model to evaluation mode where the dropout probability is 0. You can turn dropout back on by setting the model to train mode with model.train(). In general, the pattern for the validation loop will look like this, where you turn off gradients, set the model to evaluation mode, calculate the validation loss and metric, then set the model back to train mode.
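
One way to make sure the model always returns to its previous mode is to wrap the switch in a context manager. The sketch below is an assumption-laden illustration: the helper name evaluating is made up, and ToyModel is a stand-in that only imitates the training attribute and the train(mode) / eval() methods of a PyTorch module.

```python
from contextlib import contextmanager

@contextmanager
def evaluating(model):
    """Put model in evaluation mode for the duration of the block, then restore its mode."""
    was_training = model.training
    model.eval()
    try:
        yield model
    finally:
        model.train(was_training)

class ToyModel:
    """Stand-in exposing the same mode-switching interface as a PyTorch module."""
    def __init__(self):
        self.training = True

    def train(self, mode=True):
        self.training = mode
        return self

    def eval(self):
        return self.train(False)

model = ToyModel()
with evaluating(model):
    mode_inside = model.training     # dropout would be disabled here

print(mode_inside, model.training)   # False True
```

In a real validation loop this would be combined with the no-gradient context, so that both dropout and gradient tracking are off while the metrics are computed.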

modelDO <- main$ClassifierDO()

criterion <- nn$NLLLoss()

optimizerDO <- optim$Adam(modelDO$parameters(), lr = 0.003)

epochs <- 5

steps <- 0

train_losses <- vector("list"); test_losses <- vector("list")

for (e in 1:epochs) {

  running_loss <- 0

  iter_train_dataset <- builtins$enumerate(train_loader) # reset iterator

  for (train_obj in iterate(iter_train_dataset)) {

    images <- train_obj[[2]][[1]] # extract images

    labels <- train_obj[[2]][[2]] # extract labels

    optimizerDO$zero_grad()

    log_ps <- modelDO(images)

    loss <- criterion(log_ps, labels)

    loss$backward()

    optimizerDO$step()

    running_loss <- running_loss + loss$item()

  }

  test_loss <- 0

  accuracy <- 0

  modelDO$eval() # evaluation mode: dropout is switched off for validation

  with(torch$no_grad(), {

    iter_test_dataset <- builtins$enumerate(test_loader) # reset iterator

    for (test_obj in iterate(iter_test_dataset)) {

      images <- test_obj[[2]][[1]] # extract images

      labels <- test_obj[[2]][[2]] # extract labels

      output <- modelDO(images) # log-probabilities

      test_loss <- test_loss + criterion(output, labels)

      ps <- torch$exp(output) # reuse the forward pass instead of calling the model twice

      top_ <- ps$topk(1L, dim=1L)

      top_p <- top_[0]; top_class <- top_[1]

      equals <- top_class == labels$view(top_class$shape)

      accuracy <- accuracy + torch$mean(equals$type(torch$FloatTensor))

    }

  })

  modelDO$train() # back to training mode: dropout is switched on again

  # store the per-epoch averages, indexed by epoch

  train_losses[[e]] <- running_loss / py_len(train_loader)

  test_losses[[e]] <- test_loss$item() / py_len(test_loader)

  cat(sprintf("\n Epoch: %3d Training Loss: %8.3f Test Loss: %8.3f Test Accuracy: %8.3f",

    e,

    running_loss / py_len(train_loader),

    test_loss$item() / py_len(test_loader),

    accuracy$item() / py_len(test_loader)

  ))

}

#>

#> Epoch: 1 Training Loss: 0.607 Test Loss: 0.564 Test Accuracy: 0.810

#> Epoch: 2 Training Loss: 0.479 Test Loss: 0.497 Test Accuracy: 0.827

#> Epoch: 3 Training Loss: 0.449 Test Loss: 0.502 Test Accuracy: 0.830

#> Epoch: 4 Training Loss: 0.437 Test Loss: 0.462 Test Accuracy: 0.839

#> Epoch: 5 Training Loss: 0.419 Test Loss: 0.450 Test Accuracy: 0.843

Inference on dropout model

Now that the model is trained, we can use it for inference. We’ve done this before, but now we need to remember to set the model in inference mode with model.eval(). You’ll also want to turn off autograd with the torch.no_grad() context.

# Test out your network!

# Switch to evaluation mode

modelDO$eval()

#> ClassifierDO(

#> (fc1): Linear(in_features=784, out_features=256, bias=True)

#> (fc2): Linear(in_features=256, out_features=128, bias=True)

#> (fc3): Linear(in_features=128, out_features=64, bias=True)

#> (fc4): Linear(in_features=64, out_features=10, bias=True)

#> (dropout): Dropout(p=0.2)

#> )

# load test dataset and get one image

# using an iterator

dataiter = builtins$iter(test_loader) # len(test_loader): 157

data_obj = iter_next(dataiter)

images <- data_obj[[1]] # images.shape: torch.Size([64, 1, 28, 28])

labels <- data_obj[[2]] # labels.shape: torch.Size([64])

images$shape

#> torch.Size([64, 1, 28, 28])

labels$shape

#> torch.Size([64])

# take first image

img = images[1L,,,] # shape: torch.Size([1, 28, 28])

# Convert 2D image to 1D vector

img_pick = img$view(1L, 784L) # shape: torch.Size([1, 784])

img_pick$shape

#> torch.Size([1, 784])

# Calculate the class probabilities (softmax) for img

with(torch$no_grad(), {

output = modelDO$forward(img_pick)

})

ps = torch$exp(output)

ps$shape

#> torch.Size([1, 10])

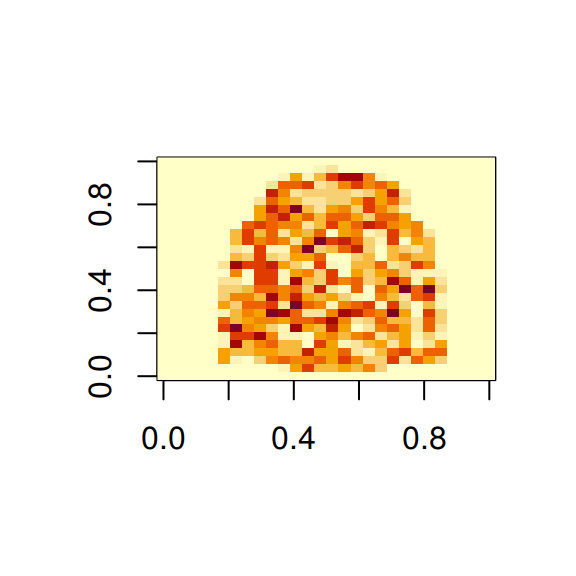

# Plot the image and probabilities

# view_classify(img_pick.view(1, 28, 28), ps, version='Fashion')

# show image

rotate <- function(x) t(apply(x, 2, rev)) # function to rotate the matrix

img_np_rs = np$reshape(img_pick$numpy(), c(28L, 28L))

image(rotate(img_np_rs))