Chapter 11 Monsters and Mixtures

[Of these majestic creatures], we’ll consider two common and useful examples. The first type is the ordered categorical model, useful for categorical outcomes with a fixed ordering. This model is built by merging a categorical likelihood function with a special kind of link function, usually a cumulative link. The second type is a family of zero-inflated and zero-augmented models, each of which mixes a binary event within an ordinary GLM likelihood like a Poisson or binomial.

Both types of models help us transform our modeling to cope with the inconvenient realities of measurement, rather than transforming measurements to cope with the constraints of our models. (p. 331)

11.1 Ordered categorical outcomes

It is very common in the social sciences, and occasional in the natural sciences, to have an outcome variable that is discrete, like a count, but in which the values merely indicate different ordered levels along some dimension. For example, if I were to ask you how much you like to eat fish, on a scale from 1 to 7, you might say 5. If I were to ask 100 people the same question, I’d end up with 100 values between 1 and 7. In modeling each outcome value, I’d have to keep in mind that these values are ordered because 7 is greater than 6, which is greater than 5, and so on. But unlike a count, the differences in values are not necessarily equal.

In principle, an ordered categorical variable is just a multinomial prediction problem (page 323). But the constraint that the categories be ordered demands special treatment…

The conventional solution is to use a cumulative link function. The cumulative probability of a value is the probability of that value or any smaller value. (pp. 331–332, emphasis in the original)
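To make that definition concrete, here is a minimal base R sketch. The counts are made up for illustration (they are not the Trolley data), and `counts`, `pr_k`, `cum_pr_k`, and `log_cum_odds` are names chosen for this example.

```r
# hypothetical counts for a 7-point rating scale (not from the Trolley data)
counts <- c(10, 15, 20, 30, 12, 8, 5)

# probability of each response value
pr_k <- counts / sum(counts)

# cumulative probability: Pr(response <= k)
cum_pr_k <- cumsum(pr_k)

# log-cumulative-odds; the largest value is dropped because its
# cumulative probability is 1, which has infinite log-odds
log_cum_odds <- qlogis(cum_pr_k[1:6])

round(cum_pr_k, 2)
round(log_cum_odds, 2)
```

Because the cumulative probabilities can only go up, the log-cumulative-odds are ordered too, which is the property the cumulative link exploits.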

11.1.1 Example: Moral intuition.

Let’s get the Trolley data from rethinking.

library(rethinking)

data(Trolley)

d <- Trolley

Unload rethinking and load brms.

rm(Trolley)

detach(package:rethinking, unload = T)

library(brms)

Use the tidyverse to get a sense of the dimensions of the data.

library(tidyverse)

glimpse(d)

## Observations: 9,930

## Variables: 12

## $ case <fct> cfaqu, cfbur, cfrub, cibox, cibur, cispe, fkaqu, fkboa, fkbox, fkbur, fkcar, fkspe, fkswi…

## $ response <int> 4, 3, 4, 3, 3, 3, 5, 4, 4, 4, 4, 4, 4, 5, 4, 4, 4, 4, 4, 3, 3, 3, 4, 4, 5, 4, 4, 3, 4, 4,…

## $ order <int> 2, 31, 16, 32, 4, 9, 29, 12, 23, 22, 27, 19, 14, 3, 18, 15, 30, 5, 1, 13, 20, 17, 28, 10,…

## $ id <fct> 96;434, 96;434, 96;434, 96;434, 96;434, 96;434, 96;434, 96;434, 96;434, 96;434, 96;434, 9…

## $ age <int> 14, 14, 14, 14, 14, 14, 14, 14, 14, 14, 14, 14, 14, 14, 14, 14, 14, 14, 14, 14, 14, 14, 1…

## $ male <int> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

## $ edu <fct> Middle School, Middle School, Middle School, Middle School, Middle School, Middle School,…

## $ action <int> 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 1,…

## $ intention <int> 0, 0, 0, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0,…

## $ contact <int> 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

## $ story <fct> aqu, bur, rub, box, bur, spe, aqu, boa, box, bur, car, spe, swi, boa, car, che, sha, swi,…

## $ action2 <int> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 1,…

Though we have 9,930 rows, we only have 331 unique individuals.

d %>%

distinct(id) %>%

count()

## # A tibble: 1 x 1

## n

## <int>

## 1 331

11.1.2 Describing an ordered distribution with intercepts.

Before we get to plotting, in this chapter we’ll use theme settings and a color palette from the ggthemes package.

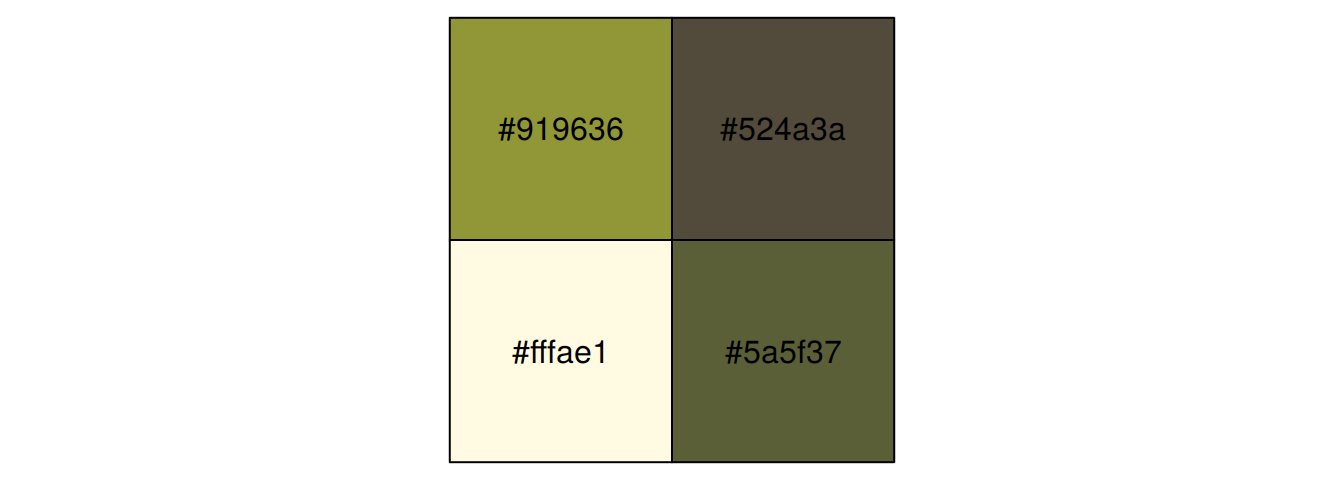

library(ggthemes)

We’ll take our basic theme settings from the theme_hc() function. We’ll use the Green fields color palette, which we can inspect with the canva_pal() function and a little help from scales::show_col().

scales::show_col(canva_pal("Green fields")(4))

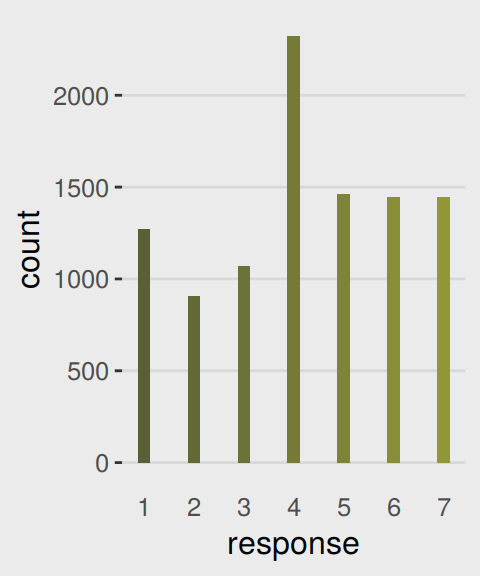

canva_pal("Green fields")(4)

## [1] "#919636" "#524a3a" "#fffae1" "#5a5f37"

canva_pal("Green fields")(4)[3]

## [1] "#fffae1"

Now we’re ready to make our ggplot2 version of the simple histogram, Figure 11.1.a.

ggplot(data = d, aes(x = response, fill = ..x..)) +

geom_histogram(binwidth = 1/4, size = 0) +

scale_x_continuous(breaks = 1:7) +

scale_fill_gradient(low = canva_pal("Green fields")(4)[4],

high = canva_pal("Green fields")(4)[1]) +

theme_hc() +

theme(axis.ticks.x = element_blank(),

plot.background = element_rect(fill = "grey92"),

legend.position = "none")

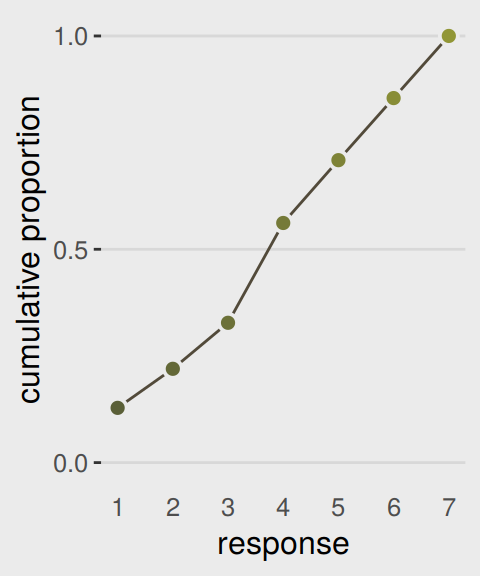

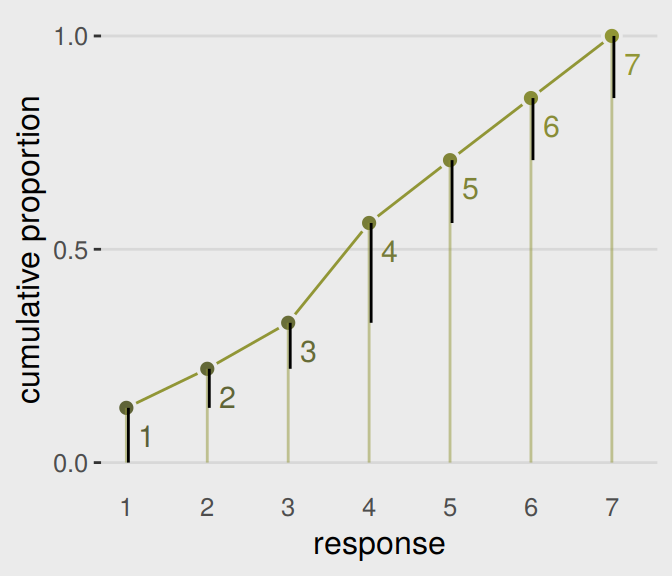

Our cumulative proportion plot, Figure 11.1.b, will require some pre-plot wrangling.

d %>%

group_by(response) %>%

count() %>%

mutate(pr_k = n / nrow(d)) %>%

ungroup() %>%

mutate(cum_pr_k = cumsum(pr_k)) %>%

ggplot(aes(x = response, y = cum_pr_k,

fill = response)) +

geom_line(color = canva_pal("Green fields")(4)[2]) +

geom_point(shape = 21, colour = "grey92",

size = 2.5, stroke = 1) +

scale_x_continuous(breaks = 1:7) +

scale_y_continuous("cumulative proportion", breaks = c(0, .5, 1)) +

scale_fill_gradient(low = canva_pal("Green fields")(4)[4],

high = canva_pal("Green fields")(4)[1]) +

coord_cartesian(ylim = c(0, 1)) +

theme_hc() +

theme(axis.ticks.x = element_blank(),

plot.background = element_rect(fill = "grey92"),

legend.position = "none")

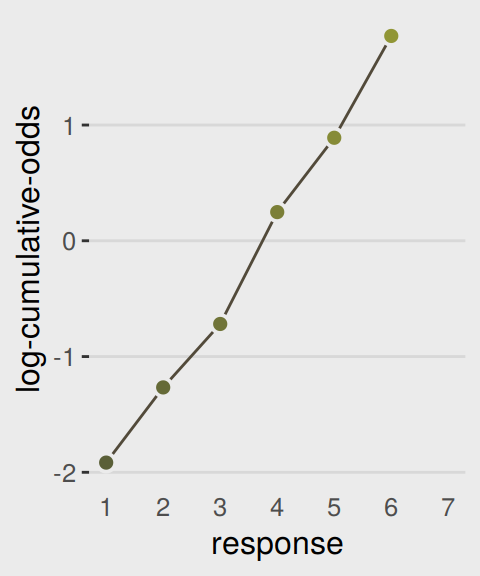

In order to make the next plot, we’ll need McElreath’s logit() function. Here it is, the logarithm of cumulative odds plot, Figure 11.1.c.

# McElreath's convenience function from page 335

logit <- function(x) log(x / (1 - x))

d %>%

group_by(response) %>%

count() %>%

mutate(pr_k = n / nrow(d)) %>%

ungroup() %>%

mutate(cum_pr_k = cumsum(pr_k)) %>%

filter(response < 7) %>%

# we can do the `logit()` conversion right in ggplot2

ggplot(aes(x = response, y = logit(cum_pr_k),

fill = response)) +

geom_line(color = canva_pal("Green fields")(4)[2]) +

geom_point(shape = 21, colour = "grey92",

size = 2.5, stroke = 1) +

scale_x_continuous(breaks = 1:7) +

scale_fill_gradient(low = canva_pal("Green fields")(4)[4],

high = canva_pal("Green fields")(4)[1]) +

coord_cartesian(xlim = c(1, 7)) +

ylab("log-cumulative-odds") +

theme_hc() +

theme(axis.ticks.x = element_blank(),

plot.background = element_rect(fill = "grey92"),

legend.position = "none")

The code for Figure 11.2 is itself something of a monster.

d_plot <-

d %>%

group_by(response) %>%

count() %>%

mutate(pr_k = n / nrow(d)) %>%

ungroup() %>%

mutate(cum_pr_k = cumsum(pr_k))

ggplot(data = d_plot,

aes(x = response, y = cum_pr_k,

color = cum_pr_k, fill = cum_pr_k)) +

geom_line(color = canva_pal("Green fields")(4)[1]) +

geom_point(shape = 21, colour = "grey92",

size = 2.5, stroke = 1) +

geom_linerange(aes(ymin = 0, ymax = cum_pr_k),

alpha = 1/2, color = canva_pal("Green fields")(4)[1]) +

# there must be more elegant ways to do this part

geom_linerange(data = . %>%

mutate(discrete_probability =

ifelse(response == 1, cum_pr_k,

cum_pr_k - pr_k)),

aes(x = response + .025,

ymin = ifelse(response == 1, 0, discrete_probability),

ymax = cum_pr_k),

color = "black") +

geom_text(data = tibble(text = 1:7,

response = seq(from = 1.25, to = 7.25, by = 1),

cum_pr_k = d_plot$cum_pr_k - .065),

aes(label = text),

size = 4) +

scale_x_continuous(breaks = 1:7) +

scale_y_continuous("cumulative proportion", breaks = c(0, .5, 1)) +

scale_fill_gradient(low = canva_pal("Green fields")(4)[4],

high = canva_pal("Green fields")(4)[1]) +

scale_color_gradient(low = canva_pal("Green fields")(4)[4],

high = canva_pal("Green fields")(4)[1]) +

coord_cartesian(ylim = c(0, 1)) +

theme_hc() +

theme(axis.ticks.x = element_blank(),

plot.background = element_rect(fill = "grey92"),

legend.position = "none")

McElreath’s convention for this first type of statistical model is

\[\begin{align*} R_i & \sim \text{Ordered} (\mathbf p) \\ \text{logit} (p_k) & = \alpha_k \\ \alpha_k & \sim \text{Normal} (0, 10) \end{align*}\]

The Ordered distribution is really just a categorical distribution that takes a vector \(\mathbf p = \{p_1, p_2, p_3, p_4, p_5, p_6\}\) of probabilities of each response value below the maximum response (7 in this example). Each response value \(k\) in this vector is defined by its link to an intercept parameter, \(\alpha_k\). Finally, some weakly regularizing priors are placed on these intercepts. (p. 335)
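As a rough illustration of how the intercepts define the response probabilities, the sketch below converts six cutpoints to cumulative probabilities and then to the seven response probabilities by successive differences. The cutpoint values are the rounded posterior means reported for the intercepts-only model later in this section; `alpha`, `cum_p`, and `pk` are names picked for this example.

```r
# rounded posterior means of the six cutpoints from the intercepts-only fit
alpha <- c(-1.92, -1.27, -0.72, 0.25, 0.89, 1.77)

# the inverse logit turns each cutpoint into Pr(response <= k)
cum_p <- plogis(alpha)

# pad with 0 and 1, then difference to get Pr(response == k) for k = 1, ..., 7
pk <- diff(c(0, cum_p, 1))

round(pk, 3)
sum(pk)  # the seven probabilities sum to 1
```

Only six intercepts are needed for seven response values because the cumulative probability of the largest value is 1 by definition.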

Whereas in rethinking::map() you indicate the likelihood by <criterion> ~ dordlogit(phi, c(<the thresholds>)), in brms::brm() you code family = cumulative. Here’s the intercepts-only model:

# define the start values

inits <- list(`Intercept[1]` = -2,

`Intercept[2]` = -1,

`Intercept[3]` = 0,

`Intercept[4]` = 1,

`Intercept[5]` = 2,

`Intercept[6]` = 2.5)

inits_list <- list(inits, inits)

b11.1 <-

brm(data = d, family = cumulative,

response ~ 1,

prior = prior(normal(0, 10), class = Intercept),

iter = 2000, warmup = 1000, cores = 2, chains = 2,

inits = inits_list, # here we add our start values

seed = 11)

McElreath needed to include the depth=2 argument in the rethinking::precis() function to show the threshold parameters from his m11.1stan model. With a brm() fit, we just use print() or summary() as usual.

print(b11.1)

## Family: cumulative

## Links: mu = logit; disc = identity

## Formula: response ~ 1

## Data: d (Number of observations: 9930)

## Samples: 2 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup samples = 2000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

## Intercept[1] -1.92 0.03 -1.97 -1.86 1357 1.00

## Intercept[2] -1.27 0.02 -1.31 -1.22 1903 1.00

## Intercept[3] -0.72 0.02 -0.76 -0.68 2250 1.00

## Intercept[4] 0.25 0.02 0.21 0.29 2135 1.00

## Intercept[5] 0.89 0.02 0.85 0.93 1997 1.00

## Intercept[6] 1.77 0.03 1.71 1.82 2222 1.00

##

## Samples were drawn using sampling(NUTS). For each parameter, Eff.Sample

## is a crude measure of effective sample size, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).

What McElreath’s m11.1stan summary termed cutpoints[k], ours termed Intercept[k]. In both cases, these are the \(\alpha_k\) parameters from the equations, above. The summaries look like those in the text, the numbers of effective samples are high, and the \(\hat{R}\) values are great. The model looks good.

Recall we use the brms::inv_logit_scaled() function in place of McElreath’s logistic() function to get these into the probability metric.

b11.1 %>%

fixef() %>%

inv_logit_scaled()

## Estimate Est.Error Q2.5 Q97.5

## Intercept[1] 0.1283109 0.5072655 0.1221261 0.1348106

## Intercept[2] 0.2198662 0.5060348 0.2117014 0.2278310

## Intercept[3] 0.3277413 0.5052444 0.3188163 0.3367289

## Intercept[4] 0.5617740 0.5050106 0.5522171 0.5714665

## Intercept[5] 0.7089573 0.5054599 0.6997932 0.7175630

## Intercept[6] 0.8544500 0.5071209 0.8470937 0.8610863

But recall that the posterior \(SD\) values (i.e., the ‘Est.Error’ column) are not valid using that approach, because the inverse-logit transformation is nonlinear. If you really care about them, you’ll need to work with the posterior_samples().
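A quick simulation hints at why. The sketch below assumes a Normal posterior for the first intercept, with the mean and SD rounded from the print() output above; the simulated `draws` are a stand-in for real posterior draws, not output from b11.1.

```r
set.seed(11)

# stand-in posterior draws for the first intercept (assumed Normal)
draws <- rnorm(1e4, mean = -1.92, sd = 0.03)

# not meaningful: pushing the SD itself through the inverse logit
plogis(0.03)

# meaningful: transform each draw first, then summarize
sd(plogis(draws))
```

The first value lands near .5, much like the Est.Error column above, while the second is far smaller. With the actual posterior draws from b11.1, the same draw-by-draw approach looks like this: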

posterior_samples(b11.1) %>%

select(starts_with("b_")) %>%

mutate_all(inv_logit_scaled) %>%

gather() %>%

group_by(key) %>%

summarise(mean = mean(value),

sd = sd(value),

ll = quantile(value, probs = .025),

ul = quantile(value, probs = .975))

## # A tibble: 6 x 5

## key mean sd ll ul

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 b_Intercept[1] 0.128 0.00325 0.122 0.135

## 2 b_Intercept[2] 0.220 0.00414 0.212 0.228

## 3 b_Intercept[3] 0.328 0.00462 0.319 0.337

## 4 b_Intercept[4] 0.562 0.00493 0.552 0.571

## 5 b_Intercept[5] 0.709 0.00451 0.700 0.718

## 6 b_Intercept[6] 0.854 0.00354 0.847 0.861

11.1.3 Adding predictor variables.

Now we define the linear model as \(\phi_i = \beta x_i\). Accordingly, the formula for our cumulative logit model becomes

\[\begin{align*} \text{log} \frac{\text{Pr} (y_i \leq k)}{1 - \text{Pr} (y_i \leq k)} & = \alpha_k - \phi_i \\ \phi_i & = \beta x_i \end{align*}\]

I’m not aware that brms has an equivalent to the rethinking::dordlogit() function. So here we’ll make it by hand. The code comes from McElreath’s GitHub page.

# first, we need to specify the `logistic()` function, which is a part of the `dordlogit()` function

logistic <- function(x) {

p <- 1 / (1 + exp(-x))

p <- ifelse(x == Inf, 1, p)

p

}

# now we get down to it

dordlogit <-

function(x, phi, a, log = FALSE) {

a <- c(as.numeric(a), Inf)

p <- logistic(a[x] - phi)

na <- c(-Inf, a)

np <- logistic(na[x] - phi)

p <- p - np

if (log == TRUE) p <- log(p)

p

}

The dordlogit() function works like this:

(pk <- dordlogit(1:7, 0, fixef(b11.1)[, 1]))

## [1] 0.12831095 0.09155524 0.10787506 0.23403279 0.14718324 0.14549271 0.14555001

Note the slight difference between how we used dordlogit() with a brm() fit summarized by fixef() and how McElreath used it with a map2stan() fit summarized by coef(). McElreath just put coef(m11.1) into dordlogit(). We, however, more specifically placed fixef(b11.1)[, 1] into the function. With the [, 1] part, we specified that we were working with the posterior means (i.e., Estimate) and neglecting the other summaries (i.e., the posterior SDs and 95% intervals). If you forget to subset, chaos ensues.

Next, as McElreath further noted in the text, “these probabilities imply an average outcome of:”

sum(pk * (1:7))

## [1] 4.1989

I found that a bit abstract. Here’s the thing in a more elaborate tibble format.

(

explicit_example <-

tibble(probability_of_a_response = pk) %>%

mutate(the_response = 1:7) %>%

mutate(their_product = probability_of_a_response * the_response)

)

## # A tibble: 7 x 3

## probability_of_a_response the_response their_product

## <dbl> <int> <dbl>

## 1 0.128 1 0.128

## 2 0.0916 2 0.183

## 3 0.108 3 0.324

## 4 0.234 4 0.936

## 5 0.147 5 0.736

## 6 0.145 6 0.873

## 7 0.146 7 1.02

explicit_example %>%

summarise(average_outcome_value = sum(their_product))

## # A tibble: 1 x 1

## average_outcome_value

## <dbl>

## 1 4.20

Aside

This made me wonder how this would compare if we were lazy and ignored the categorical nature of the response. Here we refit the model with the typical Gaussian likelihood.

brm(data = d, family = gaussian,

response ~ 1,

# in this case, 4 (i.e., the middle response) seems to be the conservative place to put the mean

prior = c(prior(normal(4, 10), class = Intercept),

prior(cauchy(0, 1), class = sigma)),

iter = 2000, warmup = 1000, cores = 4, chains = 4,

seed = 11) %>%

print()

## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: response ~ 1

## Data: d (Number of observations: 9930)

## Samples: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup samples = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

## Intercept 4.20 0.02 4.16 4.24 2652 1.00

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

## sigma 1.90 0.01 1.88 1.93 3412 1.00

##

## Samples were drawn using sampling(NUTS). For each parameter, Eff.Sample

## is a crude measure of effective sample size, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).

Happily, this yielded a mean estimate of 4.2, much like our average_outcome_value, above.

End aside

Now we’ll try it by subtracting .5 from each intercept.

# the probabilities of a given response

(pk <- dordlogit(1:7, 0, fixef(b11.1)[, 1] - .5))

## [1] 0.08196252 0.06402230 0.08223000 0.20920630 0.15893987 0.18437118 0.21926783

# the average rating

sum(pk * (1:7))

## [1] 4.729324

So the rule is we subtract the linear model from each intercept. Let’s fit our multivariable models.

# start values for b11.2

inits <- list(`Intercept[1]` = -1.9,

`Intercept[2]` = -1.2,

`Intercept[3]` = -0.7,

`Intercept[4]` = 0.2,

`Intercept[5]` = 0.9,

`Intercept[6]` = 1.8,

action = 0,

intention = 0,

contact = 0)

b11.2 <-

brm(data = d, family = cumulative,

response ~ 1 + action + intention + contact,

prior = c(prior(normal(0, 10), class = Intercept),

prior(normal(0, 10), class = b)),

iter = 2000, warmup = 1000, cores = 2, chains = 2,

inits = list(inits, inits),

seed = 11)

# start values for b11.3

inits <- list(`Intercept[1]` = -1.9,

`Intercept[2]` = -1.2,

`Intercept[3]` = -0.7,

`Intercept[4]` = 0.2,

`Intercept[5]` = 0.9,

`Intercept[6]` = 1.8,

action = 0,

intention = 0,

contact = 0,

`action:intention` = 0,

`contact:intention` = 0)

b11.3 <-

update(b11.2,

formula = response ~ 1 + action + intention + contact + action:intention + contact:intention,

inits = list(inits, inits))

brms doesn’t have a coeftab() function like rethinking does. But as we did for Chapter 6, we can reproduce it with help from the broom package and a bit of data wrangling.

library(broom)

tibble(model = str_c("b11.", 1:3)) %>%

mutate(fit = purrr::map(model, get)) %>%

mutate(tidy = purrr::map(fit, tidy)) %>%

unnest(tidy) %>%

select(model, term, estimate) %>%

filter(term != "lp__") %>%

complete(term = distinct(., term), model) %>%

mutate(estimate = round(estimate, digits = 2)) %>%

spread(key = model, value = estimate) %>%

# this last step isn't necessary, but it orders the rows to match the text

slice(c(6:11, 1, 4, 3, 2, 5))

## # A tibble: 11 x 4

## term b11.1 b11.2 b11.3

## <chr> <dbl> <dbl> <dbl>

## 1 b_Intercept[1] -1.92 -2.84 -2.64

## 2 b_Intercept[2] -1.27 -2.16 -1.94

## 3 b_Intercept[3] -0.72 -1.58 -1.34

## 4 b_Intercept[4] 0.25 -0.55 -0.31

## 5 b_Intercept[5] 0.89 0.12 0.36

## 6 b_Intercept[6] 1.77 1.02 1.27

## 7 b_action NA -0.71 -0.47

## 8 b_intention NA -0.72 -0.28

## 9 b_contact NA -0.96 -0.33

## 10 b_action:intention NA NA -0.45

## 11 b_intention:contact NA NA -1.27

If you really wanted that last nobs row at the bottom, you could elaborate on this code: b11.1$data %>% count(). Also, if you want a proper coeftab() function for brms, McElreath’s code lives here. Give it a whirl.

Here we compute the WAIC. Caution: This took some time to compute.

b11.1 <- add_criterion(b11.1, "waic")

b11.2 <- add_criterion(b11.2, "waic")

b11.3 <- add_criterion(b11.3, "waic")

Now compare the models.

loo_compare(b11.1, b11.2, b11.3, criterion = "waic") %>%

print(simplify = F)

## elpd_diff se_diff elpd_waic se_elpd_waic p_waic se_p_waic waic se_waic

## b11.3 0.0 0.0 -18464.5 40.7 10.9 0.1 36929.0 81.3

## b11.2 -80.3 12.9 -18544.8 38.2 8.9 0.0 37089.6 76.4

## b11.1 -462.7 31.4 -18927.2 28.8 5.9 0.0 37854.3 57.7

Here are the WAIC weights.

model_weights(b11.1, b11.2, b11.3,

weights = "waic") %>%

round(digits = 3)## b11.1 b11.2 b11.3

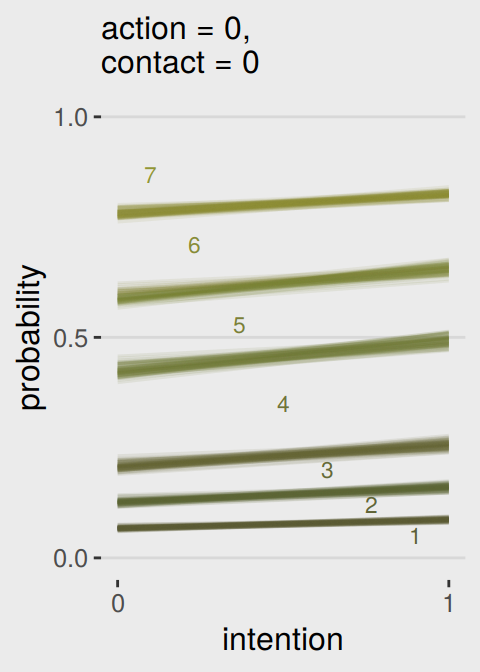

## 0 0 1McElreath made Figure 11.3 by extracting the samples of his m11.3, saving them as post, and working some hairy base R plot() code. We’ll take a different route and use brms::fitted(). This will take substantial data wrangling, but hopefully it’ll be instructive. Let’s first take a look at the initial fitted() output for the beginnings of Figure 11.3.a.

nd <-

tibble(action = 0,

contact = 0,

intention = 0:1)

max_iter <- 100

fitted(b11.3,

newdata = nd,

subset = 1:max_iter,

summary = F) %>%

as_tibble() %>%

glimpse()

## Observations: 100

## Variables: 14

## $ `1.1` <dbl> 0.06829540, 0.06935237, 0.06181907, 0.06458662, 0.06342674, 0.06398108, 0.06457421, 0.0697354…

## $ `2.1` <dbl> 0.08912081, 0.08575198, 0.08107204, 0.08447806, 0.08213552, 0.08289504, 0.08489850, 0.0918686…

## $ `1.2` <dbl> 0.06126422, 0.06071582, 0.05218200, 0.05383354, 0.05205933, 0.05864133, 0.05692265, 0.0593070…

## $ `2.2` <dbl> 0.07662233, 0.07262976, 0.06588789, 0.06771568, 0.06500267, 0.07308790, 0.07183675, 0.0747587…

## $ `1.3` <dbl> 0.07839242, 0.08109606, 0.07492128, 0.07873677, 0.07623616, 0.07811790, 0.07907304, 0.0806364…

## $ `2.3` <dbl> 0.09375887, 0.09363517, 0.09076986, 0.09489860, 0.09149364, 0.09333144, 0.09542420, 0.0970133…

## $ `1.4` <dbl> 0.2216617, 0.2113044, 0.2204816, 0.2173166, 0.2134680, 0.2196718, 0.2197378, 0.2134873, 0.209…

## $ `2.4` <dbl> 0.2418302, 0.2273424, 0.2436367, 0.2390304, 0.2350870, 0.2402670, 0.2413653, 0.2338448, 0.230…

## $ `1.5` <dbl> 0.1660660, 0.1730582, 0.1688438, 0.1701783, 0.1683491, 0.1658178, 0.1654624, 0.1632896, 0.172…

## $ `2.5` <dbl> 0.1615694, 0.1701536, 0.1659914, 0.1667925, 0.1661902, 0.1624098, 0.1617065, 0.1593140, 0.168…

## $ `1.6` <dbl> 0.1889677, 0.1854993, 0.1965720, 0.1982124, 0.2044757, 0.1925115, 0.1870113, 0.1919342, 0.190…

## $ `2.6` <dbl> 0.1665455, 0.1683141, 0.1742956, 0.1752124, 0.1825064, 0.1711461, 0.1652618, 0.1689849, 0.167…

## $ `1.7` <dbl> 0.2153525, 0.2189739, 0.2251802, 0.2171357, 0.2219850, 0.2212585, 0.2272187, 0.2216099, 0.221…

## $ `2.7` <dbl> 0.1705528, 0.1821730, 0.1783465, 0.1718723, 0.1775845, 0.1768628, 0.1795070, 0.1742156, 0.174…

Hopefully by now it’s clear why we needed the nd tibble, which we made use of in the newdata = nd argument. Because we set summary = F, we get draws from the posterior instead of summaries. With max_iter, we controlled how many of those posterior draws we wanted. McElreath used 100, which he indicated at the top of page 341, so we followed suit. It took me a minute to wrap my head around the meaning of the 14 vectors, which were named by brms::fitted() default. Notice how each column is named by two numerals, separated by a period. The first numeral indicates which of the two intention values the draw is based on (i.e., 1 stands for intention == 0, 2 stands for intention == 1). The numbers to the right of the periods are the seven response options for response. For each posterior draw, you get one of those for each value of intention. Finally, it might not be immediately apparent, but the values are on the probability scale, just like pk on page 338.
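As a quick sanity check on that reading of the column names, the sketch below takes the first posterior draw from the glimpse() output above, typed in by hand, and confirms that the seven probabilities within each intention value sum to 1 (up to rounding in the printout). The object names `draw_1`, `parts`, `intention_index`, `rating`, and `sums` are picked for this example.

```r
# first posterior draw, copied from the glimpse() output above
draw_1 <- c(`1.1` = 0.06829540, `2.1` = 0.08912081,
            `1.2` = 0.06126422, `2.2` = 0.07662233,
            `1.3` = 0.07839242, `2.3` = 0.09375887,
            `1.4` = 0.2216617,  `2.4` = 0.2418302,
            `1.5` = 0.1660660,  `2.5` = 0.1615694,
            `1.6` = 0.1889677,  `2.6` = 0.1665455,
            `1.7` = 0.2153525,  `2.7` = 0.1705528)

# split each name at the period: left = row of `nd`, right = response value
parts <- do.call(rbind, strsplit(names(draw_1), split = ".", fixed = TRUE))
intention_index <- parts[, 1]
rating <- as.integer(parts[, 2])

# the probabilities sum to 1 within each intention value
sums <- tapply(draw_1, intention_index, sum)
sums
```

If the left-hand numeral indexed anything other than the rows of nd, these within-group sums would have no reason to equal 1.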

Now that we know what we have in hand, it’s just a matter of careful wrangling to get those probabilities into a more useful format to feed into ggplot2. I’ve extensively annotated the code, below. If you lose track of what happens in a given step, just run the code up till that point. Go step by step.

nd <-

tibble(action = 0,

contact = 0,

intention = 0:1)

max_iter <- 100

fitted(b11.3,

newdata = nd,

subset = 1:max_iter,

summary = F) %>%

as_tibble() %>%

# we convert the data to the long format

gather() %>%

# we need a variable to index which posterior iteration we're working with

mutate(iter = rep(1:max_iter, times = 14)) %>%

# this step isn’t technically necessary, but I prefer my `iter` index at the far left.

select(iter, everything()) %>%

# here we extract the `intention` and `response` information out of the `key` vector and

# spread it into two vectors.

separate(key, into = c("intention", "rating")) %>%

# that step produced two character vectors. they’ll be more useful as numbers

mutate(intention = intention %>% as.double(),

rating = rating %>% as.double()) %>%

# here we convert `intention` into its proper 0:1 metric

mutate(intention = intention -1) %>%

# this isn't necessary, but it helps me understand exactly what metric the values are currently in

rename(pk = value) %>%

# this step is based on McElreath's R code 11.10 on page 338

mutate(`pk:rating` = pk * rating) %>%

# grouping by `iter` and `intention` makes the `cumsum()` below restart within each

# posterior draw and each level of `intention`

group_by(iter, intention) %>%

# this is very important for the next step.

arrange(iter, intention, rating) %>%

# here we take our `pk` values and make cumulative sums. why? take a long hard look at Figure 11.2.

mutate(probability = cumsum(pk)) %>%

# `rating == 7` is unnecessary. these `probability` values are by definition 1

filter(rating < 7) %>%

ggplot(aes(x = intention,

y = probability,

color = probability)) +

geom_line(aes(group = interaction(iter, rating)),

alpha = 1/10) +

# note how we made a new data object for `geom_text()`

geom_text(data = tibble(text = 1:7,

intention = seq(from = .9, to = .1, length.out = 7),

probability = c(.05, .12, .20, .35, .53, .71, .87)),

aes(label = text),

size = 3) +

scale_x_continuous(breaks = 0:1) +

scale_y_continuous(breaks = c(0, .5, 1)) +

coord_cartesian(ylim = 0:1) +

scale_color_gradient(low = canva_pal("Green fields")(4)[4],

high = canva_pal("Green fields")(4)[1]) +

labs(subtitle = "action = 0,\ncontact = 0",

x = "intention") +

theme_hc() +

theme(plot.background = element_rect(fill = "grey92"),

legend.position = "none")

Boom!

Okay, that pile of code is a bit of a mess and you’re not going to want to repeatedly cut and paste all that. Let’s condense it into a homemade function, make_Figure_11.3_data().

make_Figure_11.3_data <- function(action, contact, max_iter){

nd <-

tibble(action = action,

contact = contact,

intention = 0:1)

max_iter <- max_iter

fitted(b11.3,

newdata = nd,

subset = 1:max_iter,

summary = F) %>%

as_tibble() %>%

gather() %>%

mutate(iter = rep(1:max_iter, times = 14)) %>%

select(iter, everything()) %>%

separate(key, into = c("intention", "rating")) %>%

mutate(intention = intention %>% as.double(),

rating = rating %>% as.double()) %>%

mutate(intention = intention -1) %>%

rename(pk = value) %>%

mutate(`pk:rating` = pk * rating) %>%

group_by(iter, intention) %>%

arrange(iter, intention, rating) %>%

mutate(probability = cumsum(pk)) %>%

filter(rating < 7)

}

Now we’ll use our sweet homemade function to make our plots.

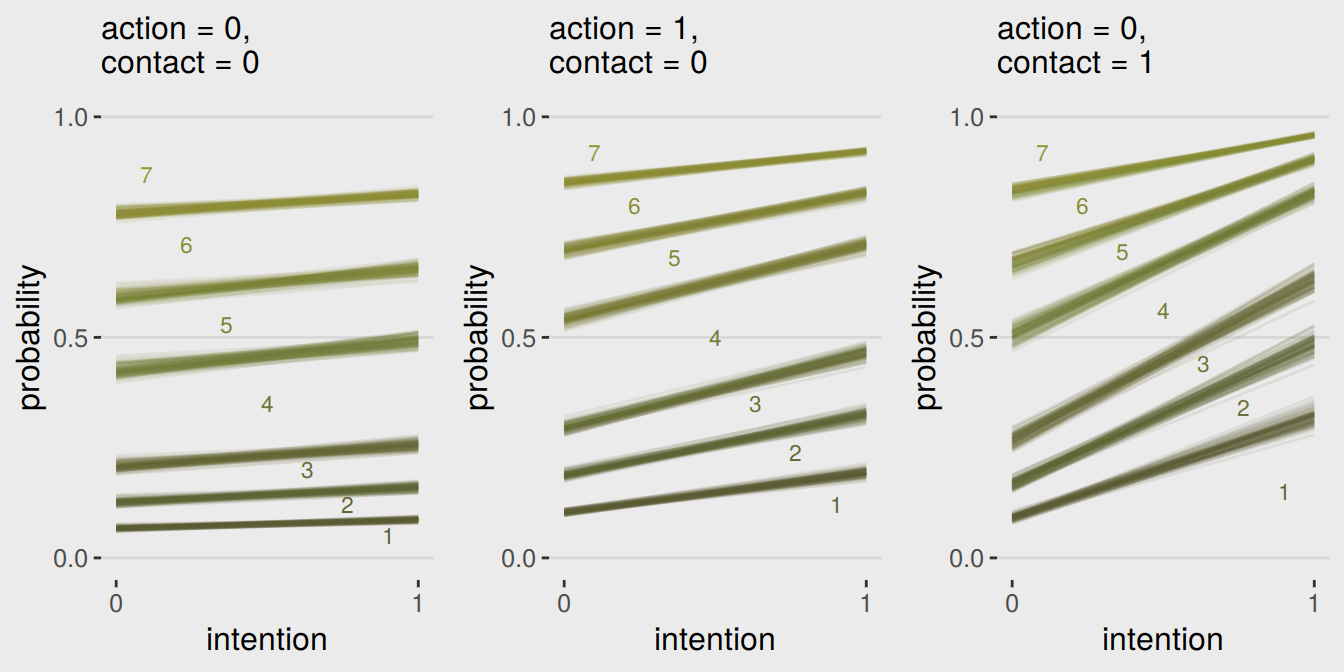

# Figure 11.3.a

p1 <-

make_Figure_11.3_data(action = 0,

contact = 0,

max_iter = 100) %>%

ggplot(aes(x = intention,

y = probability,

color = probability)) +

geom_line(aes(group = interaction(iter, rating)),

alpha = 1/10) +

geom_text(data = tibble(text = 1:7,

intention = seq(from = .9, to = .1, length.out = 7),

probability = c(.05, .12, .20, .35, .53, .71, .87)),

aes(label = text),

size = 3) +

scale_x_continuous(breaks = 0:1) +

scale_y_continuous(breaks = c(0, .5, 1)) +

scale_color_gradient(low = canva_pal("Green fields")(4)[4],

high = canva_pal("Green fields")(4)[1]) +

coord_cartesian(ylim = 0:1) +

labs(subtitle = "action = 0,\ncontact = 0",

x = "intention") +

theme_hc() +

theme(plot.background = element_rect(fill = "grey92"),

legend.position = "none")

# Figure 11.3.b

p2 <-

make_Figure_11.3_data(action = 1,

contact = 0,

max_iter = 100) %>%

ggplot(aes(x = intention,

y = probability,

color = probability)) +

geom_line(aes(group = interaction(iter, rating)),

alpha = 1/10) +

geom_text(data = tibble(text = 1:7,

intention = seq(from = .9, to = .1, length.out = 7),

probability = c(.12, .24, .35, .50, .68, .80, .92)),

aes(label = text),

size = 3) +

scale_x_continuous(breaks = 0:1) +

scale_y_continuous(breaks = c(0, .5, 1)) +

scale_color_gradient(low = canva_pal("Green fields")(4)[4],

high = canva_pal("Green fields")(4)[1]) +

coord_cartesian(ylim = 0:1) +

labs(subtitle = "action = 1,\ncontact = 0",

x = "intention") +

theme_hc() +

theme(plot.background = element_rect(fill = "grey92"),

legend.position = "none")

# Figure 11.3.c

p3 <-

make_Figure_11.3_data(action = 0,

contact = 1,

max_iter = 100) %>%

ggplot(aes(x = intention,

y = probability,

color = probability)) +

geom_line(aes(group = interaction(iter, rating)),

alpha = 1/10) +

geom_text(data = tibble(text = 1:7,

intention = seq(from = .9, to = .1, length.out = 7),

probability = c(.15, .34, .44, .56, .695, .8, .92)),

aes(label = text),

size = 3) +

scale_x_continuous(breaks = 0:1) +

scale_y_continuous(breaks = c(0, .5, 1)) +

scale_color_gradient(low = canva_pal("Green fields")(4)[4],

high = canva_pal("Green fields")(4)[1]) +

coord_cartesian(ylim = 0:1) +

labs(subtitle = "action = 0,\ncontact = 1",

x = "intention") +

theme_hc() +

theme(plot.background = element_rect(fill = "grey92"),

legend.position = "none")

# here we stitch them together with `grid.arrange()`

library(gridExtra)

grid.arrange(p1, p2, p3, ncol = 3)

If you’d like to learn more about these kinds of models and how to fit them in brms, check out Bürkner and Vuorre’s Ordinal Regression Models in Psychology: A Tutorial.

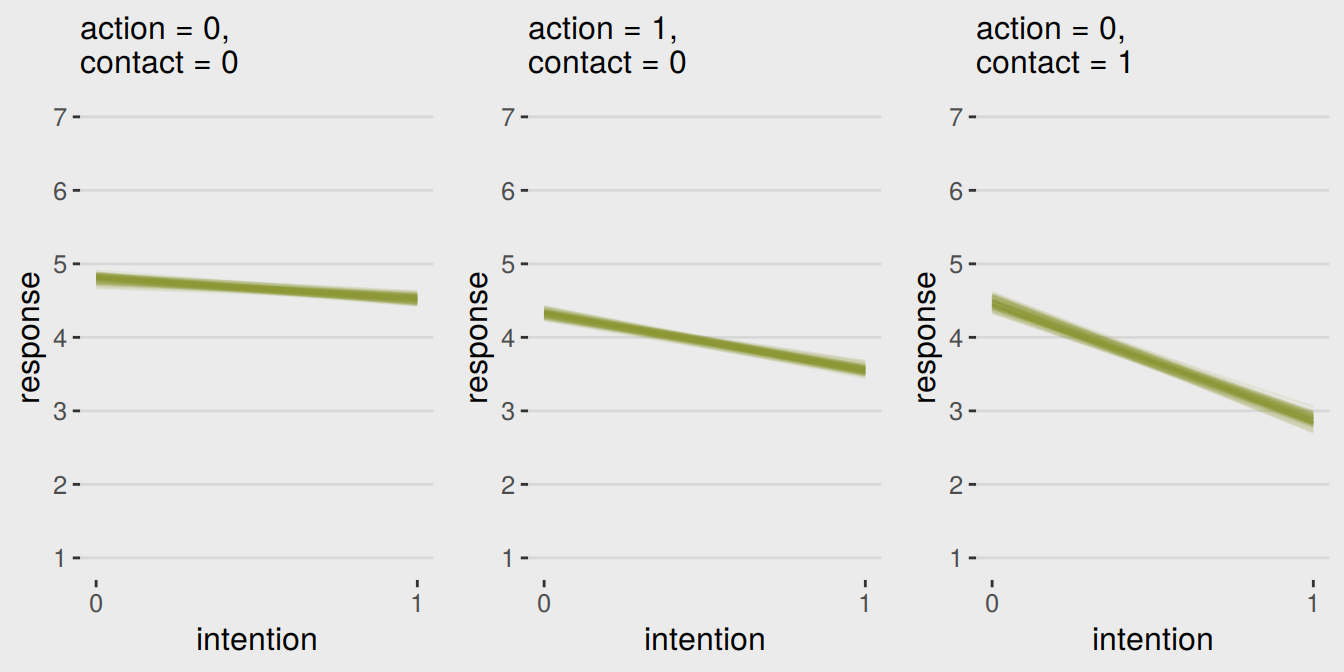

11.1.4 Bonus: Figure 11.3 alternative.

I have a lot of respect for McElreath. But man, Figure 11.3 is the worst. I’m in clinical psychology and there’s no way a working therapist is going to look at a figure like that and have any sense of what’s going on. Nobody’s got time for that. We have clients to serve! Happily, we can go further. Look back at McElreath’s R code 11.10 on page 338. See how he multiplied the elements of pk by their respective response values and then just summed them up to get an average outcome value? With just a little amendment to our custom make_Figure_11.3_data() function, we can wrangle our fitted() output to express average response values for each of our conditions of interest. Here’s the adjusted function:

make_data_for_an_alternative_fiture <- function(action, contact, max_iter){

nd <-

tibble(action = action,

contact = contact,

intention = 0:1)

max_iter <- max_iter

fitted(b11.3,

newdata = nd,

subset = 1:max_iter,

summary = F) %>%

as_tibble() %>%

gather() %>%

mutate(iter = rep(1:max_iter, times = 14)) %>%

select(iter, everything()) %>%

separate(key, into = c("intention", "rating")) %>%

mutate(intention = intention %>% as.double(),

rating = rating %>% as.double()) %>%

mutate(intention = intention -1) %>%

rename(pk = value) %>%

mutate(`pk:rating` = pk * rating) %>%

group_by(iter, intention) %>%

# everything above this point is identical to the previous custom function.

# all we do is replace the last few lines with this one line of code.

summarise(mean_rating = sum(`pk:rating`))

}

Our handy homemade but monstrously-named make_data_for_an_alternative_fiture() function works very much like its predecessor. You’ll see.

# alternative to Figure 11.3.a

p1 <-

make_data_for_an_alternative_fiture(action = 0,

contact = 0,

max_iter = 100) %>%

ggplot(aes(x = intention, y = mean_rating, group = iter)) +

geom_line(alpha = 1/10, color = canva_pal("Green fields")(4)[1]) +

scale_x_continuous("intention", breaks = 0:1) +

scale_y_continuous("response", breaks = 1:7) +

coord_cartesian(ylim = 1:7) +

labs(subtitle = "action = 0,\ncontact = 0") +

theme_hc() +

theme(plot.background = element_rect(fill = "grey92"),

legend.position = "none")

# alternative to Figure 11.3.b

p2 <-

make_data_for_an_alternative_fiture(action = 1,

contact = 0,

max_iter = 100) %>%

ggplot(aes(x = intention, y = mean_rating, group = iter)) +

geom_line(alpha = 1/10, color = canva_pal("Green fields")(4)[1]) +

scale_x_continuous("intention", breaks = 0:1) +

scale_y_continuous("response", breaks = 1:7) +

coord_cartesian(ylim = 1:7) +

labs(subtitle = "action = 1,\ncontact = 0") +

theme_hc() +

theme(plot.background = element_rect(fill = "grey92"),

legend.position = "none")

# alternative to Figure 11.3.c

p3 <-

make_data_for_an_alternative_fiture(action = 0,

contact = 1,

max_iter = 100) %>%

ggplot(aes(x = intention, y = mean_rating, group = iter)) +

geom_line(alpha = 1/10, color = canva_pal("Green fields")(4)[1]) +

scale_x_continuous("intention", breaks = 0:1) +

scale_y_continuous("response", breaks = 1:7) +

coord_cartesian(ylim = 1:7) +

labs(subtitle = "action = 0,\ncontact = 1") +

theme_hc() +

theme(plot.background = element_rect(fill = "grey92"),

legend.position = "none")

grid.arrange(p1, p2, p3, ncol = 3)

Finally; now those are plots I can sell in a clinical psychology journal!
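As a rough cross-check on those plots, the same averages can be approximated from point estimates alone. The sketch below plugs the rounded posterior means for b11.3 from the coefficient table above into the cumulative-logit formula, for action = 0 and contact = 0. It ignores posterior uncertainty, so treat it as a back-of-the-envelope check rather than a replacement for the fitted()-based workflow; `mean_rating()` is a helper defined here, not part of brms.

```r
# rounded posterior means for b11.3, copied from the coefficient table
alpha       <- c(-2.64, -1.94, -1.34, -0.31, 0.36, 1.27)
b_intention <- -0.28

# expected rating implied by the cutpoints and a linear predictor phi
mean_rating <- function(phi, alpha) {
  cum_p <- plogis(alpha - phi)   # Pr(response <= k)
  pk    <- diff(c(0, cum_p, 1))  # Pr(response == k)
  sum(pk * 1:7)
}

# with action = 0 and contact = 0, phi reduces to b_intention * intention
sapply(c(`intention = 0` = 0, `intention = 1` = 1),
       function(intention) mean_rating(b_intention * intention, alpha))
```

Because the intention coefficient is negative, the expected rating drops when intention switches from 0 to 1, which is the downward slope in the first panel.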

11.2 Zero-inflated outcomes

Very often, the things we can measure are not emissions from any pure process. Instead, they are mixtures of multiple processes. Whenever there are different causes for the same observation, then a mixture model may be useful. A mixture model uses more than one simple probability distribution to model a mixture of causes. In effect, these models use more than one likelihood for the same outcome variable.

Count variables are especially prone to needing a mixture treatment. The reason is that a count of zero can often arise more than one way. A “zero” means that nothing happened, and nothing can happen either because the rate of events is low or rather because the process that generates events failed to get started. (p. 342, emphasis in the original)

In his Rethinking: Breaking the law box, McElreath discussed how advances in computing have made it possible for working scientists to define their own data generating models. If you’d like to dive deeper into the topic, check out Bürkner’s vignette, Define Custom Response Distributions with brms. We’ll even make use of it a little further down.

11.2.1 Example: Zero-inflated Poisson.

Here we simulate our drunk monk data.

# define parameters

prob_drink <- 0.2 # 20% of days

rate_work <- 1 # average 1 manuscript per day

# sample one year of production

n <- 365

# simulate days monks drink

set.seed(11)

drink <- rbinom(n, 1, prob_drink)

# simulate manuscripts completed

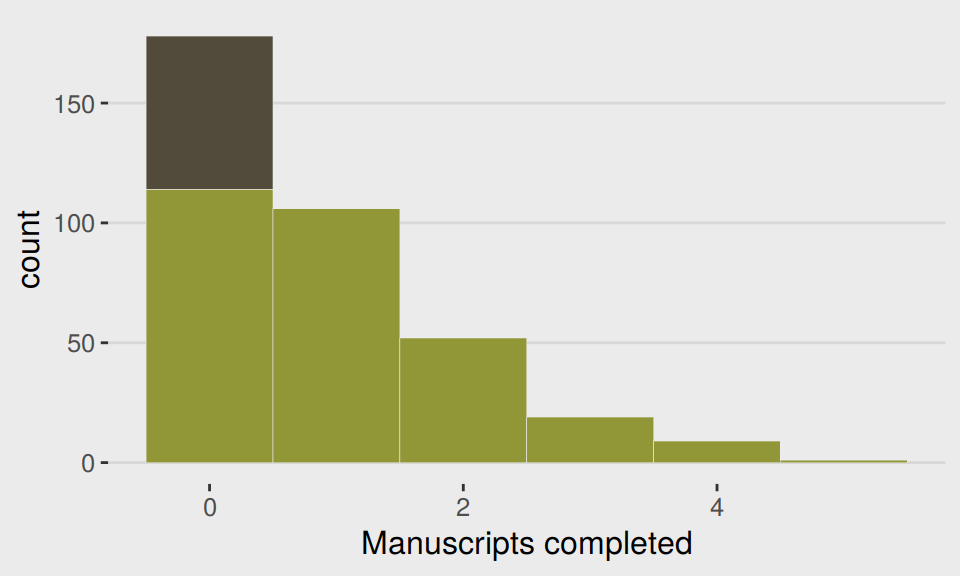

y <- (1 - drink) * rpois(n, rate_work)

We’ll put those data in a tidy tibble before plotting.

d <-

tibble(Y = y) %>%

arrange(Y) %>%

mutate(zeros = c(rep("zeros_drink", times = sum(drink)),

rep("zeros_work", times = sum(y == 0 & drink == 0)),

rep("nope", times = n - sum(y == 0))

))

ggplot(data = d, aes(x = Y)) +

geom_histogram(aes(fill = zeros),

binwidth = 1, size = 1/10, color = "grey92") +

scale_fill_manual(values = c(canva_pal("Green fields")(4)[1],

canva_pal("Green fields")(4)[2],

canva_pal("Green fields")(4)[1])) +

xlab("Manuscripts completed") +

theme_hc() +

theme(plot.background = element_rect(fill = "grey92"),

legend.position = "none")

With these data, the likelihood of observing zero on y (i.e., the likelihood zero manuscripts were completed on a given occasion) is

\[\begin{align*} \text{Pr} (0 | p, \lambda) & = \text{Pr} (\text{drink} | p) + \text{Pr} (\text{work} | p) \times \text{Pr} (0 | \lambda) \\ & = p + (1 - p) \text{ exp} (- \lambda) \end{align*}\]

And since the Poisson likelihood of \(y\) is \(\text{Pr} (y | \lambda) = \lambda^y \text{exp} (- \lambda) / y!\), the likelihood of \(y = 0\) is just \(\text{exp} (- \lambda)\). The above is just the mathematics for:

The probability of observing a zero is the probability that the monks did drink OR (\(+\)) the probability that the monks worked AND (\(\times\)) failed to finish anything.

And the likelihood of a non-zero value \(y\) is:

\[\begin{align*} \text{Pr} (y | p, \lambda) & = \text{Pr} (\text{drink} | p) (0) + \text{Pr} (\text{work} | p) \text{Pr} (y | \lambda) \\ & = (1 - p) \frac {\lambda^y \text{ exp} (- \lambda)}{y!} \end{align*}\]

Since drinking monks never produce \(y > 0\), the expression above is just the chance the monks both work \(1 - p\), and finish \(y\) manuscripts. (p. 344, emphasis in the original)
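As a quick sanity check on the two likelihood expressions above, here is a minimal base R sketch. It plugs in the simulation values from this section (a drinking probability of 0.2 and a work rate of 1) and compares the analytic probability of a zero with the share of zeros in a larger simulated sample. The names `pr_zero`, `pr_nonzero`, `n_sim`, `drink_sim`, and `y_sim` are ours and appear nowhere in the text.

```r
# parameter values copied from the drunk monk simulation
prob_drink <- 0.2
rate_work  <- 1

# Pr(0 | p, lambda) = p + (1 - p) * exp(-lambda)
pr_zero <- prob_drink + (1 - prob_drink) * exp(-rate_work)

# Pr(y | p, lambda) = (1 - p) * Poisson(y | lambda), here for y = 1, 2, 3
pr_nonzero <- (1 - prob_drink) * dpois(1:3, lambda = rate_work)

# simulate many more days than the 365 above (n_sim is an arbitrary choice)
set.seed(11)
n_sim     <- 1e5
drink_sim <- rbinom(n_sim, size = 1, prob = prob_drink)
y_sim     <- (1 - drink_sim) * rpois(n_sim, lambda = rate_work)

# analytic and simulated probabilities of a zero, side by side
round(c(analytic = pr_zero, simulated = mean(y_sim == 0)), digits = 3)
```

With these values the analytic probability of a zero is about 0.494, so roughly half of all days should show no finished manuscripts even though the monks drink on only a fifth of them.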

So letting \(p\) be the probability the zero-generating process is at work (i.e., the monks drink) and \(\lambda\) be the rate of the Poisson process, the zero-inflated Poisson (ZIPoisson) regression model takes the basic form

\[\begin{align*} y_i & \sim \text{ZIPoisson} (p_i, \lambda_i)\\ \text{logit} (p_i) & = \alpha_p + \beta_p x_i \\ \text{log} (\lambda_i) & = \alpha_\lambda + \beta_\lambda x_i \end{align*}\]

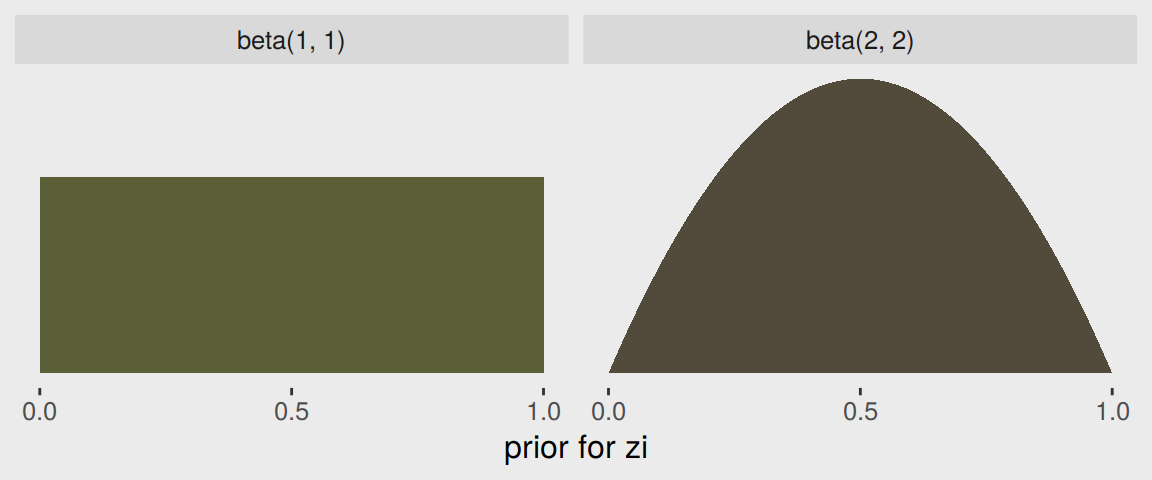

One last thing to note is that in brms, \(p_i\) is denoted zi. So the intercept [and zi] only zero-inflated Poisson model in brms looks like this.

b11.4 <-

brm(data = d, family = zero_inflated_poisson,

Y ~ 1,

prior = c(prior(normal(0, 10), class = Intercept),

prior(beta(2, 2), class = zi)), # the brms default is beta(1, 1)

cores = 4,

seed = 11)

print(b11.4)

## Family: zero_inflated_poisson

## Links: mu = log; zi = identity

## Formula: Y ~ 1

## Data: d (Number of observations: 365)

## Samples: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup samples = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

## Intercept 0.09 0.08 -0.07 0.26 1404 1.00

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

## zi 0.23 0.05 0.13 0.33 1470 1.00

##

## Samples were drawn using sampling(NUTS). For each parameter, Eff.Sample

## is a crude measure of effective sample size, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).

The zero-inflated Poisson is parameterized in brms a little differently than it is in rethinking. The different parameterization did not influence the estimate for the Intercept, \(\text{log} (\lambda)\). Both here and in the text, it was about zero, which puts \(\lambda\) itself near 1. However, the parameterization did influence the summary of zi. Note how McElreath’s logistic(-1.39) yielded 0.1994078, which is rather close to our zi estimate of 0.235. Because he didn’t set his seed in the text before simulating, we can’t exactly reproduce his simulated drunk monk data, so our results will vary a little due to that alone. But after accounting for simulation variance, hopefully it’s clear that zi in brms is already in the probability metric. There’s no need to convert it.
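If you’d like to move between the two metrics yourself, here is a small base R sketch. It uses `plogis()` and `qlogis()`, base R’s inverse-logit and logit functions, as stand-ins for the `logistic()` call in the text. The value 0.23 is just the rounded zi estimate from the `print()` output above.

```r
# McElreath's estimate lived on the log-odds scale;
# the inverse logit puts it in the probability metric
plogis(-1.39)

# going the other way: our rounded zi estimate expressed as log-odds
qlogis(0.23)

# the two functions are inverses of one another
plogis(qlogis(0.23))
```

The first line reproduces the 0.1994078 mentioned above.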

In the prior argument, we used beta(2, 2) for zi and also noted in a code comment that the brms default is beta(1, 1). To give you a sense of the priors, let’s plot them.

tibble(`zi prior`= seq(from = 0, to = 1, length.out = 50)) %>%

mutate(`beta(1, 1)` = dbeta(`zi prior`, 1, 1),

`beta(2, 2)` = dbeta(`zi prior`, 2, 2)) %>%

gather(prior, density, -`zi prior`) %>%

ggplot(aes(x = `zi prior`,

ymin = 0,

ymax = density)) +

geom_ribbon(aes(fill = prior)) +

scale_fill_manual(values = c(canva_pal("Green fields")(4)[4],

canva_pal("Green fields")(4)[2])) +

scale_x_continuous("prior for zi", breaks = c(0, .5, 1)) +

scale_y_continuous(NULL, breaks = NULL) +

theme_hc() +

theme(plot.background = element_rect(fill = "grey92"),

legend.position = "none") +

facet_wrap(~prior)

Hopefully this clarifies that the brms default is flat, whereas our prior regularized a bit toward .5. Anyway, here’s \(\lambda\), the exponentiated Intercept.

fixef(b11.4)[1, ] %>%

exp()## Estimate Est.Error Q2.5 Q97.5

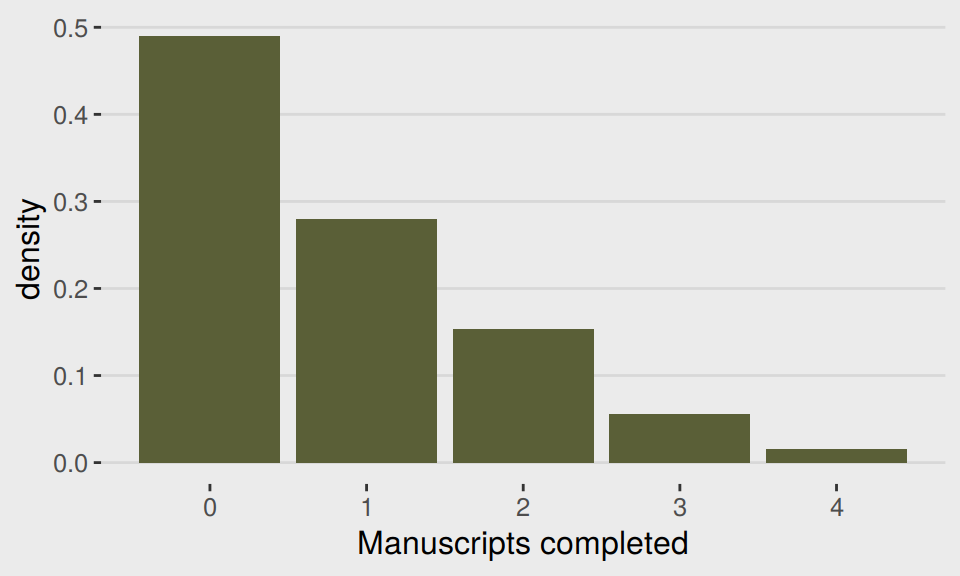

## 1.0975245 1.0869817 0.9302144 1.295733811.2.1.1 Overthinking: Zero-inflated Poisson distribution function.

dzip <- function(x, p, lambda, log = TRUE) {

ll <- ifelse(

x == 0,

p + (1 - p) * exp(-lambda),

(1 - p) * dpois(x, lambda, log = FALSE)

)

if (log == TRUE) ll <- log(ll)

return(ll)

}

We can use McElreath’s dzip() to do a posterior predictive check for our model. To work with our estimates for \(p\) and \(\lambda\) directly, we’ll set log = F.

p_b11.4 <- posterior_summary(b11.4)[2, 1]

lambda_b11.4 <- posterior_summary(b11.4)[1, 1] %>% exp()

tibble(x = 0:4) %>%

mutate(density = dzip(x = x,

p = p_b11.4,

lambda = lambda_b11.4,

log = F)) %>%

ggplot(aes(x = x, y = density)) +

geom_col(fill = canva_pal("Green fields")(4)[4]) +

xlab("Manuscripts completed") +

theme_hc() +

theme(plot.background = element_rect(fill = "grey92"))

If you look up to the histogram we made at the beginning of this section, you’ll see this isn’t a terrible approximation.
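One more check you might run on a hand-written density like dzip() is whether it behaves like a proper probability mass function. The sketch below repeats the function so the block runs on its own and plugs in the rounded posterior means from the `print(b11.4)` output (zi = 0.23, Intercept = 0.09). The names `p_hat`, `lambda_hat`, `total_mass`, and `zip_mean` are ours, and truncating the support at 100 is an arbitrary choice that is harmless for a rate this small.

```r
# same function as above, repeated so this block is self-contained
dzip <- function(x, p, lambda, log = TRUE) {
  ll <- ifelse(
    x == 0,
    p + (1 - p) * exp(-lambda),
    (1 - p) * dpois(x, lambda, log = FALSE)
  )
  if (log == TRUE) ll <- log(ll)
  return(ll)
}

# rounded posterior means taken from the model summary
p_hat      <- 0.23
lambda_hat <- exp(0.09)

# the probabilities over the (truncated) support should sum to 1
total_mass <- sum(dzip(0:100, p = p_hat, lambda = lambda_hat, log = FALSE))

# the mean of a zero-inflated Poisson is (1 - p) * lambda
zip_mean <- sum(0:100 * dzip(0:100, p = p_hat, lambda = lambda_hat, log = FALSE))

c(total_mass = total_mass, zip_mean = zip_mean, closed_form = (1 - p_hat) * lambda_hat)
```

The implied mean is below \(\lambda\) because the drinking days pull the average number of manuscripts down.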

11.3 Over-dispersed outcomes

All statistical models omit something. The question is only whether that something is necessary for making useful inferences. One symptom that something important has been omitted from a count model is over-dispersion. The variance of a variable is sometimes called its dispersion. For a counting process like a binomial, the variance is a function of the same parameters as the expected value. For example, the expected value of a binomial is \(np\) and its variance is \(np (1 - p)\). When the observed variance exceeds this amount—after conditioning on all the predictor variables—this implies that some omitted variable is producing additional dispersion in the observed counts.

What could go wrong, if we ignore the over-dispersion? Ignoring it can lead to all of the same problems as ignoring any predictor variable. Heterogeneity in counts can be a confound, hiding effects of interest or producing spurious inferences. (p. 346, emphasis in the original)

In this chapter we’ll cope with the problem using continuous mixture models—first the beta-binomial and then the gamma-Poisson (a.k.a. negative binomial).
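To get a feel for what over-dispersion looks like, here is a small simulation sketch in base R. It compares plain binomial counts with counts whose success probability varies from case to case. The number of trials (20), the beta(2.5, 2.5) distribution for the case-level probabilities, and all object names are our own illustrative choices, not values from the text.

```r
set.seed(11)

n_sim <- 1e4   # number of simulated cases
size  <- 20    # trials per case
pbar  <- 0.5   # average probability of success

# ordinary binomial: every case shares the same probability
y_binom <- rbinom(n_sim, size = size, prob = pbar)

# over-dispersed version: each case draws its own probability first
p_i         <- rbeta(n_sim, shape1 = 2.5, shape2 = 2.5)  # mean of .5
y_betabinom <- rbinom(n_sim, size = size, prob = p_i)

# the binomial variance should sit near n * p * (1 - p);
# the mixture should exceed it by a wide margin
c(theory        = size * pbar * (1 - pbar),
  binomial      = var(y_binom),
  beta_binomial = var(y_betabinom))
```

Both sets of counts have about the same mean, but only the first has a variance near \(np (1 - p)\). The extra spread in the second comes entirely from the unobserved variation in the probabilities.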

11.3.1 Beta-binomial.

A beta-binomial model assumes that each binomial count observation has its own probability of success. The model estimates the distribution of probabilities of success across cases, instead of a single probability of success. And predictor variables change the shape of this distribution, instead of directly determining the probability of each success. (p. 347, emphasis in the original)

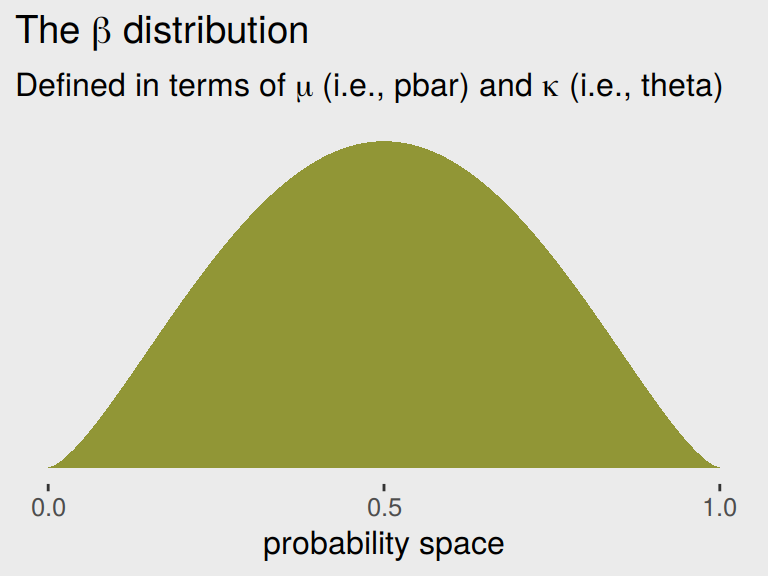

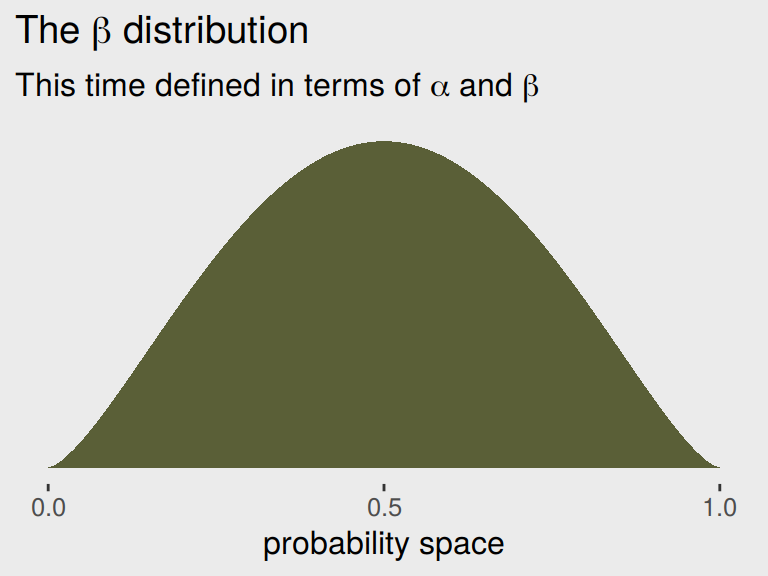

Unfortunately, we need to digress. As it turns out, there are multiple ways to parameterize the beta distribution and we’ve run square into two. In the text, McElreath wrote the beta distribution has two parameters, an average probability \(\overline{p}\) and a shape parameter \(\theta\). In his R code 11.24, which we’ll reproduce in a bit, he demonstrated that parameterization with the rethinking::dbeta2() function. The nice thing about this parameterization is how intuitive the pbar parameter is. If you want a beta with an average of .2, you set pbar <- .2. If you want the distribution to be more or less certain, make the theta argument more or less large, respectively.

However, the beta density is typically defined in terms of \(\alpha\) and \(\beta\). If you denote the data as \(y\), this follows the form

\[\text{Beta} (y | \alpha, \beta) = \frac{y^{\alpha - 1} (1 - y)^{\beta - 1}}{\text B (\alpha, \beta)}\]

which you can verify in the Continuous Distributions on [0, 1] section of the Stan Functions Reference. In the formula, \(\text B\) stands for the Beta function, which computes a normalizing constant; you can learn about it in the Mathematical Functions section of the Stan reference manual. This is all important to be aware of because when we defined that beta prior for zi in the last model, it was using this parameterization. Also, if you look at the base R dbeta() function, you’ll learn it takes two parameters, shape1 and shape2. Those uncreatively-named parameters are the same \(\alpha\) and \(\beta\) from the density, above. They do not correspond to the pbar and theta parameters of McElreath’s rethinking::dbeta2().

McElreath had good reason for using dbeta2(). Beta’s typical \(\alpha\) and \(\beta\) parameters aren’t the most intuitive to use; the parameters in McElreath’s dbeta2() are much nicer. But if you’re willing to dive deeper, it turns out you can find the mean of a beta distribution in terms of \(\alpha\) and \(\beta\) like this

\[\mu = \frac{\alpha}{\alpha + \beta}\]

We can talk about the spread of the distribution, sometimes called \(\kappa\), in terms of \(\alpha\) and \(\beta\) like this

\[\kappa = \alpha + \beta\]

With \(\mu\) and \(\kappa\) in hand, we can even find the \(SD\) of a beta distribution with

\[\sigma = \sqrt{\mu (1 - \mu) / (\kappa + 1)}\]
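As a minimal sketch of these three formulas in base R, the block below starts from the beta(2, 2) prior we used for zi earlier, computes \(\mu\), \(\kappa\), and \(\sigma\), and then checks \(\sigma\) against the usual variance formula for the beta distribution, \(\alpha \beta / [(\alpha + \beta)^2 (\alpha + \beta + 1)]\). The object names `a`, `b`, and `sigma_check` are ours.

```r
# shape parameters of the beta(2, 2) prior used for zi
a <- 2
b <- 2

# mean, spread, and standard deviation from the formulas above
mu    <- a / (a + b)
kappa <- a + b
sigma <- sqrt(mu * (1 - mu) / (kappa + 1))

# the same standard deviation from the usual beta variance formula
sigma_check <- sqrt(a * b / ((a + b)^2 * (a + b + 1)))

c(mu = mu, kappa = kappa, sigma = sigma, sigma_check = sigma_check)

# and back again: mu and kappa recover the original shape parameters
c(a = mu * kappa, b = (1 - mu) * kappa)
```

The two routes to the standard deviation agree, which is a handy check whenever you switch between the two parameterizations.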

I explicate all this because McElreath’s pbar is \(\mu = \frac{\alpha}{\alpha + \beta}\) and his theta is \(\kappa = \alpha + \beta\). This is great news because it means that we can understand what McElreath did with his dbeta2() function in terms of base R’s dbeta() function. Which also means that we can understand the distribution of the beta parameters used in brms::brm(). To demonstrate, let’s walk through McElreath’s R code 11.25.

pbar <- 0.5

theta <- 5

ggplot(data = tibble(x = seq(from = 0, to = 1, by = .01))) +

geom_ribbon(aes(x = x,

ymin = 0,

ymax = rethinking::dbeta2(x, pbar, theta)),

fill = canva_pal("Green fields")(4)[1]) +

scale_x_continuous("probability space", breaks = c(0, .5, 1)) +

scale_y_continuous(NULL, breaks = NULL) +

ggtitle(expression(paste("The ", beta, " distribution")),

subtitle = expression(paste("Defined in terms of ", mu, " (i.e., pbar) and ", kappa, " (i.e., theta)"))) +

theme_hc() +

theme(plot.background = element_rect(fill = "grey92"))

In his 2014 text, Doing Bayesian Data Analysis, Kruschke provided code for a convenience function that will take pbar and theta as inputs and return the corresponding \(\alpha\) and \(\beta\) values. Here’s the function:

betaABfromMeanKappa <- function(mean, kappa) {

if (mean <= 0 | mean >= 1) stop("must have 0 < mean < 1")

if (kappa <= 0) stop("kappa must be > 0")

a <- mean * kappa

b <- (1.0 - mean) * kappa

return(list(a = a, b = b))

}

Now we can use Kruschke’s betaABfromMeanKappa() to find the \(\alpha\) and \(\beta\) values corresponding to pbar and theta.

betaABfromMeanKappa(mean = pbar, kappa = theta)

## $a

## [1] 2.5

##

## $b

## [1] 2.5

And finally, we can double check that all of this works. Here’s the same distribution but defined in terms of \(\alpha\) and \(\beta\).

ggplot(data = tibble(x = seq(from = 0, to = 1, by = .01))) +

geom_ribbon(aes(x = x,

ymin = 0,

ymax = dbeta(x, 2.5, 2.5)),

fill = canva_pal("Green fields")(4)[4]) +

scale_x_continuous("probability space", breaks = c(0, .5, 1)) +

scale_y_continuous(NULL, breaks = NULL) +

ggtitle(expression(paste("The ", beta, " distribution")),

subtitle = expression(paste("This time defined in terms of ", alpha, " and ", beta))) +

theme_hc() +

theme(plot.background = element_rect(fill = "grey92"))

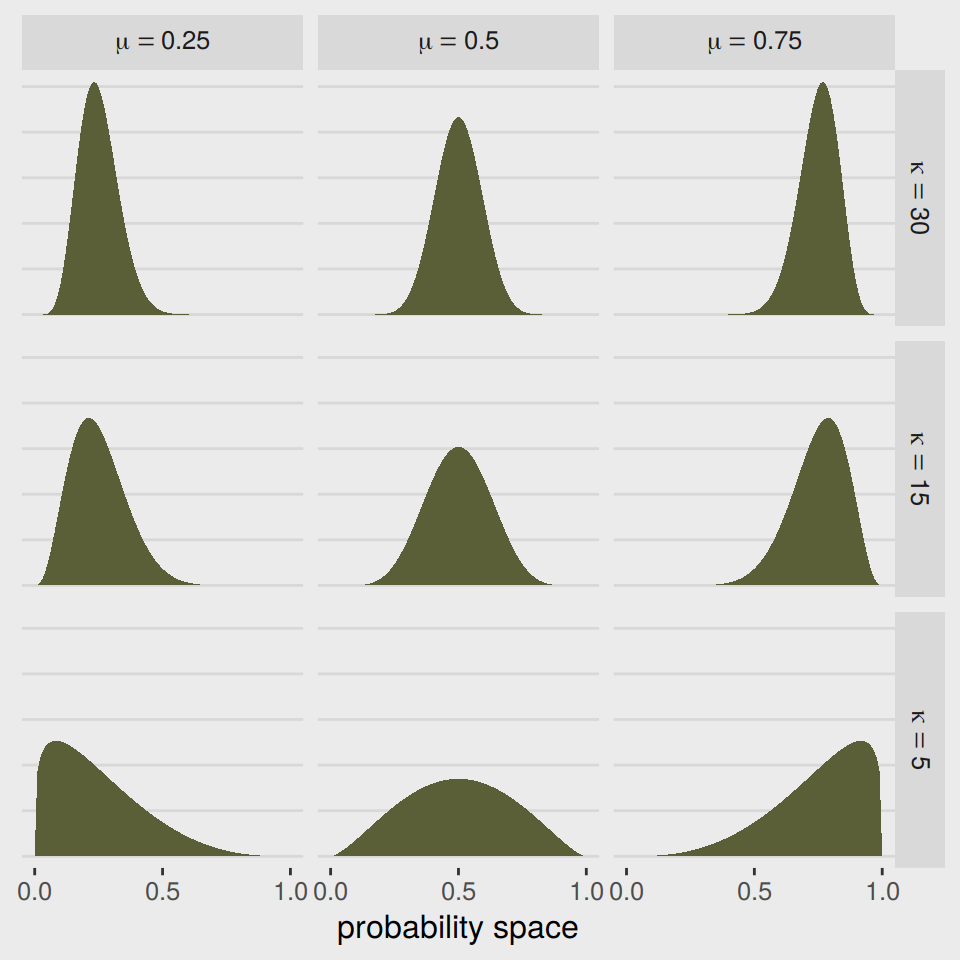

McElreath encouraged us to “explore different values for pbar and theta” (p. 348). Here’s a grid of plots with pbar = c(.25, .5, .75) and theta = c(5, 15, 30).

# data

tibble(pbar = c(.25, .5, .75)) %>%

expand(pbar, theta = c(5, 15, 30)) %>%

expand(nesting(pbar, theta), x = seq(from = 0, to = 1, length.out = 100)) %>%

mutate(density = rethinking::dbeta2(x, pbar, theta),

mu = str_c("mu == ", pbar %>% str_remove(., "0")),

kappa = str_c("kappa == ", theta)) %>%

mutate(kappa = factor(kappa, levels = c("kappa == 30", "kappa == 15", "kappa == 5"))) %>%

# plot

ggplot() +

geom_ribbon(aes(x = x,

ymin = 0,

ymax = density),

fill = canva_pal("Green fields")(4)[4]) +

scale_x_continuous("probability space", breaks = c(0, .5, 1)) +

scale_y_continuous(NULL, labels = NULL) +

theme_hc() +

theme(plot.background = element_rect(fill = "grey92"),

axis.ticks.y = element_blank()) +

facet_grid(kappa ~ mu, labeller = label_parsed)

If you’d like to see how to make a similar plot in terms of \(\alpha\) and \(\beta\), see the chapter 6 document of my project recoding Kruschke’s text into tidyverse and brms code.

But remember, we’re not fitting a beta model. We’re using the beta-binomial. “We’re going to bind our linear model to \(\overline p\), so that changes in predictor variables change the central tendency of the distribution” (p. 348). The statistical model we’ll be fitting follows the form

\[\begin{align*} \text{admit}_i & \sim \text{BetaBinomial} (n_i, \overline p_i, \theta)\\ \text{logit} (\overline p_i) & = \alpha \\ \alpha & \sim \text{Normal} (0, 2) \\ \theta & \sim \text{Exponential} (1) \end{align*}\]

Here the size \(n = \text{applications}\). In case you’re confused, yes, our statistical model is not the one McElreath presented at the top of page 348 in the text. If you look closely, the statistical formula he presented does not match up with the one implied by his R code 11.26. Our statistical formula and the brm() model we’ll be fitting, below, correspond to his R code 11.26.

Before we fit, we have an additional complication. The beta-binomial distribution is not implemented in brms at this time. However, brms versions 2.2.0 and above allow users to define custom distributions. You can find the details in Bürkner’s vignette, Define Custom Response Distributions with brms. Happily, Bürkner even used the beta-binomial distribution as the exemplar in the vignette.

Before we get carried away, let’s load the data.

library(rethinking)

data(UCBadmit)

d <- UCBadmit

Unload rethinking and load brms.

rm(UCBadmit)

detach(package:rethinking, unload = T)

library(brms)

I’m not going to go into great detail explaining the ins and outs of making custom distributions for brm(). You’ve got Bürkner’s vignette for that. For our purposes, we need three preparatory steps. First, we need to use the custom_family() function to define the name and parameters of the beta-binomial distribution for use in brm(). Second, we have to define some functions for Stan which are not defined in Stan itself. We’ll save them as stan_funs. Third, we’ll make a stanvar() statement which will allow us to pass our stan_funs to brm().

beta_binomial2 <-

custom_family(

"beta_binomial2", dpars = c("mu", "phi"),

links = c("logit", "log"), lb = c(NA, 0),

type = "int", vars = "trials[n]"

)

stan_funs <- "

real beta_binomial2_lpmf(int y, real mu, real phi, int T) {

return beta_binomial_lpmf(y | T, mu * phi, (1 - mu) * phi);

}

int beta_binomial2_rng(real mu, real phi, int T) {

return beta_binomial_rng(T, mu * phi, (1 - mu) * phi);

}

"

stanvars <-

stanvar(scode = stan_funs, block = "functions")

With that out of the way, we’re almost ready to test this baby out. Before we do, a point of clarification: What McElreath referred to as the shape parameter, \(\theta\), Bürkner called the precision parameter, \(\phi\). In our exposition, above, we followed Kruschke’s convention and called it \(\kappa\). These are all the same thing. Perhaps less confusingly, what McElreath called the pbar parameter, \(\bar{p}\), Bürkner simply called \(\mu\).
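If the Stan functions above feel opaque, the reparameterization they perform can be sketched in plain R. The helper below, `dbetabinom2()`, is hypothetical: it is not part of brms, rethinking, or base R. It just mirrors what `beta_binomial2_lpmf()` does, converting \(\mu\) and \(\phi\) to \(\alpha = \mu \phi\) and \(\beta = (1 - \mu) \phi\) and then evaluating the standard beta-binomial probability mass function. The example values for `size`, `mu`, and `phi` are arbitrary.

```r
# hypothetical helper for illustration only
dbetabinom2 <- function(y, size, mu, phi, log = FALSE) {
  a <- mu * phi          # alpha in the conventional parameterization
  b <- (1 - mu) * phi    # beta in the conventional parameterization
  # log of choose(size, y) * B(y + a, size - y + b) / B(a, b)
  ll <- lchoose(size, y) + lbeta(y + a, size - y + b) - lbeta(a, b)
  if (log) ll else exp(ll)
}

# probabilities for every possible count out of 10 trials
size <- 10
pmf  <- dbetabinom2(0:size, size = size, mu = 0.4, phi = 3)

# they should sum to 1, and the mean should equal size * mu
c(total = sum(pmf), mean = sum(0:size * pmf))
```

Working on the log scale with `lchoose()` and `lbeta()` keeps the computation stable when the number of trials is large, which matters for data like UCBadmit where the application counts run into the hundreds.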

b11.5 <-

brm(data = d,

family = beta_binomial2, # here's our custom likelihood

admit | trials(applications) ~ 1,

prior = c(prior(normal(0, 2), class = Intercept),

prior(exponential(1), class = phi)),

iter = 4000, warmup = 1000, cores = 2, chains = 2,

stanvars = stanvars, # note our `stanvars`

seed = 11)

Success, our results look a lot like those in the text!

print(b11.5)

## Family: beta_binomial2

## Links: mu = logit; phi = identity

## Formula: admit | trials(applications) ~ 1

## Data: d (Number of observations: 12)

## Samples: 2 chains, each with iter = 4000; warmup = 1000; thin = 1;

## total post-warmup samples = 6000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

## Intercept -0.37 0.30 -0.98 0.22 4277 1.00

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

## phi 2.77 0.94 1.27 4.93 4191 1.00

##

## Samples were drawn using sampling(NUTS). For each parameter, Eff.Sample

## is a crude measure of effective sample size, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).

Here’s what the corresponding posterior_samples() data object looks like.

post <- posterior_samples(b11.5)

head(post)

## b_Intercept phi lp__

## 1 -0.4526290 2.665758 -70.08453

## 2 -0.5550188 3.701943 -70.49722

## 3 -0.3478843 1.218215 -72.54718

## 4 -0.6537474 1.939885 -71.10193

## 5 -0.1486050 3.944223 -71.14498

## 6 -0.2648813 2.808775 -70.11757

Here’s our median and percentile-based 95% interval.

post %>%

tidybayes::median_qi(inv_logit_scaled(b_Intercept)) %>%

mutate_if(is.double, round, digits = 3)## inv_logit_scaled(b_Intercept) .lower .upper .width .point .interval

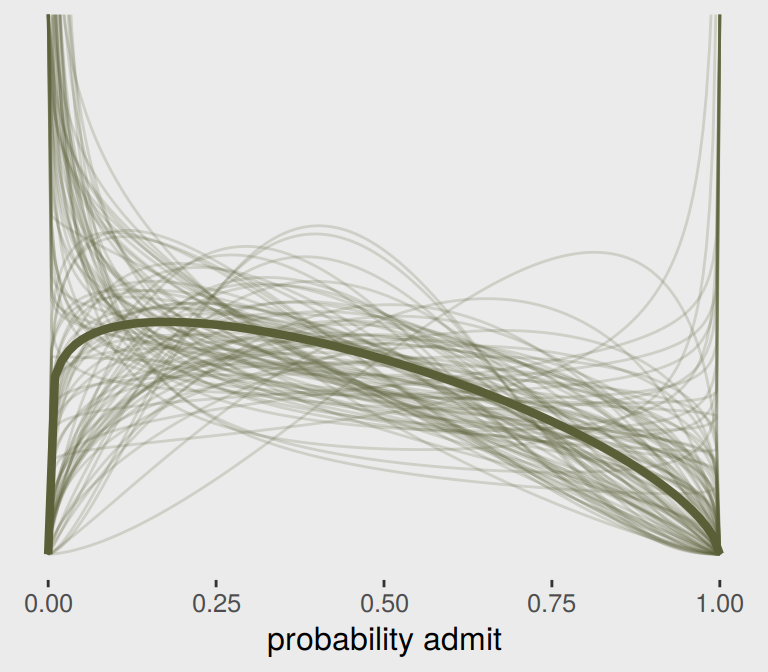

## 1 0.408 0.274 0.554 0.95 median qiTo stay within the tidyverse while making the many thin lines in Figure 11.5.a, we’re going to need to do a bit of data processing. First, we’ll want a variable to index the rows in post (i.e., to index the posterior draws). And we’ll want to convert the b_Intercept to the \(\bar{p}\) metric with the inv_logit_scaled() function. Then we’ll use sample_n() to randomly draw a subset of the posterior draws. Then with the expand() function, we’ll insert a tightly-spaced sequence of x values ranging between 0 and 1–the parameter space of beta distribution. Finally, we’ll use pmap_dbl() to compute the density values for the rethinking::dbeta2 distribution corresponding to the unique combination of x, p_bar, and phi values in each row.

set.seed(11)

lines <-

post %>%

mutate(iter = 1:n(),

p_bar = inv_logit_scaled(b_Intercept)) %>%

sample_n(size = 100) %>%

expand(nesting(iter, p_bar, phi),

x = seq(from = 0, to = 1, by = .005)) %>%

mutate(density = pmap_dbl(list(x, p_bar, phi), rethinking::dbeta2))

str(lines)

## Classes 'tbl_df', 'tbl' and 'data.frame': 20100 obs. of 5 variables:

## $ iter : int 6 6 6 6 6 6 6 6 6 6 ...

## $ p_bar : num 0.434 0.434 0.434 0.434 0.434 ...

## $ phi : num 2.81 2.81 2.81 2.81 2.81 ...

## $ x : num 0 0.005 0.01 0.015 0.02 0.025 0.03 0.035 0.04 0.045 ...

## $ density: num 0 0.646 0.75 0.817 0.868 ...

All that was just for the thin lines. To make the thicker line for the posterior mean, we’ll get tricky with stat_function().

lines %>%

ggplot(aes(x = x, y = density)) +

stat_function(fun = rethinking::dbeta2,

args = list(prob = mean(inv_logit_scaled(post[, 1])),

theta = mean(post[, 2])),

color = canva_pal("Green fields")(4)[4],

size = 1.5) +

geom_line(aes(group = iter),

alpha = .2, color = canva_pal("Green fields")(4)[4]) +

scale_y_continuous(NULL, breaks = NULL) +

coord_cartesian(ylim = 0:3) +

xlab("probability admit") +

theme_hc() +

theme(plot.background = element_rect(fill = "grey92"))

There are other ways to do this. For ideas, check out my blog post Make rotated Gaussians, Kruschke style.

Before we can do our variant of Figure 11.5.b, we’ll need to define a few more custom functions. The log_lik_beta_binomial2() and predict_beta_binomial2() functions are required for brms::predict() to work with our family = beta_binomial2 brmsfit object. Similarly, fitted_beta_binomial2() is required for brms::fitted() to work properly. And before all that, we need to throw in a line with the expose_functions() function. Just go with it.

expose_functions(b11.5, vectorize = TRUE)

# required to use `predict()`

log_lik_beta_binomial2 <-

function(i, draws) {

mu <- draws$dpars$mu[, i]

phi <- draws$dpars$phi

N <- draws$data$trials[i]

y <- draws$data$Y[i]

beta_binomial2_lpmf(y, mu, phi, N)

}

predict_beta_binomial2 <-

function(i, draws, ...) {

mu <- draws$dpars$mu[, i]

phi <- draws$dpars$phi

N <- draws$data$trials[i]

beta_binomial2_rng(mu, phi, N)

}

# required to use `fitted()`

fitted_beta_binomial2 <-

function(draws) {

mu <- draws$dpars$mu

trials <- draws$data$trials

trials <- matrix(trials, nrow = nrow(mu), ncol = ncol(mu), byrow = TRUE)

mu * trials

}

With those intermediary steps out of the way, we’re ready to make Figure 11.5.b.

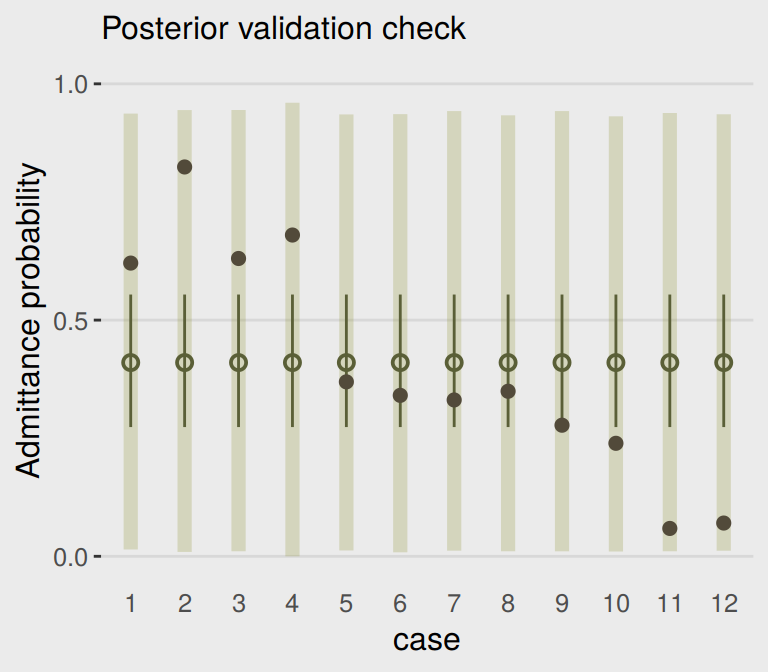

# the prediction intervals

predict(b11.5) %>%

as_tibble() %>%

transmute(ll = Q2.5,

ul = Q97.5) %>%

# the fitted intervals

bind_cols(

fitted(b11.5) %>%

as_tibble()

) %>%

# the original data used to fit the model

bind_cols(b11.5$data) %>%

mutate(case = 1:12) %>%

# plot

ggplot(aes(x = case)) +

geom_linerange(aes(ymin = ll / applications,

ymax = ul / applications),

color = canva_pal("Green fields")(4)[1],

size = 2.5, alpha = 1/4) +

geom_pointrange(aes(ymin = Q2.5 / applications,

ymax = Q97.5 / applications,

y = Estimate/applications),

color = canva_pal("Green fields")(4)[4],

size = 1/2, shape = 1) +

geom_point(aes(y = admit/applications),

color = canva_pal("Green fields")(4)[2],

size = 2) +

scale_x_continuous(breaks = 1:12) +

scale_y_continuous(breaks = c(0, .5, 1)) +

coord_cartesian(ylim = 0:1) +

labs(subtitle = "Posterior validation check",

y = "Admittance probability") +

theme_hc() +

theme(plot.background = element_rect(fill = "grey92"),

axis.ticks.x = element_blank(),

legend.position = "none")

As in the text, the raw data are consistent with the prediction intervals. But those intervals are so incredibly wide, they’re hardly an endorsement of the model. Once we learn about hierarchical models, we’ll be able to do much better.

11.3.2 Negative-binomial or gamma-Poisson.

Recall the Poisson distribution presumes \(\sigma^2\) equals \(\mu\). The negative binomial distribution relaxes this assumption and presumes “each Poisson count observation has its own rate. It estimates the shape of a gamma distribution to describe the Poisson rates across cases” (p. 350).
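To see the “each observation has its own rate” idea in action, here is a simulation sketch in base R. It draws a gamma-distributed rate for every case, feeds those rates into `rpois()`, and compares the result with direct draws from `rnbinom()` in its `mu` and `size` parameterization. The values `mu = 3` and `shape = 1` are illustrative, and we are assuming the usual mean-and-shape form of the negative binomial, for which the variance is \(\mu + \mu^2 / \text{shape}\).

```r
set.seed(11)

n_sim <- 1e5
mu    <- 3   # average rate across cases
shape <- 1   # smaller values mean more heterogeneity in the rates

# step 1: a gamma-distributed rate for each case (mean mu, variance mu^2 / shape)
lambda_i <- rgamma(n_sim, shape = shape, rate = shape / mu)

# step 2: a Poisson count for each case, given its own rate
y_mix <- rpois(n_sim, lambda = lambda_i)

# the same distribution drawn directly as a negative binomial
y_nb <- rnbinom(n_sim, size = shape, mu = mu)

rbind(mixture = c(mean = mean(y_mix), var = var(y_mix)),
      rnbinom = c(mean = mean(y_nb),  var = var(y_nb)),
      theory  = c(mean = mu,          var = mu + mu^2 / shape))
```

Both sets of draws should land near the theoretical mean and variance, and the variance should be well above the mean, which is exactly what a plain Poisson cannot accommodate.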

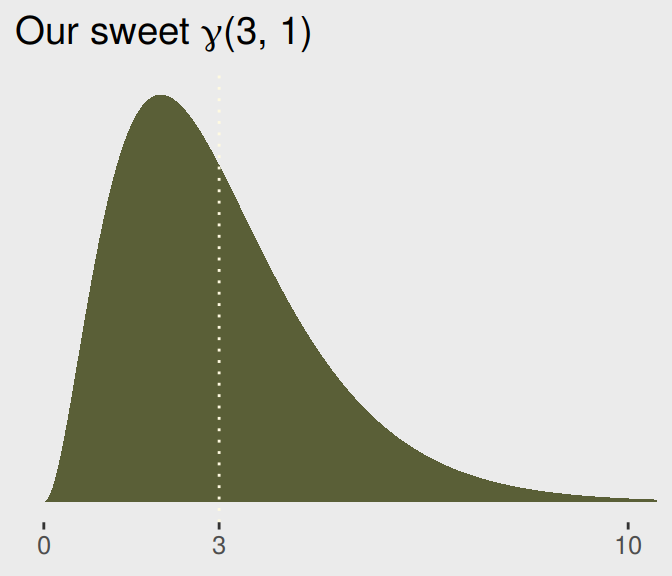

Here’s a look at the \(\gamma\) distribution.

mu <- 3

theta <- 1

ggplot(data = tibble(x = seq(from = 0, to = 12, by = .01)),

aes(x = x)) +

geom_ribbon(aes(ymin = 0,

ymax = rethinking::dgamma2(x, mu, theta)),

color = "transparent",

fill = canva_pal("Green fields")(4)[4]) +

geom_vline(xintercept = mu, linetype = 3,

color = canva_pal("Green fields")(4)[3]) +

scale_x_continuous(NULL, breaks = c(0, mu, 10)) +

scale_y_continuous(NULL, breaks = NULL) +

coord_cartesian(xlim = 0:10) +

ggtitle(expression(paste("Our sweet ", gamma, "(3, 1)"))) +

theme_hc() +

theme(plot.background = element_rect(fill = "grey92"))

11.3.2.1 Bonus: Let’s fit a negative-binomial model.

McElreath didn’t give an example of negative-binomial regression in the text. Here’s one with the UCBadmit data.

brm(data = d, family = negbinomial,

admit ~ 1 + applicant.gender,

prior = c(prior(normal(0, 10), class = Intercept),

prior(normal(0, 1), class = b),

prior(gamma(0.01, 0.01), class = shape)), # this is the brms default

iter = 4000, warmup = 1000, cores = 2, chains = 2,

seed = 11) %>%

print()

## Family: negbinomial

## Links: mu = log; shape = identity

## Formula: admit ~ 1 + applicant.gender

## Data: d (Number of observations: 12)

## Samples: 2 chains, each with iter = 4000; warmup = 1000; thin = 1;

## total post-warmup samples = 6000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

## Intercept 4.70 0.40 4.00 5.57 3773 1.00

## applicant.gendermale 0.58 0.49 -0.41 1.56 4230 1.00

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

## shape 1.23 0.49 0.50 2.37 3852 1.00

##

## Samples were drawn using sampling(NUTS). For each parameter, Eff.Sample

## is a crude measure of effective sample size, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).

Since the negative-binomial model uses the log link, you need to exponentiate to get the estimates back into the count metric. E.g.,

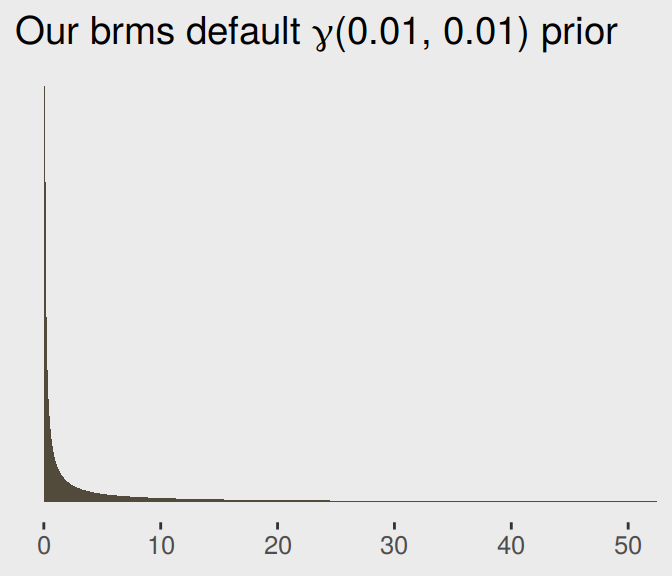

exp(4.7)

## [1] 109.9472

Also, you may have noticed we used the brms default prior(gamma(0.01, 0.01), class = shape) for the shape parameter. Here’s what that prior looks like.

ggplot(data = tibble(x = seq(from = 0, to = 60, by = .1)),

aes(x = x)) +

geom_ribbon(aes(ymin = 0,

ymax = dgamma(x, 0.01, 0.01)),

color = "transparent",

fill = canva_pal("Green fields")(4)[2]) +

scale_x_continuous(NULL) +

scale_y_continuous(NULL, breaks = NULL) +

coord_cartesian(xlim = 0:50) +

ggtitle(expression(paste("Our brms default ", gamma, "(0.01, 0.01) prior"))) +

theme_hc() +

theme(plot.background = element_rect(fill = "grey92"))
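To put rough numbers on that prior, here is a short base R sketch using the standard moments of a gamma distribution in the shape and rate parameterization (mean = shape / rate, SD = sqrt(shape) / rate). The object names and the cut points passed to `pgamma()` are our own arbitrary choices.

```r
shape <- 0.01
rate  <- 0.01

# mean and standard deviation implied by the prior
prior_mean <- shape / rate
prior_sd   <- sqrt(shape) / rate

c(mean = prior_mean, sd = prior_sd)

# share of the prior mass falling below a few cut points
pgamma(c(0.01, 1, 50), shape = shape, rate = rate)
```

A mean of 1 paired with a standard deviation of 10 is what gives the prior its shape in the plot: a tall spike near zero followed by a long, thin right tail.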

11.3.3 Over-dispersion, entropy, and information criteria.

Both the beta-binomial and the gamma-Poisson models are maximum entropy for the same constraints as the regular binomial and Poisson. They just try to account for unobserved heterogeneity in probabilities and rates. So while they can be a lot harder to fit to data, they can be usefully conceptualized much like ordinary binomial and Poisson GLMs. So in terms of model comparison using information criteria, a beta-binomial model is a binomial model, and a gamma-Poisson (negative-binomial) is a Poisson model. (pp. 350–351)

Session info

sessionInfo()

## R version 3.6.0 (2019-04-26)

## Platform: x86_64-pc-linux-gnu (64-bit)

## Running under: Ubuntu 18.04.2 LTS

##

## Matrix products: default

## BLAS: /opt/R/3.6.0/lib/R/lib/libRblas.so

## LAPACK: /opt/R/3.6.0/lib/R/lib/libRlapack.so

##

## locale:

## [1] LC_CTYPE=en_US.UTF-8 LC_NUMERIC=C LC_TIME=en_US.UTF-8

## [4] LC_COLLATE=en_US.UTF-8 LC_MONETARY=en_US.UTF-8 LC_MESSAGES=en_US.UTF-8

## [7] LC_PAPER=en_US.UTF-8 LC_NAME=C LC_ADDRESS=C

## [10] LC_TELEPHONE=C LC_MEASUREMENT=en_US.UTF-8 LC_IDENTIFICATION=C

##

## attached base packages:

## [1] grid parallel stats graphics grDevices utils datasets methods base

##

## other attached packages:

## [1] rethinking_1.59 loo_2.1.0 wesanderson_0.3.6 ghibli_0.2.0 extrafont_0.17

## [6] hrbrthemes_0.6.0 broom_0.5.2 rcartocolor_2.0.0 GGally_1.4.0 bayesplot_1.7.0

## [11] fiftystater_1.0.1 ggrepel_0.8.1 tidybayes_1.1.0 gridExtra_2.3 ggthemes_4.2.0

## [16] forcats_0.4.0 stringr_1.4.0 dplyr_0.8.1 purrr_0.3.2 readr_1.3.1

## [21] tidyr_0.8.3 tibble_2.1.3 tidyverse_1.2.1 brms_2.9.0 Rcpp_1.0.1

## [26] rstan_2.18.2 StanHeaders_2.18.1 ggplot2_3.1.1

##

## loaded via a namespace (and not attached):

## [1] pacman_0.5.1 utf8_1.1.4 ggstance_0.3.1 tidyselect_0.2.5

## [5] htmlwidgets_1.3 munsell_0.5.0 codetools_0.2-16 DT_0.7

## [9] future_1.13.0 miniUI_0.1.1.1 withr_2.1.2 Brobdingnag_1.2-6

## [13] colorspace_1.4-1 highr_0.8 knitr_1.23 rstudioapi_0.10

## [17] stats4_3.6.0 Rttf2pt1_1.3.7 listenv_0.7.0 labeling_0.3

## [21] mnormt_1.5-5 bridgesampling_0.6-0 rprojroot_1.3-2 coda_0.19-2

## [25] vctrs_0.1.0 generics_0.0.2 xfun_0.7 R6_2.4.0

## [29] markdown_1.0 HDInterval_0.2.0 reshape_0.8.8 assertthat_0.2.1

## [33] promises_1.0.1 scales_1.0.0 gtable_0.3.0 globals_0.12.4

## [37] processx_3.3.1 rlang_0.3.4 zeallot_0.1.0 extrafontdb_1.0

## [41] lazyeval_0.2.2 inline_0.3.15 yaml_2.2.0 reshape2_1.4.3

## [45] abind_1.4-5 modelr_0.1.4 threejs_0.3.1 crosstalk_1.0.0

## [49] backports_1.1.4 httpuv_1.5.1 rsconnect_0.8.13 tools_3.6.0

## [53] psych_1.8.12 bookdown_0.11 RColorBrewer_1.1-2 ggridges_0.5.1

## [57] plyr_1.8.4 base64enc_0.1-3 ps_1.3.0 prettyunits_1.0.2

## [61] zoo_1.8-6 haven_2.1.0 magrittr_1.5 colourpicker_1.0

## [65] mvtnorm_1.0-10 matrixStats_0.54.0 hms_0.4.2 shinyjs_1.0

## [69] mime_0.7 evaluate_0.14 arrayhelpers_1.0-20160527 xtable_1.8-4

## [73] shinystan_2.5.0 readxl_1.3.1 rstantools_1.5.1 compiler_3.6.0

## [77] maps_3.3.0 crayon_1.3.4 htmltools_0.3.6 later_0.8.0

## [81] lubridate_1.7.4 MASS_7.3-51.4 Matrix_1.2-17 cli_1.1.0

## [85] igraph_1.2.4.1 pkgconfig_2.0.2 foreign_0.8-71 xml2_1.2.0

## [89] svUnit_0.7-12 dygraphs_1.1.1.6 rvest_0.3.4 callr_3.2.0

## [93] digest_0.6.19 rmarkdown_1.13 cellranger_1.1.0 gdtools_0.1.8

## [97] shiny_1.3.2 gtools_3.8.1 nlme_3.1-140 jsonlite_1.6

## [101] mapproj_1.2.6 viridisLite_0.3.0 fansi_0.4.0 pillar_1.4.1

## [105] lattice_0.20-38 httr_1.4.0 pkgbuild_1.0.3 glue_1.3.1

## [109] xts_0.11-2 shinythemes_1.1.2 pander_0.6.3 stringi_1.4.3