Chapter 5 Tensors

Last update: Sun Oct 25 13:00:41 2020 -0500 (265c0b3c1)

In this chapter, we describe the most important PyTorch methods.

5.1 Tensor data types

#> torch.float32

# change default data type to float64

torch$set_default_dtype(torch$float64)

torch$tensor(list(1.2, 3))$dtype # a new floating point tensor

#> torch.float64

5.1.1 Major tensor types

There are five major types of tensors in PyTorch: byte, float, double, long, and boolean.

library(rTorch)

byte <- torch$ByteTensor(3L, 3L)

float <- torch$FloatTensor(3L, 3L)

double <- torch$DoubleTensor(3L, 3L)

long <- torch$LongTensor(3L, 3L)

boolean <- torch$BoolTensor(5L, 5L)

message("byte tensor")

#> byte tensor

byte

#> tensor([[0, 0, 0],

#> [0, 0, 0],

#> [0, 0, 0]], dtype=torch.uint8)

message("float tensor")

#> float tensor

float

#> tensor([[0., 0., 0.],

#> [0., 0., 0.],

#> [0., 0., 0.]], dtype=torch.float32)

5.1.2 Example: A 4D tensor

A 4D tensor, like the ones used for the MNIST handwritten digit recognition dataset:
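The chunk that creates mnist_4d is not shown here. A minimal sketch, assuming the tensor is simply allocated with the shape reported in the output (the constructor and dtype are assumptions):

```r
library(rTorch)

# Assumption: a float32 tensor with the shape shown in the output
# (60000 images, 3 channels, 28 x 28 pixels). This allocates roughly
# 564 MB, so reduce the first dimension when experimenting.
mnist_4d <- torch$zeros(60000L, 3L, 28L, 28L, dtype = torch$float32)

# The number of elements is the product of the dimensions
n_expected <- prod(c(60000, 3, 28, 28))
n_expected
```

The value of n_expected should agree with what numel() reports for the tensor.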

message("size")

#> size

mnist_4d$size()

#> torch.Size([60000, 3, 28, 28])

message("length")

#> length

length(mnist_4d)

#> [1] 141120000

message("shape, like in numpy")

#> shape, like in numpy

mnist_4d$shape

#> torch.Size([60000, 3, 28, 28])

message("number of elements")

#> number of elements

mnist_4d$numel()

#> [1] 141120000

5.1.3 Example: A 3D tensor

Given a 3D tensor:
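The chunk that creates this tensor is not included. A sketch that would give a tensor of the same shape, assuming an uninitialized float tensor (the name ft3d is hypothetical):

```r
library(rTorch)

# Assumption: an uninitialized 4 x 3 x 2 float tensor. The printed values
# are whatever happened to be in memory, so they differ between runs.
ft3d <- torch$FloatTensor(4L, 3L, 2L)

ft3d$dim()     # number of dimensions
ft3d$numel()   # total number of elements
```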

#> tensor([[[1.1390e+12, 3.0700e-41],

#> [1.4555e+12, 3.0700e-41],

#> [1.1344e+12, 3.0700e-41]],

#>

#> [[4.7256e+10, 3.0700e-41],

#> [4.7258e+10, 3.0700e-41],

#> [1.0075e+12, 3.0700e-41]],

#>

#> [[1.0075e+12, 3.0700e-41],

#> [1.0075e+12, 3.0700e-41],

#> [1.0075e+12, 3.0700e-41]],

#>

#> [[1.0075e+12, 3.0700e-41],

#> [4.7259e+10, 3.0700e-41],

#> [4.7263e+10, 3.0700e-41]]], dtype=torch.float32)

5.2 Arithmetic of tensors

5.2.1 Add tensors

# add a scalar to a tensor

# 3x5 matrix uniformly distributed between 0 and 1

mat0 <- torch$FloatTensor(3L, 5L)$uniform_(0L, 1L)

mat0 + 0.1

#> tensor([[0.9645, 0.6238, 0.9326, 0.3023, 0.1448],

#> [0.2610, 0.1987, 0.5089, 0.9776, 0.5261],

#> [0.2727, 0.5670, 0.8338, 0.4297, 0.7935]], dtype=torch.float32)

5.2.2 Add tensor elements

# fill a 3x5 matrix with 0.1

mat1 <- torch$FloatTensor(3L, 5L)$uniform_(0.1, 0.1)

print(mat1)

#> tensor([[0.1000, 0.1000, 0.1000, 0.1000, 0.1000],

#> [0.1000, 0.1000, 0.1000, 0.1000, 0.1000],

#> [0.1000, 0.1000, 0.1000, 0.1000, 0.1000]], dtype=torch.float32)

# a vector with all ones

mat2 <- torch$FloatTensor(5L)$uniform_(1, 1)

print(mat2)

#> tensor([1., 1., 1., 1., 1.], dtype=torch.float32)

# add element (1,1) to another tensor

mat1[1, 1] + mat2

#> tensor([1.1000, 1.1000, 1.1000, 1.1000, 1.1000], dtype=torch.float32)

Add two tensors using the function add():
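The chunks for the two additions in this subsection are not shown. A minimal sketch, assuming two random 5 x 4 tensors (the names x and y are hypothetical), covering both the function form and the R generic:

```r
library(rTorch)

# Assumption: two random 5 x 4 tensors
x <- torch$rand(5L, 4L)
y <- torch$rand(5L, 4L)

sum_fn  <- torch$add(x, y)   # function form
sum_gen <- x + y             # R generic +

# Both forms should produce the same tensor
torch$equal(sum_fn, sum_gen)
```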

#> tensor([[0.4604, 0.8114, 0.9630, 0.8070],

#> [0.6829, 0.4612, 0.1546, 1.1180],

#> [0.3134, 0.9399, 1.1217, 1.2846],

#> [1.9212, 1.3897, 0.5217, 0.3508],

#> [0.5801, 1.1733, 0.6494, 0.6771]])

Add two tensors using the generic +:

#> tensor([[0.4604, 0.8114, 0.9630, 0.8070],

#> [0.6829, 0.4612, 0.1546, 1.1180],

#> [0.3134, 0.9399, 1.1217, 1.2846],

#> [1.9212, 1.3897, 0.5217, 0.3508],

#> [0.5801, 1.1733, 0.6494, 0.6771]])

5.2.3 Multiply a tensor by a scalar

# Multiply tensor by scalar

tensor = torch$ones(4L, dtype=torch$float64)

scalar = np$float64(4.321)

print(scalar)

print(torch$scalar_tensor(scalar))

#> [1] 4.32

#> tensor(4.3210)

Notice that we used a NumPy function, np$float64(), to create the scalar object.

Multiply two tensors using the function mul:
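The multiplication chunks are not included here. A sketch of both forms, assuming a plain R number stands in for the NumPy scalar (the result names are hypothetical):

```r
library(rTorch)

tensor <- torch$ones(4L, dtype = torch$float64)
scalar <- 4.321   # assumption: a plain R double instead of np$float64()

# Function form: wrap the scalar in a 0-dimensional tensor, then multiply
prod_fn <- torch$mul(tensor, torch$scalar_tensor(scalar, dtype = torch$float64))

# Short form using the R generic *
prod_gen <- tensor * scalar

torch$allclose(prod_fn, prod_gen)
```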

#> tensor([4.3210, 4.3210, 4.3210, 4.3210])

Short version using R generics:

#> tensor([4.3210, 4.3210, 4.3210, 4.3210])

5.3 NumPy and PyTorch

NumPy is available as a module in rTorch, which means that as soon as rTorch is loaded, all the NumPy functions are available as well. We call a NumPy function by prefixing it with np$, as in np$a_function. Examples:
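The chunks behind the outputs in this section are not shown. A sketch consistent with them, assuming a random 3 x 5 array, a 5 x 10 array of zeros shifted by 0.1, and their dot product (the names syn0, syn1 and res are hypothetical):

```r
library(rTorch)

syn0 <- np$random$rand(3L, 5L)   # 3 x 5 array of uniform random numbers
syn1 <- np$zeros(c(5L, 10L))     # 5 x 10 array of zeros; shape given as an R vector
syn1 <- syn1 + 0.1               # add a scalar to every element

res <- np$dot(syn0, syn1)        # matrix product, 3 x 10
dim(res)
```

Because every entry of syn1 is 0.1, each row of the product repeats a single value: 0.1 times the sum of the corresponding row of syn0.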

#> [,1] [,2] [,3] [,4] [,5]

#> [1,] 0.303 0.475 0.00956 0.812 0.210

#> [2,] 0.546 0.607 0.19421 0.989 0.276

#> [3,] 0.240 0.158 0.53997 0.718 0.849

#> [,1] [,2] [,3] [,4] [,5] [,6] [,7] [,8] [,9] [,10]

#> [1,] 0 0 0 0 0 0 0 0 0 0

#> [2,] 0 0 0 0 0 0 0 0 0 0

#> [3,] 0 0 0 0 0 0 0 0 0 0

#> [4,] 0 0 0 0 0 0 0 0 0 0

#> [5,] 0 0 0 0 0 0 0 0 0 0

#> [,1] [,2] [,3] [,4] [,5] [,6] [,7] [,8] [,9] [,10]

#> [1,] 0.1 0.1 0.1 0.1 0.1 0.1 0.1 0.1 0.1 0.1

#> [2,] 0.1 0.1 0.1 0.1 0.1 0.1 0.1 0.1 0.1 0.1

#> [3,] 0.1 0.1 0.1 0.1 0.1 0.1 0.1 0.1 0.1 0.1

#> [4,] 0.1 0.1 0.1 0.1 0.1 0.1 0.1 0.1 0.1 0.1

#> [5,] 0.1 0.1 0.1 0.1 0.1 0.1 0.1 0.1 0.1 0.1

And the dot product of both:

#> [,1] [,2] [,3] [,4] [,5] [,6] [,7] [,8] [,9] [,10]

#> [1,] 0.181 0.181 0.181 0.181 0.181 0.181 0.181 0.181 0.181 0.181

#> [2,] 0.261 0.261 0.261 0.261 0.261 0.261 0.261 0.261 0.261 0.261

#> [3,] 0.250 0.250 0.250 0.250 0.250 0.250 0.250 0.250 0.250 0.250

5.3.1 Python tuples and R vectors

In NumPy, the shape of a multidimensional array is defined with a tuple. R has no tuples, so we use a vector instead.

In Python, we use a tuple, (5, 5) to indicate the shape of the array:

#> [[1. 1. 1. 1. 1.]

#> [1. 1. 1. 1. 1.]

#> [1. 1. 1. 1. 1.]

#> [1. 1. 1. 1. 1.]

#> [1. 1. 1. 1. 1.]]

In R, we use a vector c(5L, 5L). The L indicates an integer.
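A minimal sketch of the R version, assuming np$ones() is the call behind the output (the name ones_r is hypothetical):

```r
library(rTorch)

# The integer vector c(5L, 5L) plays the role of the Python tuple (5, 5)
ones_r <- np$ones(c(5L, 5L))
dim(ones_r)
```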

#> [,1] [,2] [,3] [,4] [,5]

#> [1,] 1 1 1 1 1

#> [2,] 1 1 1 1 1

#> [3,] 1 1 1 1 1

#> [4,] 1 1 1 1 1

#> [5,] 1 1 1 1 1

5.3.2 A numpy array from R vectors

For this matrix, or 2D tensor, we use three R vectors:

#> [,1] [,2] [,3]

#> [1,] 1 2 3

#> [2,] 4 5 6

#> [3,] 7 8 9

And we could transpose the array using numpy as well:
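Both steps can be sketched as follows, assuming the three R vectors are bound by rows before being handed to NumPy (the names X and Xt are hypothetical):

```r
library(rTorch)

# Three R vectors, one per row of the matrix
X <- np$array(rbind(c(1, 2, 3), c(4, 5, 6), c(7, 8, 9)))

# Transpose with NumPy; base R's t(X) gives the same result
Xt <- np$transpose(X)
Xt
```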

#> [,1] [,2] [,3]

#> [1,] 1 4 7

#> [2,] 2 5 8

#> [3,] 3 6 9

5.3.3 numpy arrays to tensors

#> tensor([1., 2., 3.])

5.3.4 Create and fill a tensor

We can create the tensor directly from R using tensor():

#> tensor([1., 2., 3.])

#> tensor(-1.)

#> [1] -1 2 3

5.3.5 Tensor to array, and vice versa

This is a very common operation in machine learning:
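The tensor-to-array direction can be sketched as follows, assuming a random 5 x 4 tensor (the names a and b are hypothetical):

```r
library(rTorch)

a <- torch$rand(5L, 4L)   # a random tensor
b <- a$numpy()            # convert it to a NumPy array, which R sees as a matrix
dim(b)
```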

#> [,1] [,2] [,3] [,4]

#> [1,] 0.5596 0.1791 0.0149 0.568

#> [2,] 0.0946 0.0738 0.9916 0.685

#> [3,] 0.4065 0.1239 0.2190 0.905

#> [4,] 0.2055 0.0958 0.0788 0.193

#> [5,] 0.6578 0.8162 0.2609 0.097

# convert a numpy array to a tensor

np_a = np$array(c(c(3, 4), c(3, 6)))

t_a = torch$from_numpy(np_a)

print(t_a)

#> tensor([3., 4., 3., 6.])

5.4 Create tensors

A random 1D tensor:

#> tensor([0.5074, 0.2779, 0.1923, 0.8058, 0.3472], dtype=torch.float32)

Force a tensor to be a 64-bit float:

#> tensor([0.0704, 0.9035, 0.6435, 0.5640, 0.0108])

Convert the tensor to a 16-bit float:
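A sketch of the two conversions, assuming the 64-bit tensor is created with torch$rand() (the names dt and ht are hypothetical):

```r
library(rTorch)

# A random 1D tensor stored as 64-bit floats
dt <- torch$rand(5L, dtype = torch$float64)

# Convert to 16-bit floats; some precision is lost in the process
ht <- dt$to(dtype = torch$float16)

dt$element_size()   # bytes per element before the conversion
ht$element_size()   # bytes per element after the conversion
```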

#> tensor([0.0704, 0.9033, 0.6436, 0.5640, 0.0108], dtype=torch.float16)

Create a tensor of size (5 x 7) with uninitialized memory:

#> tensor([[0.0000e+00, 0.0000e+00, 1.1811e+16, 3.0700e-41, 0.0000e+00, 0.0000e+00,

#> 1.4013e-45],

#> [0.0000e+00, 0.0000e+00, 0.0000e+00, 0.0000e+00, 0.0000e+00, 0.0000e+00,

#> 0.0000e+00],

#> [4.9982e+14, 3.0700e-41, 0.0000e+00, 0.0000e+00, 4.6368e+14, 3.0700e-41,

#> 0.0000e+00],

#> [0.0000e+00, 1.4013e-45, 0.0000e+00, 0.0000e+00, 0.0000e+00, 0.0000e+00,

#> 0.0000e+00],

#> [0.0000e+00, 0.0000e+00, 0.0000e+00, 0.0000e+00, 0.0000e+00, 0.0000e+00,

#> 0.0000e+00]], dtype=torch.float32)

Using arange to create a tensor. arange starts at 0.

#> tensor([0, 1, 2, 3, 4, 5, 6, 7, 8])

#> tensor([[0, 1, 2],

#> [3, 4, 5],

#> [6, 7, 8]])

5.4.1 Tensor fill

On this tensor:

#> tensor([[1., 1., 1.],

#> [1., 1., 1.],

#> [1., 1., 1.]])

Fill row 1 with 2s:

#> tensor([[2., 2., 2.],

#> [1., 1., 1.],

#> [1., 1., 1.]])

Fill row 2 with 3s:

#> tensor([[2., 2., 2.],

#> [3., 3., 3.],

#> [1., 1., 1.]])

Fill column 3 with fours (4):
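The fills in this subsection can be sketched as follows. This assumes that rTorch's `[` operator uses 1-based indices and returns a view of the tensor, so that fill_() on the selection modifies the original in place:

```r
library(rTorch)

v <- torch$ones(3L, 3L)

# invisible() only suppresses printing of the returned view
invisible(v[1L, ]$fill_(2L))    # row 1 becomes 2s
invisible(v[2L, ]$fill_(3L))    # row 2 becomes 3s
invisible(v[, 3L]$fill_(4L))    # column 3 becomes 4s, overwriting rows 1 and 2 there
v
```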

#> tensor([[2., 2., 4.],

#> [3., 3., 4.],

#> [1., 1., 4.]])

5.4.2 Tensor with a range of values

# Initialize a tensor with a range of values

v = torch$arange(10L) # similar to Python's range(10), but creating a tensor

(v = torch$arange(0L, 10L, step = 1L)) # size 10; similar to range(0, 10, 1)

#> tensor([0, 1, 2, 3, 4, 5, 6, 7, 8, 9])

5.4.3 Linear or log scale Tensor

Create a tensor with 10 linear points for (1, 10) inclusive:

#> tensor([ 1., 2., 3., 4., 5., 6., 7., 8., 9., 10.])

Create a tensor with 5 logarithmically spaced points between 10^-10 and 10^10, inclusive:
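Both outputs in this subsection are consistent with calls like the ones in the following sketch. The arguments are inferred from the printed values, not taken from the original chunks, and the names lin and logs are hypothetical:

```r
library(rTorch)

# 10 evenly spaced points from 1 to 10, both ends included
lin <- torch$linspace(1L, 10L, steps = 10L)

# 5 points evenly spaced in the exponent, from 10^-10 to 10^10
logs <- torch$logspace(start = -10L, end = 10L, steps = 5L)

lin
logs
```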

#> tensor([1.0000e-10, 1.0000e-05, 1.0000e+00, 1.0000e+05, 1.0000e+10])

5.4.4 In-place / Out-of-place fill

On this uninitialized tensor:

#> tensor([[0., 0., 0., 0., 0., 0., 0.],

#> [0., 0., 0., 0., 0., 0., 0.],

#> [0., 0., 0., 0., 0., 0., 0.],

#> [0., 0., 0., 0., 0., 0., 0.],

#> [0., 0., 0., 0., 0., 0., 0.]], dtype=torch.float32)

Fill the tensor with the value 3.5:

#> tensor([[3.5000, 3.5000, 3.5000, 3.5000, 3.5000, 3.5000, 3.5000],

#> [3.5000, 3.5000, 3.5000, 3.5000, 3.5000, 3.5000, 3.5000],

#> [3.5000, 3.5000, 3.5000, 3.5000, 3.5000, 3.5000, 3.5000],

#> [3.5000, 3.5000, 3.5000, 3.5000, 3.5000, 3.5000, 3.5000],

#> [3.5000, 3.5000, 3.5000, 3.5000, 3.5000, 3.5000, 3.5000]],

#> dtype=torch.float32)

Add a scalar to the tensor:

The tensor a is still filled with 3.5. A new tensor b is returned with values 3.5 + 4.0 = 7.5
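The difference between the two kinds of operation can be sketched as follows, assuming the tensor is allocated with torch$FloatTensor() as elsewhere in this chapter. In PyTorch, methods ending in an underscore, such as fill_(), modify the tensor in place, while add() returns a new tensor:

```r
library(rTorch)

a <- torch$FloatTensor(5L, 7L)   # uninitialized 5 x 7 tensor
invisible(a$fill_(3.5))          # in-place: a itself now holds 3.5 everywhere

b <- a$add(4.0)                  # out-of-place: a is unchanged, b is a new tensor

a$min()$item()                   # smallest value in a
b$max()$item()                   # largest value in b
```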

#> tensor([[3.5000, 3.5000, 3.5000, 3.5000, 3.5000, 3.5000, 3.5000],

#> [3.5000, 3.5000, 3.5000, 3.5000, 3.5000, 3.5000, 3.5000],

#> [3.5000, 3.5000, 3.5000, 3.5000, 3.5000, 3.5000, 3.5000],

#> [3.5000, 3.5000, 3.5000, 3.5000, 3.5000, 3.5000, 3.5000],

#> [3.5000, 3.5000, 3.5000, 3.5000, 3.5000, 3.5000, 3.5000]],

#> dtype=torch.float32)

#> tensor([[7.5000, 7.5000, 7.5000, 7.5000, 7.5000, 7.5000, 7.5000],

#> [7.5000, 7.5000, 7.5000, 7.5000, 7.5000, 7.5000, 7.5000],

#> [7.5000, 7.5000, 7.5000, 7.5000, 7.5000, 7.5000, 7.5000],

#> [7.5000, 7.5000, 7.5000, 7.5000, 7.5000, 7.5000, 7.5000],

#> [7.5000, 7.5000, 7.5000, 7.5000, 7.5000, 7.5000, 7.5000]],

#> dtype=torch.float32)

5.5 Tensor resizing

x = torch$randn(2L, 3L) # Size 2x3

print(x)

#> tensor([[-0.4375, 1.2873, -0.5258],

#> [ 0.7870, -0.8505, -1.2215]])

y = x$view(6L) # Resize x to size 6

print(y)

#> tensor([-0.4375, 1.2873, -0.5258, 0.7870, -0.8505, -1.2215])

z = x$view(-1L, 2L) # Size 3x2

print(z)

#> tensor([[-0.4375, 1.2873],

#> [-0.5258, 0.7870],

#> [-0.8505, -1.2215]])

print(z$shape)

#> torch.Size([3, 2])

5.5.1 Exercise

Reproduce this tensor:

0 1 2

3 4 5

6 7 8

# create a vector with the number of elements

v = torch$arange(9L)

# resize to a 3x3 tensor

(v = v$view(3L, 3L))

#> tensor([[0, 1, 2],

#> [3, 4, 5],

#> [6, 7, 8]])

5.6 Concatenate tensors
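The chunks for this section are not shown. A sketch consistent with the shapes in the outputs, assuming the same 2 x 3 tensor is concatenated three times with torch$cat() (the names are hypothetical):

```r
library(rTorch)

x <- torch$randn(2L, 3L)

# dim 0 stacks the copies on top of each other: 6 x 3
by_rows <- torch$cat(list(x, x, x), 0L)

# dim 1 places the copies side by side: 2 x 9
by_cols <- torch$cat(list(x, x, x), 1L)

by_rows$shape
by_cols$shape
```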

#> tensor([[-0.3954, 1.4149, 0.2381],

#> [-1.2126, 0.7869, 0.0826]])

#> torch.Size([2, 3])

5.6.1 Concatenate by rows

#> tensor([[-0.3954, 1.4149, 0.2381],

#> [-1.2126, 0.7869, 0.0826],

#> [-0.3954, 1.4149, 0.2381],

#> [-1.2126, 0.7869, 0.0826],

#> [-0.3954, 1.4149, 0.2381],

#> [-1.2126, 0.7869, 0.0826]])

#> torch.Size([6, 3])

5.6.2 Concatenate by columns

#> tensor([[-0.3954, 1.4149, 0.2381, -0.3954, 1.4149, 0.2381, -0.3954, 1.4149,

#> 0.2381],

#> [-1.2126, 0.7869, 0.0826, -1.2126, 0.7869, 0.0826, -1.2126, 0.7869,

#> 0.0826]])

#> torch.Size([2, 9])

5.7 Reshape tensors

5.7.1 With chunk():

Let’s say this is an image tensor with 3 channels and 28x28 pixels:

# ----- Reshape tensors -----

img <- torch$ones(3L, 28L, 28L) # Create the tensor of ones

print(img$size())

#> torch.Size([3, 28, 28])

Along the first dimension, dim = 0L, split the tensor into three chunks:

img_chunks <- torch$chunk(img, chunks = 3L, dim = 0L)

print(length(img_chunks))

print(class(img_chunks))

#> [1] 3

#> [1] "list"

img_chunks is a list of three members.

The first chunk member:

# 1st chunk member

img_chunk <- img_chunks[[1]]

print(img_chunk$size())

print(img_chunk$sum()) # if the tensor had all ones, what is the sum?

#> torch.Size([1, 28, 28])

#> tensor(784.)

The second chunk member:

# 2nd chunk member

img_chunk <- img_chunks[[2]]

print(img_chunk$size())

print(img_chunk$sum()) # if the tensor had all ones, what is the sum?

#> torch.Size([1, 28, 28])

#> tensor(784.)

The third chunk member:

# 3rd chunk member

img_chunk <- img_chunks[[3]]

print(img_chunk$size())

print(img_chunk$sum()) # if the tensor had all ones, what is the sum?

#> torch.Size([1, 28, 28])

#> tensor(784.)

5.7.1.1 Exercise

- Create a tensor of shape 3x28x28 filled with values 0.25 on the first channel

- The second channel with 0.5

- The third channel with 0.75

- Find the sum for each separate channel

- Find the sum of all channels

5.7.2 With index_select():

#> torch.Size([3, 28, 28])

This is the layer 1:

# index_select. get layer 1

indices = torch$tensor(c(0L))

img_layer_1 <- torch$index_select(img, dim = 0L, index = indices)

The size of the layer:

#> torch.Size([1, 28, 28])

The sum of all elements in that layer:

#> tensor(784.)

This is the layer 2:

# index_select. get layer 2

indices = torch$tensor(c(1L))

img_layer_2 <- torch$index_select(img, dim = 0L, index = indices)

print(img_layer_2$size())

print(img_layer_2$sum())

#> torch.Size([1, 28, 28])

#> tensor(784.)

This is the layer 3:

# index_select. get layer 3

indices = torch$tensor(c(2L))

img_layer_3 <- torch$index_select(img, dim = 0L, index = indices)

print(img_layer_3$size())

print(img_layer_3$sum())

#> torch.Size([1, 28, 28])

#> tensor(784.)

5.8 Special tensors

5.8.1 Identity matrix

#> tensor([[1., 0., 0.],

#> [0., 1., 0.],

#> [0., 0., 1.]])

#> tensor([[1., 0., 0., 0., 0.],

#> [0., 1., 0., 0., 0.],

#> [0., 0., 1., 0., 0.],

#> [0., 0., 0., 1., 0.],

#> [0., 0., 0., 0., 1.]])

5.8.2 Ones

(v = torch$ones(10L)) # A tensor of size 10 containing all ones

# reshape

(v = torch$ones(2L, 1L, 2L, 1L)) # Size 2x1x2x1, a 4D tensor

#> tensor([1., 1., 1., 1., 1., 1., 1., 1., 1., 1.])

#> tensor([[[[1.],

#> [1.]]],

#>

#>

#> [[[1.],

#> [1.]]]])

A matrix filled with ones is called a matrix of ones, or all-ones matrix; it is not the same thing as a unitary matrix. This is a 4x4 matrix of ones.

#> tensor([[1., 1., 1., 1.],

#> [1., 1., 1., 1.],

#> [1., 1., 1., 1.],

#> [1., 1., 1., 1.]])

# eye tensor

eye = torch$eye(3L)

print(eye)

# like eye tensor

v = torch$ones_like(eye) # A tensor with same shape as eye. Fill it with 1.

v

#> tensor([[1., 0., 0.],

#> [0., 1., 0.],

#> [0., 0., 1.]])

#> tensor([[1., 1., 1.],

#> [1., 1., 1.],

#> [1., 1., 1.]])

5.8.3 Zeros
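The chunks for this subsection are not shown. A sketch that yields tensors with the same shapes as the outputs, assuming torch$zeros() is used in the same way as torch$ones() above (the names z1, z2 and z3 are hypothetical):

```r
library(rTorch)

z1 <- torch$zeros(10L)           # 1D tensor with 10 zeros
z2 <- torch$zeros(4L, 4L)        # 4 x 4 matrix of zeros
z3 <- torch$zeros(3L, 4L, 2L)    # 3D tensor of zeros, 3 x 4 x 2

z3$shape
```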

#> tensor([0., 0., 0., 0., 0., 0., 0., 0., 0., 0.])

#> tensor([[0., 0., 0., 0.],

#> [0., 0., 0., 0.],

#> [0., 0., 0., 0.],

#> [0., 0., 0., 0.]])

#> tensor([[[0., 0.],

#> [0., 0.],

#> [0., 0.],

#> [0., 0.]],

#>

#> [[0., 0.],

#> [0., 0.],

#> [0., 0.],

#> [0., 0.]],

#>

#> [[0., 0.],

#> [0., 0.],

#> [0., 0.],

#> [0., 0.]]])

5.8.4 Diagonal operations

Given the 1D tensor

#> tensor([1, 2, 3])

5.8.4.1 Diagonal matrix

We want to fill the main diagonal with the vector:

#> tensor([[1, 0, 0],

#> [0, 2, 0],

#> [0, 0, 3]])

What about filling the diagonal above the main:

#> tensor([[0, 1, 0, 0],

#> [0, 0, 2, 0],

#> [0, 0, 0, 3],

#> [0, 0, 0, 0]])

Or the diagonal below the main:
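The three diagonal fills can be sketched with torch$diag(), whose second argument selects the diagonal: 0 for the main one, positive values above it, negative values below it. The tensor names are hypothetical:

```r
library(rTorch)

v <- torch$tensor(c(1L, 2L, 3L))

d_main  <- torch$diag(v)        # 3 x 3, v on the main diagonal
d_above <- torch$diag(v, 1L)    # 4 x 4, v on the first diagonal above the main
d_below <- torch$diag(v, -1L)   # 4 x 4, v on the first diagonal below the main

d_below
```

The off-diagonal results are 4 x 4 because a diagonal shifted by one position needs one extra row and column to hold all three values.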

#> tensor([[0, 0, 0, 0],

#> [1, 0, 0, 0],

#> [0, 2, 0, 0],

#> [0, 0, 3, 0]])

5.9 Access to tensor elements

#> tensor([[1., 2.],

#> [3., 4.]])

Print element at position 1,1:

#> tensor(1.)

Fill element at position 1,1 with 5:

#> tensor(5.)

Show the modified tensor:

#> tensor([[5., 2.],

#> [3., 4.]])

Access the element at position (1, 0) counting from 0, that is, row 2, column 1 with the 1-based indexing used above:
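The accesses in this section can be sketched as follows. This assumes that rTorch's `[` operator uses 1-based indices, as the outputs above suggest, and that it returns a view that fill_() modifies in place. The tensor name is hypothetical:

```r
library(rTorch)

new_tensor <- torch$tensor(list(list(1, 2), list(3, 4)))

first <- new_tensor[1L, 1L]$item()        # element at row 1, column 1
invisible(new_tensor[1L, 1L]$fill_(5))    # overwrite that element in place

elem <- new_tensor[2L, 1L]                # row 2, column 1: a 0-dimensional tensor
elem$item()                               # convert it to an R number
```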

#> tensor(3.)

#> [1] 3

5.9.1 Indices to tensor elements

On this tensor:

#> tensor([[ 0.7076, 0.0816, -0.0431, 2.0698],

#> [ 0.6320, 0.5760, 0.1177, -1.9255],

#> [ 0.1964, -0.1771, -2.2976, -0.1239]])

Select indices, dim=0:

#> tensor([[ 0.7076, 0.0816, -0.0431, 2.0698],

#> [ 0.1964, -0.1771, -2.2976, -0.1239]])

Select indices, dim=1:
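The two selections are consistent with the following sketch, assuming a random 3 x 4 tensor and the 0-based indices 0 and 2 passed to torch$index_select() (the names are hypothetical):

```r
library(rTorch)

x <- torch$randn(3L, 4L)

# index_select() takes 0-based indices, as in Python
indices <- torch$tensor(list(0L, 2L))

rows <- torch$index_select(x, 0L, indices)   # first and third rows: 2 x 4
cols <- torch$index_select(x, 1L, indices)   # first and third columns: 3 x 2

rows$shape
cols$shape
```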

#> tensor([[ 0.7076, -0.0431],

#> [ 0.6320, 0.1177],

#> [ 0.1964, -2.2976]])

5.9.2 Using the take function

# Take by indices

src = torch$tensor(list(list(4, 3, 5),

list(6, 7, 8)) )

print(src)

print( torch$take(src, torch$tensor(list(0L, 2L, 5L))) )

#> tensor([[4., 3., 5.],

#> [6., 7., 8.]])

#> tensor([4., 5., 8.])

5.10 Other tensor operations

5.10.1 Cross product

m1 = torch$ones(3L, 5L)

m2 = torch$ones(3L, 5L)

v1 = torch$ones(3L)

# Cross product

# Size 3x5

(r = torch$cross(m1, m2))

#> tensor([[0., 0., 0., 0., 0.],

#> [0., 0., 0., 0., 0.],

#> [0., 0., 0., 0., 0.]])

5.11 Logical operations

m0 = torch$zeros(3L, 5L)

m1 = torch$ones(3L, 5L)

m2 = torch$eye(3L, 5L)

print(m1 == m0)

#> tensor([[False, False, False, False, False],

#> [False, False, False, False, False],

#> [False, False, False, False, False]])

print(m1 != m1)

#> tensor([[False, False, False, False, False],

#> [False, False, False, False, False],

#> [False, False, False, False, False]])

print(m2 == m2)

#> tensor([[True, True, True, True, True],

#> [True, True, True, True, True],

#> [True, True, True, True, True]])

# AND

m1 & m1

#> tensor([[1, 1, 1, 1, 1],

#> [1, 1, 1, 1, 1],

#> [1, 1, 1, 1, 1]], dtype=torch.uint8)

# OR

m0 | m2

#> tensor([[1, 0, 0, 0, 0],

#> [0, 1, 0, 0, 0],

#> [0, 0, 1, 0, 0]], dtype=torch.uint8)

# OR

m1 | m2

#> tensor([[1, 1, 1, 1, 1],

#> [1, 1, 1, 1, 1],

#> [1, 1, 1, 1, 1]], dtype=torch.uint8)

5.11.1 Extract a unique logical result

With all:

# tensor is less than

A <- torch$ones(60000L, 1L, 28L, 28L)

C <- A * 0.5

# is C < A

all(torch$lt(C, A))

#> tensor(1, dtype=torch.uint8)

all(C < A)

#> tensor(1, dtype=torch.uint8)

# is A < C

all(A < C)

#> tensor(0, dtype=torch.uint8)

With function all_boolean:

5.11.2 Greater than (gt)

5.11.3 Less than or equal (le)

# tensor is less than or equal

A1 <- torch$ones(60000L, 1L, 28L, 28L)

all(torch$le(A1, A1))

#> tensor(1, dtype=torch.uint8)

all(A1 <= A1)

#> tensor(1, dtype=torch.uint8)

# tensor is greater than or equal

A0 <- torch$zeros(60000L, 1L, 28L, 28L)

all(torch$ge(A0, A0))

#> tensor(1, dtype=torch.uint8)

all(A0 >= A0)

#> tensor(1, dtype=torch.uint8)

all(A1 >= A0)

#> tensor(1, dtype=torch.uint8)

all(A1 <= A0)

#> tensor(0, dtype=torch.uint8)

5.11.4 Logical NOT (!)

all_true <- torch$BoolTensor(list(TRUE, TRUE, TRUE, TRUE))

all_true

#> tensor([True, True, True, True])

# logical NOT

not_all_true <- !all_true

not_all_true

#> tensor([False, False, False, False])

diag <- torch$eye(5L)

diag

#> tensor([[1., 0., 0., 0., 0.],

#> [0., 1., 0., 0., 0.],

#> [0., 0., 1., 0., 0.],

#> [0., 0., 0., 1., 0.],

#> [0., 0., 0., 0., 1.]])

# logical NOT

not_diag <- !diag

# convert to integer

not_diag$to(dtype=torch$uint8)

#> tensor([[0, 1, 1, 1, 1],

#> [1, 0, 1, 1, 1],

#> [1, 1, 0, 1, 1],

#> [1, 1, 1, 0, 1],

#> [1, 1, 1, 1, 0]], dtype=torch.uint8)

5.12 Distributions

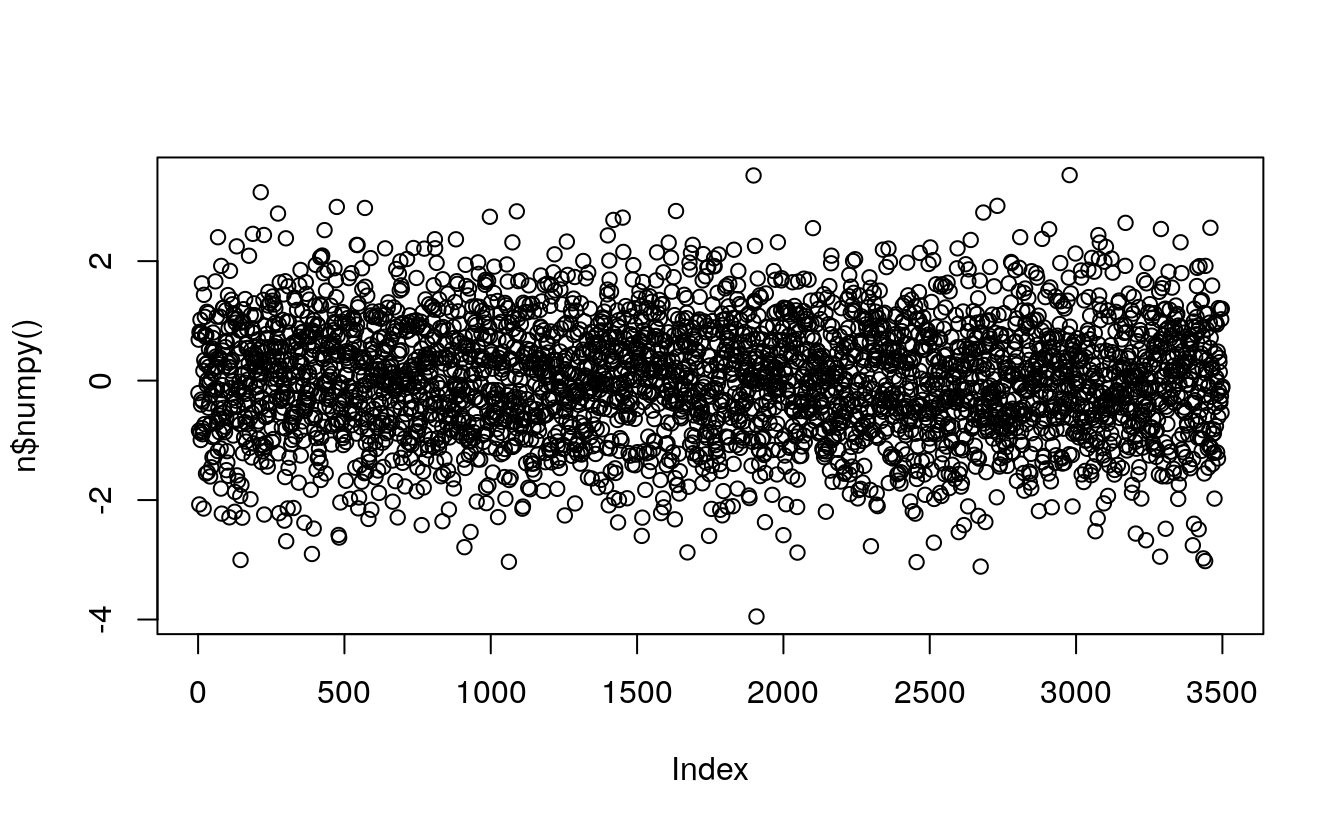

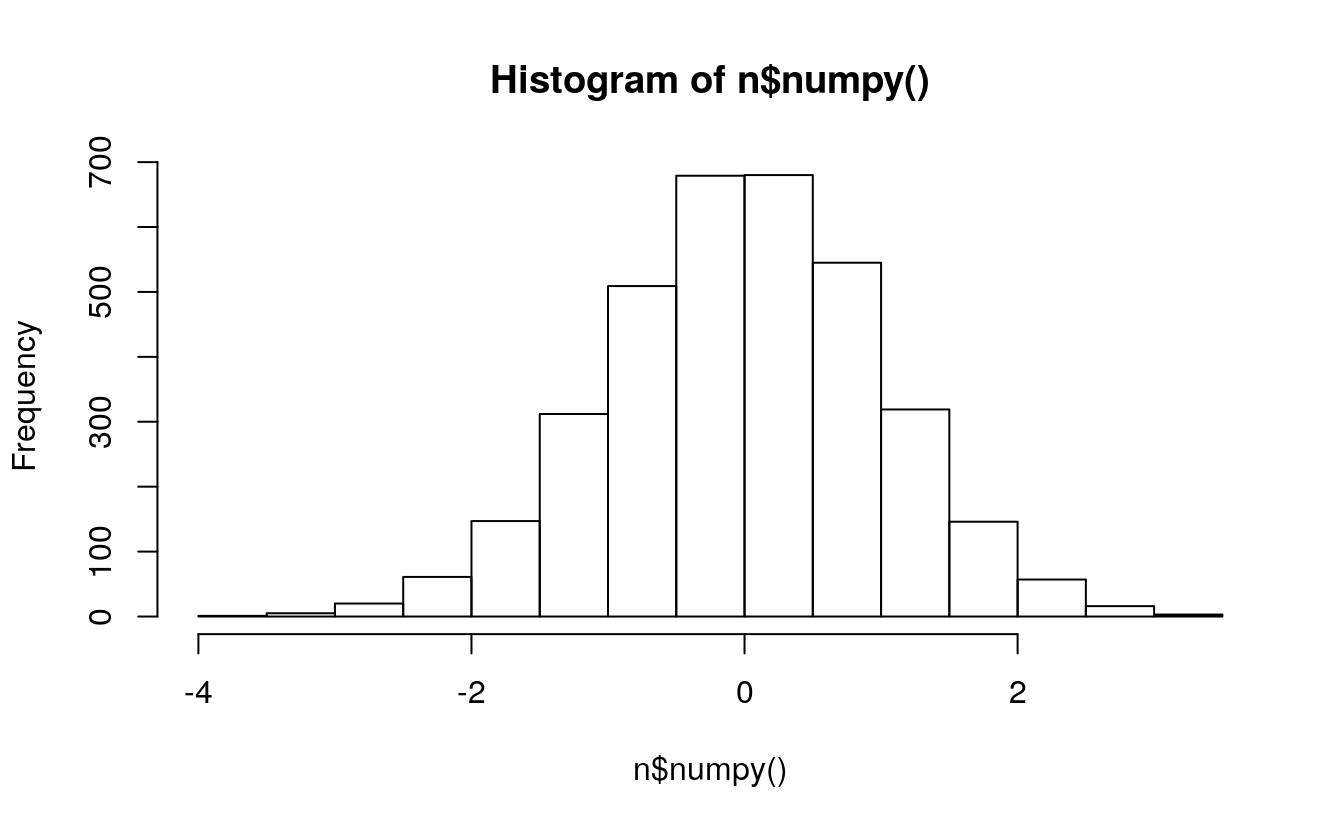

Initialize a tensor randomized with a normal distribution with mean=0, var=1:

n <- torch$randn(3500L)

n

#> tensor([-0.2087, 0.6850, -0.8386, ..., 1.2029, -0.1329, -0.0998])

plot(n$numpy())

hist(n$numpy())

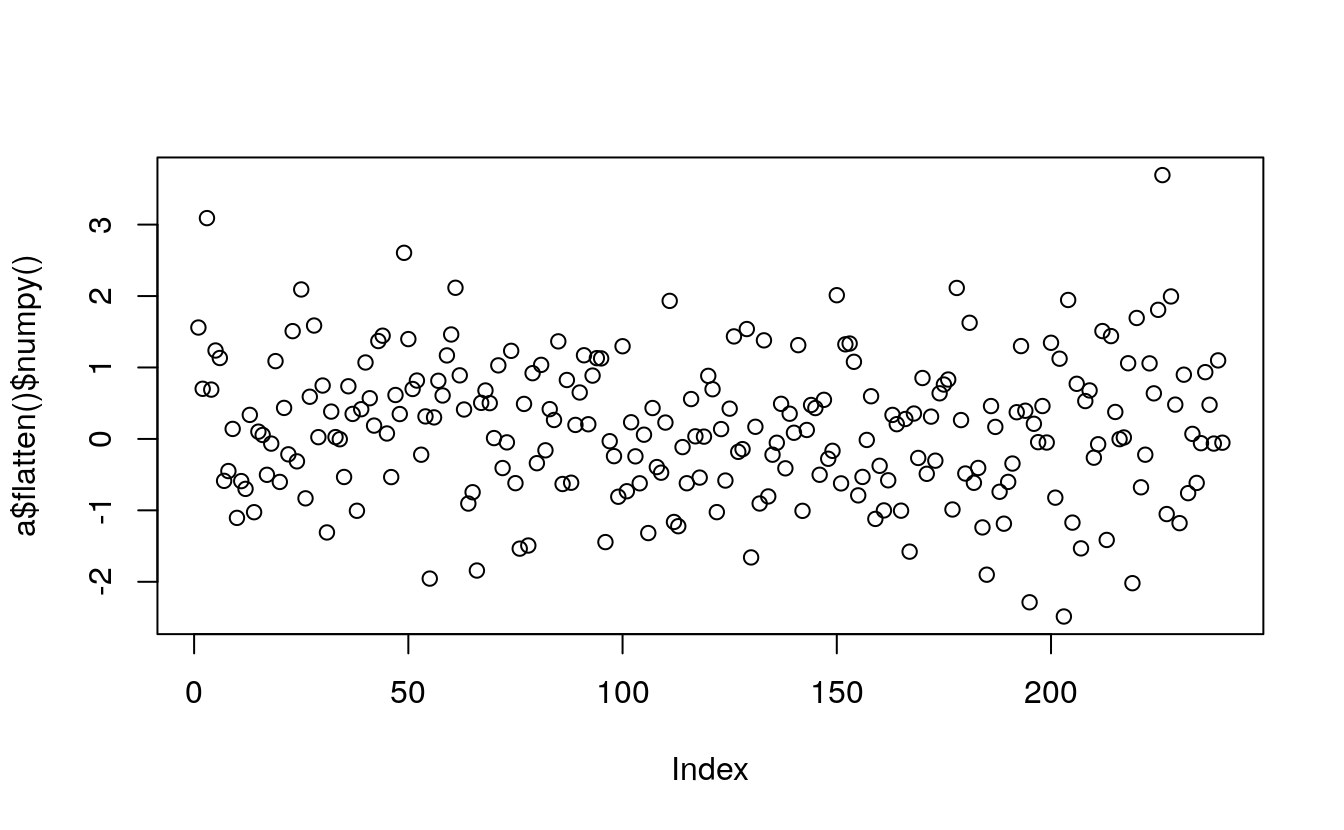

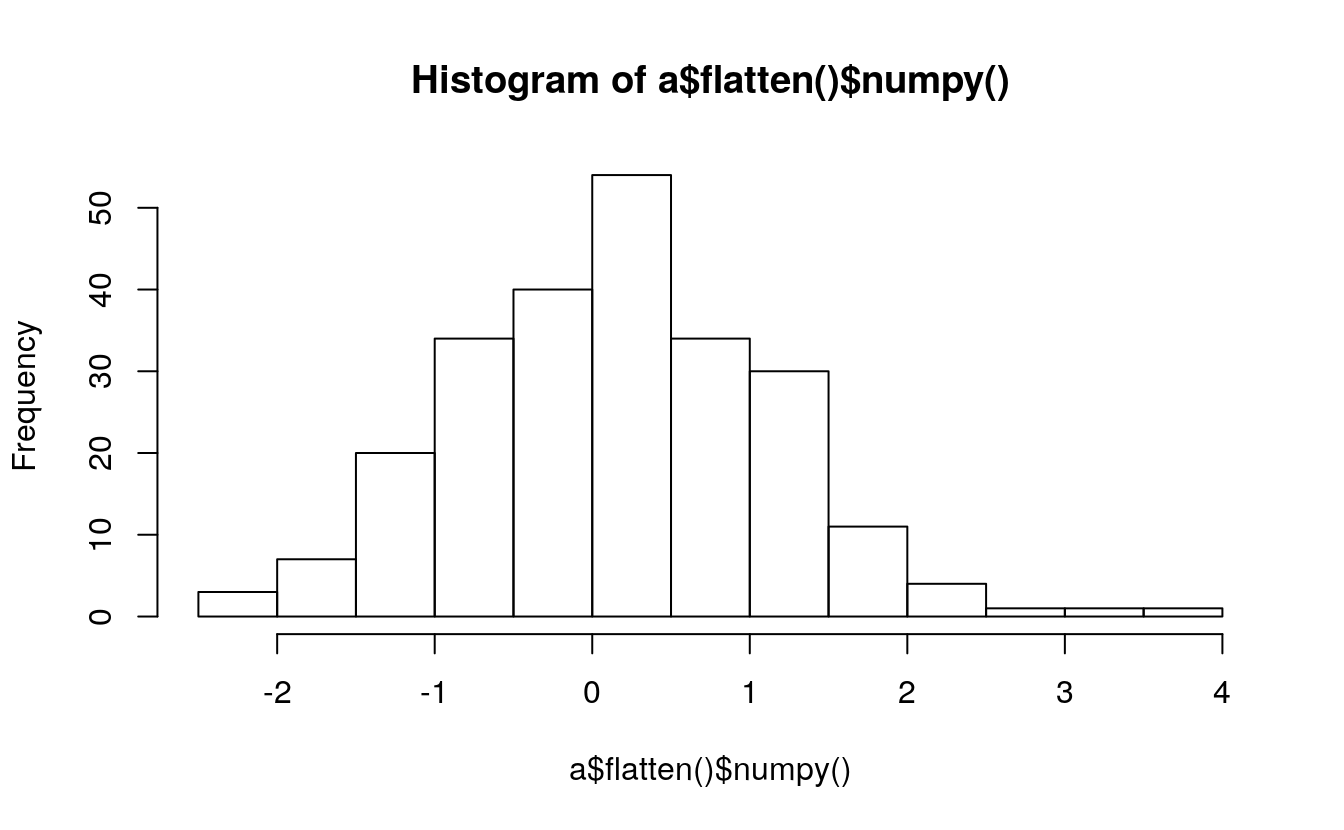

a <- torch$randn(8L, 5L, 6L)

# print(a)

print(a$size())

#> torch.Size([8, 5, 6])

plot(a$flatten()$numpy())

hist(a$flatten()$numpy())

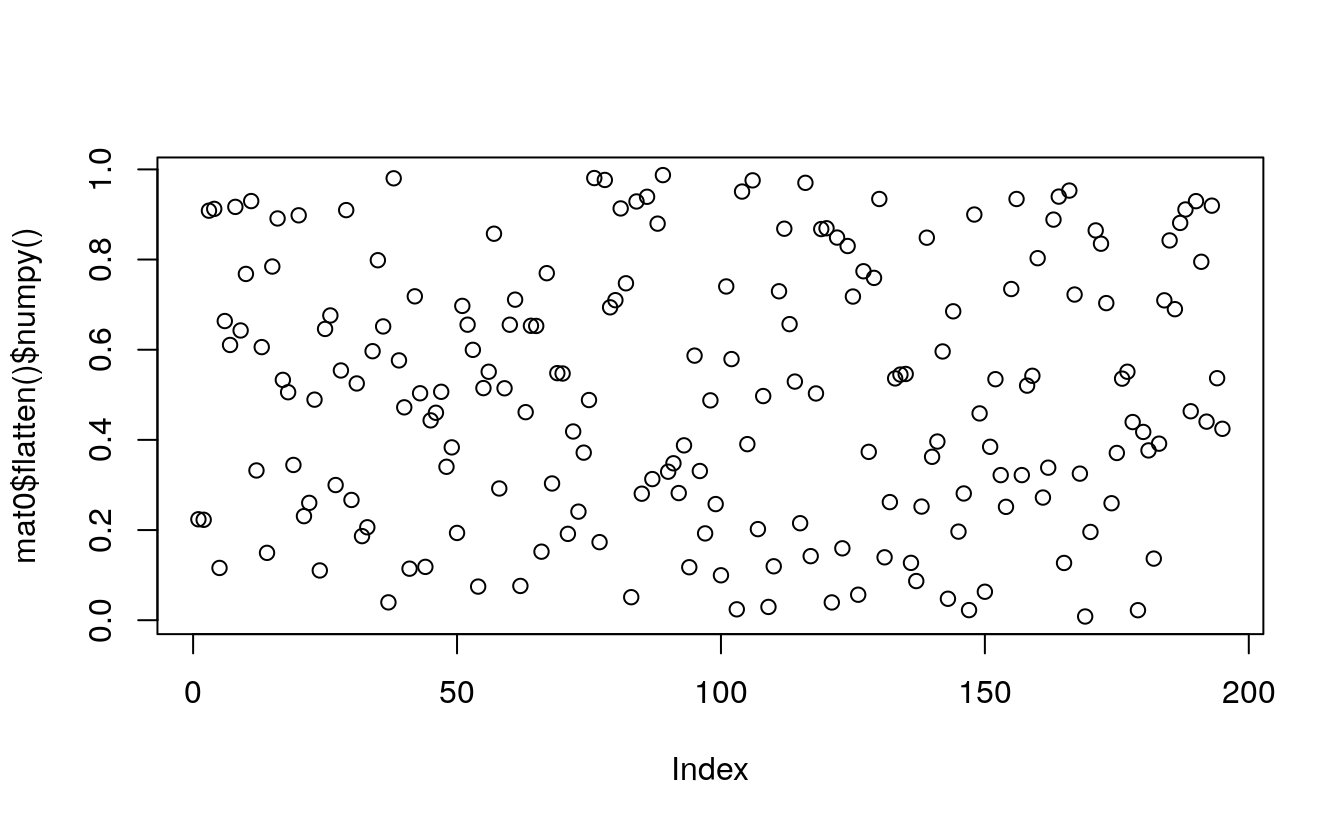

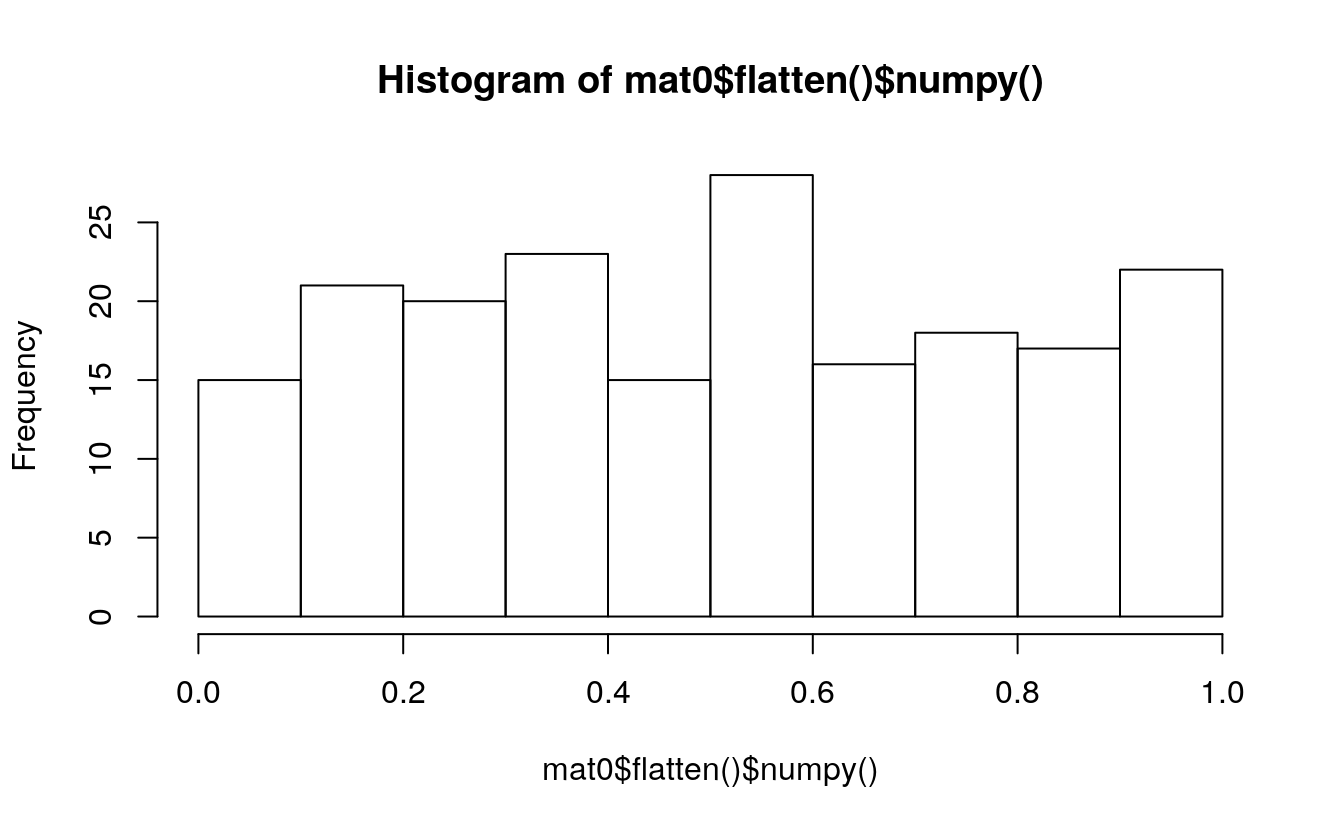

5.12.1 Uniform matrix

library(rTorch)

# 13x15 matrix uniformly distributed between 0 and 1

mat0 <- torch$FloatTensor(13L, 15L)$uniform_(0L, 1L)

plot(mat0$flatten()$numpy())

hist(mat0$flatten()$numpy())

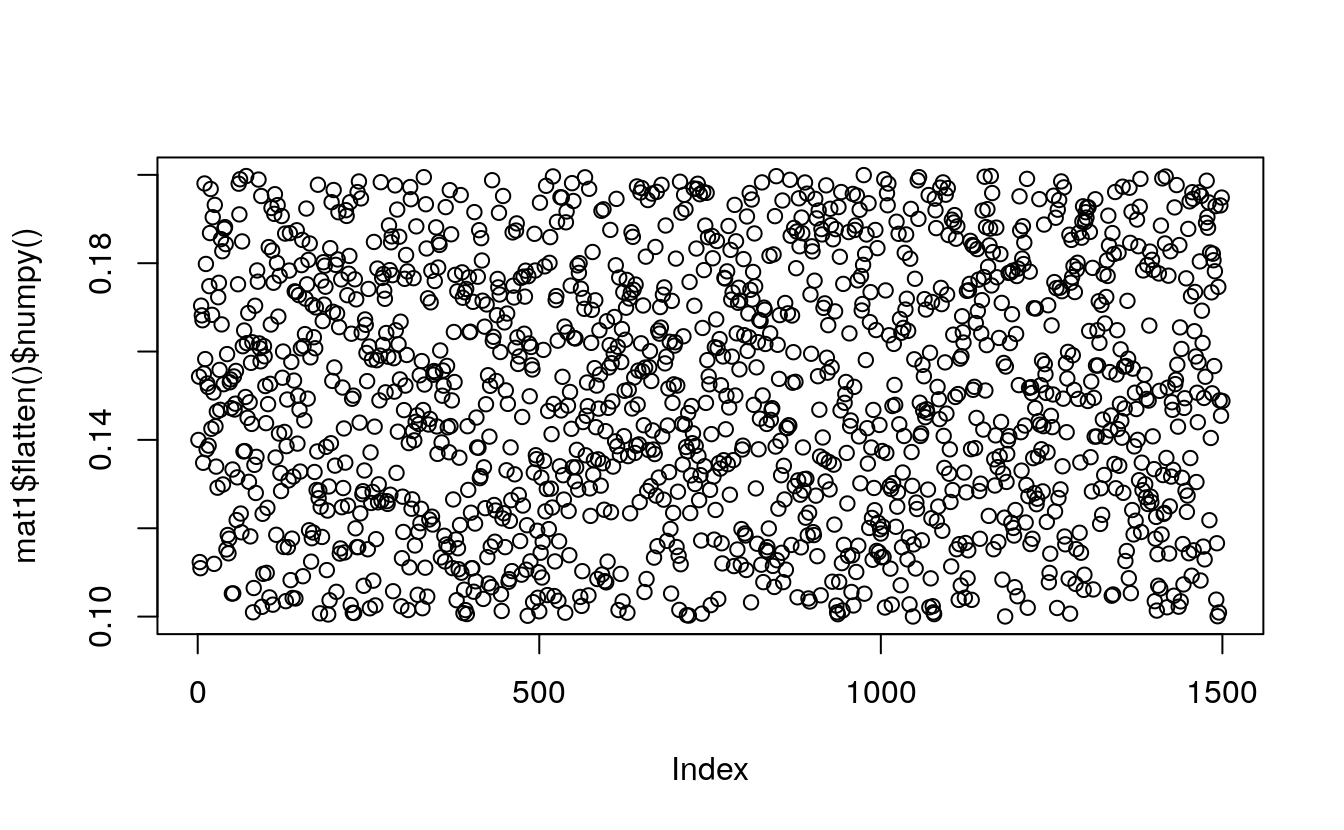

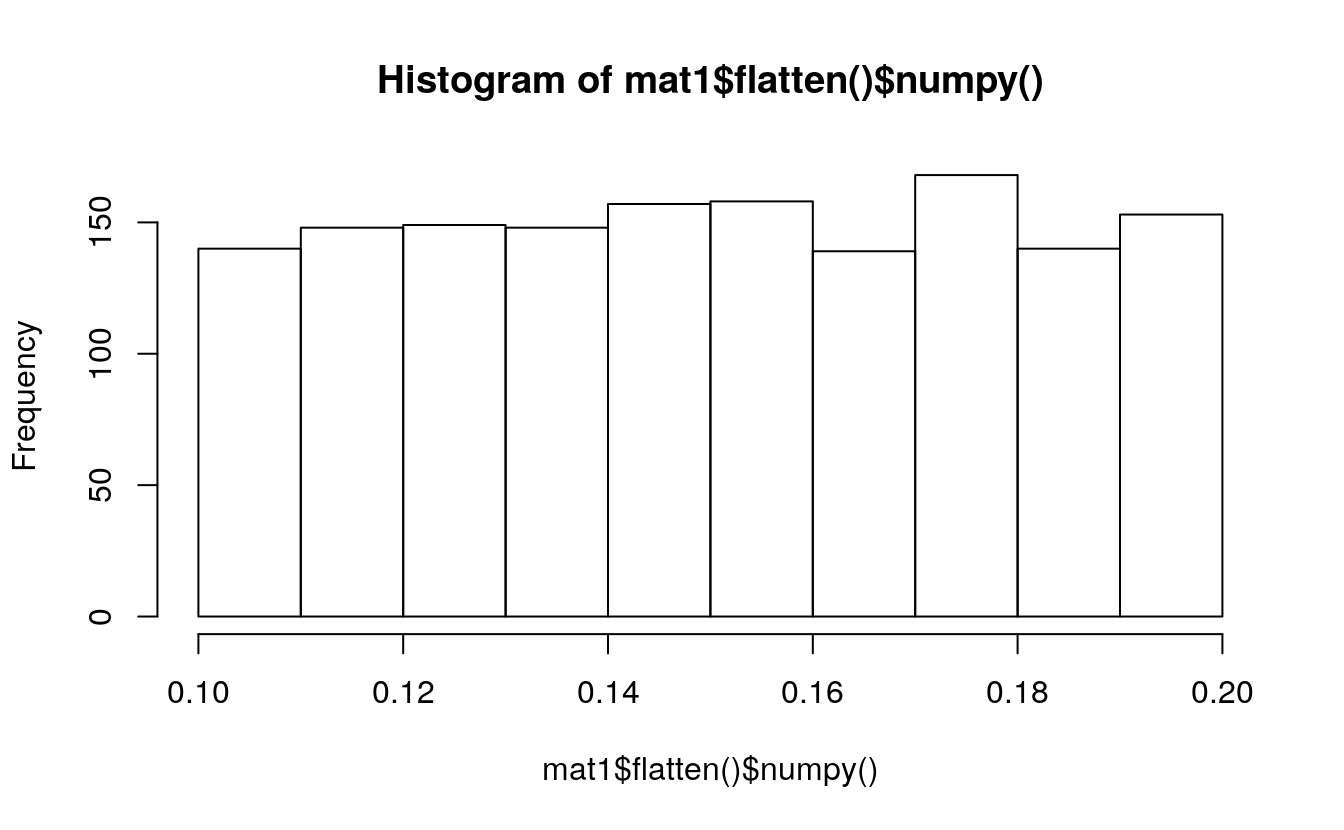

# fill a 3x5 matrix with 0.1

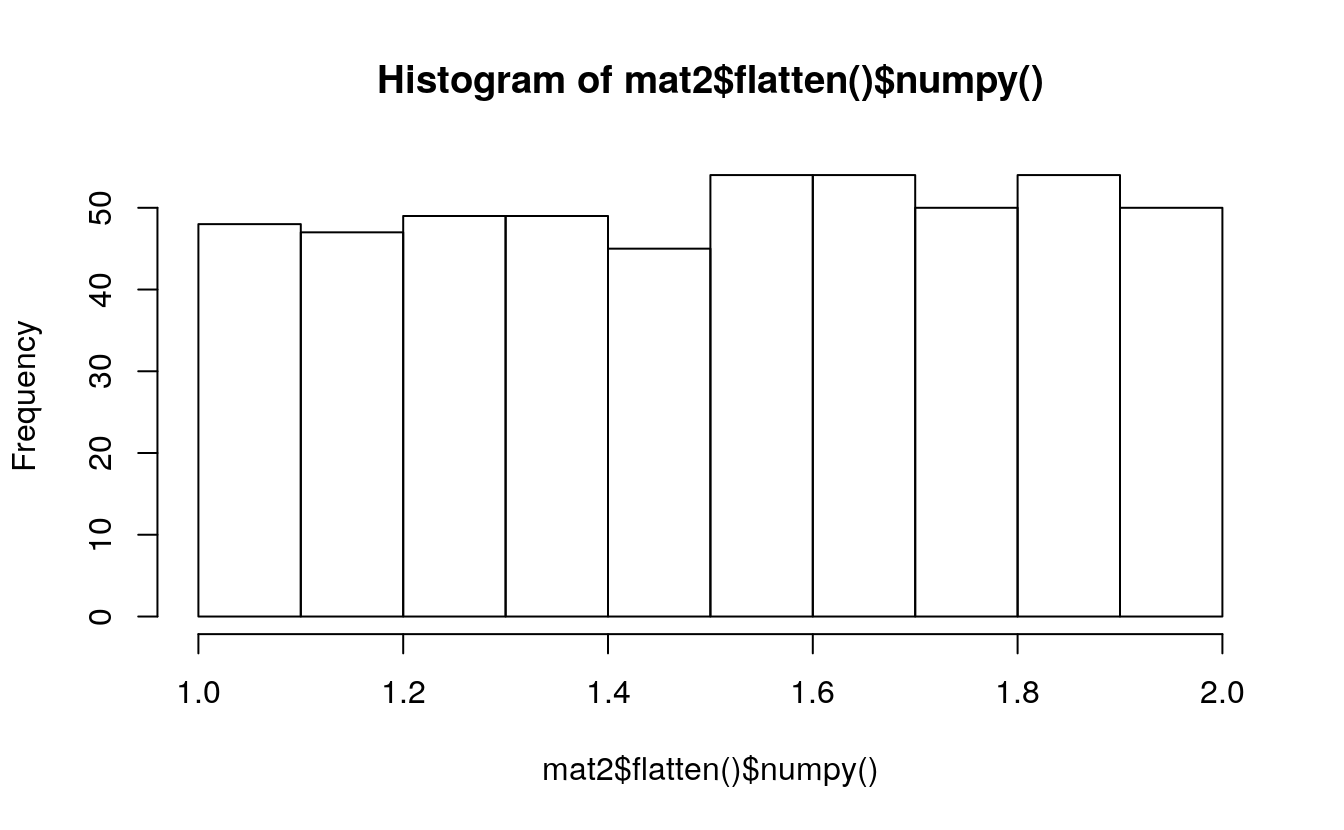

mat1 <- torch$FloatTensor(30L, 50L)$uniform_(0.1, 0.2)

plot(mat1$flatten()$numpy())

hist(mat1$flatten()$numpy())

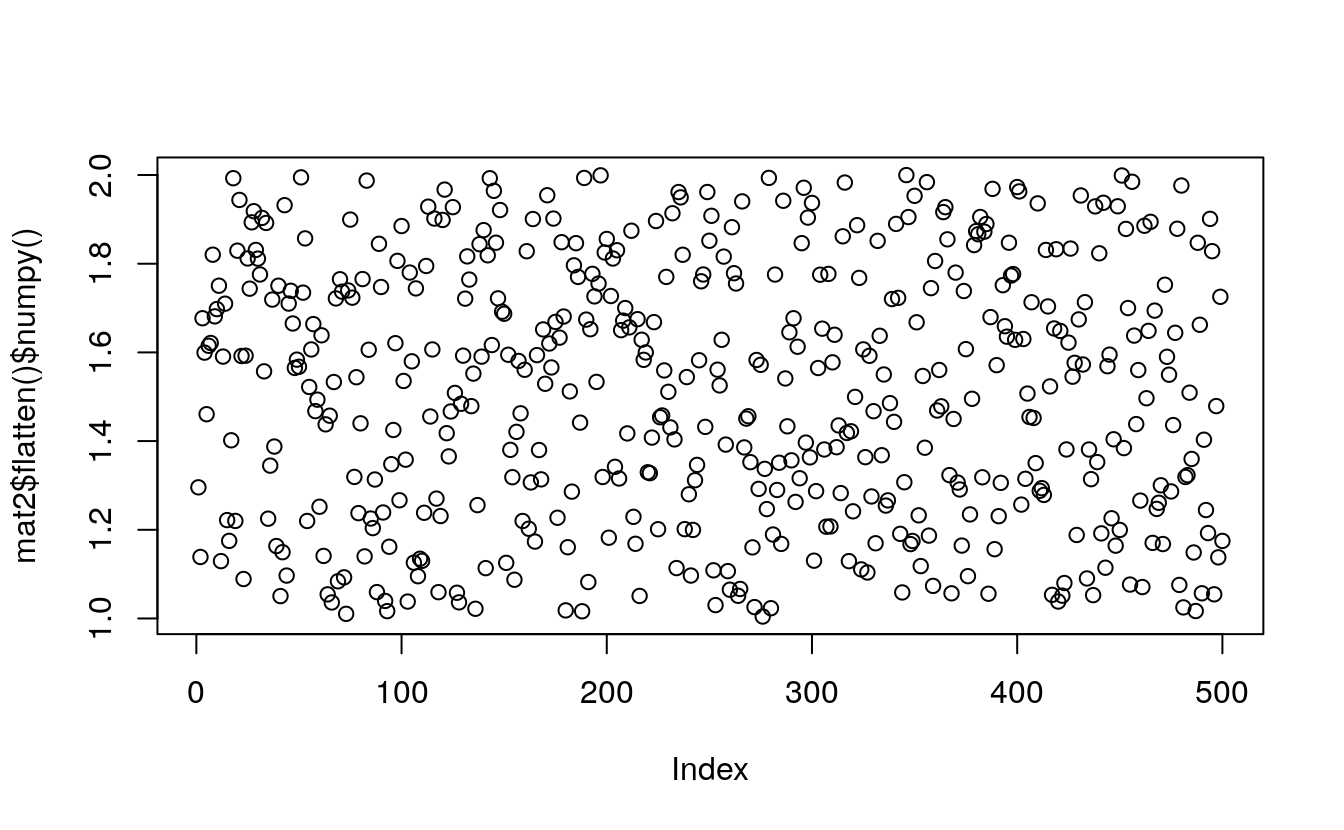

# a vector of 500 values uniformly distributed between 1 and 2

mat2 <- torch$FloatTensor(500L)$uniform_(1, 2)

plot(mat2$flatten()$numpy())

hist(mat2$flatten()$numpy())

5.12.2 Binomial distribution

Binomial <- torch$distributions$binomial$Binomial

m = Binomial(100, torch$tensor(list(0 , .2, .8, 1)))

(x = m$sample())

#> tensor([ 0., 23., 78., 100.])m = Binomial(torch$tensor(list(list(5.), list(10.))),

torch$tensor(list(0.5, 0.8)))

(x = m$sample())

#> tensor([[3., 4.],

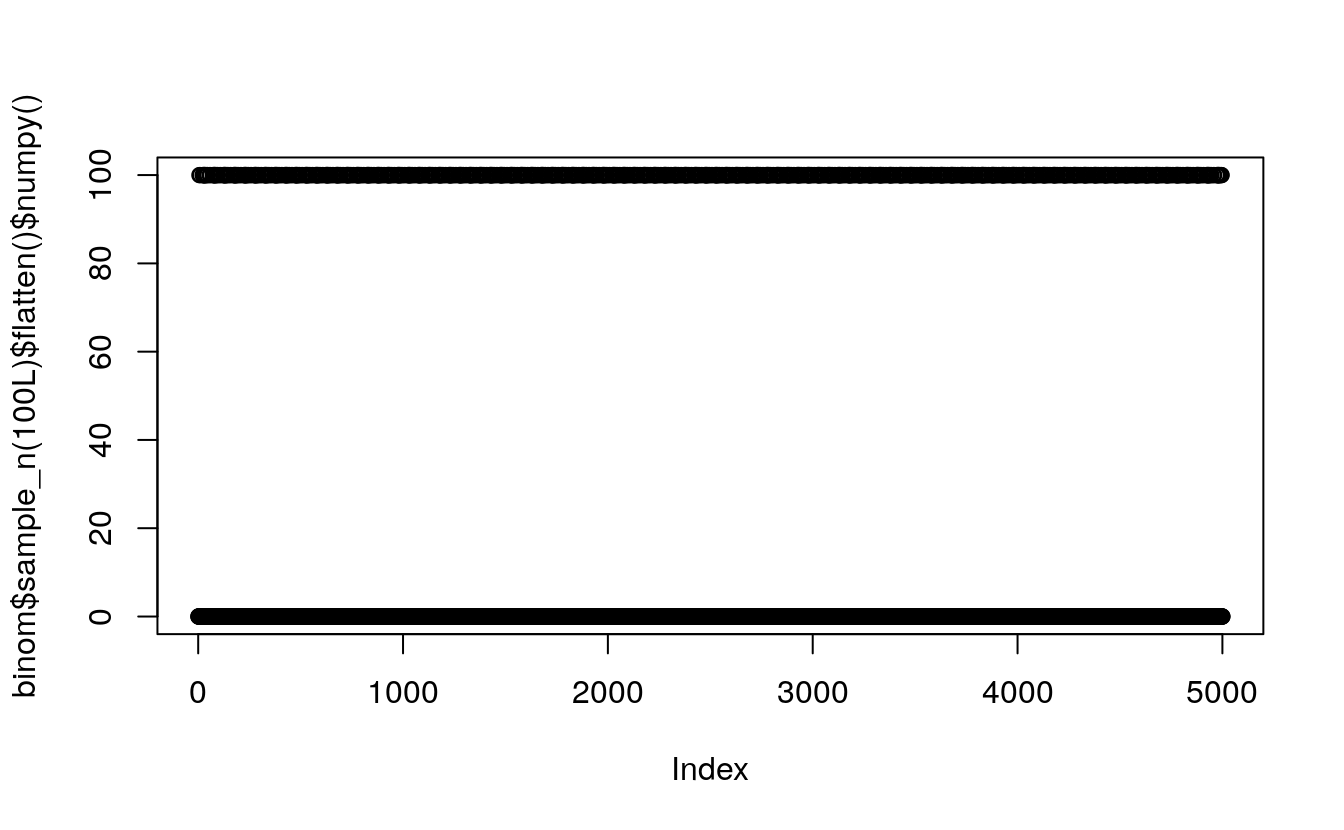

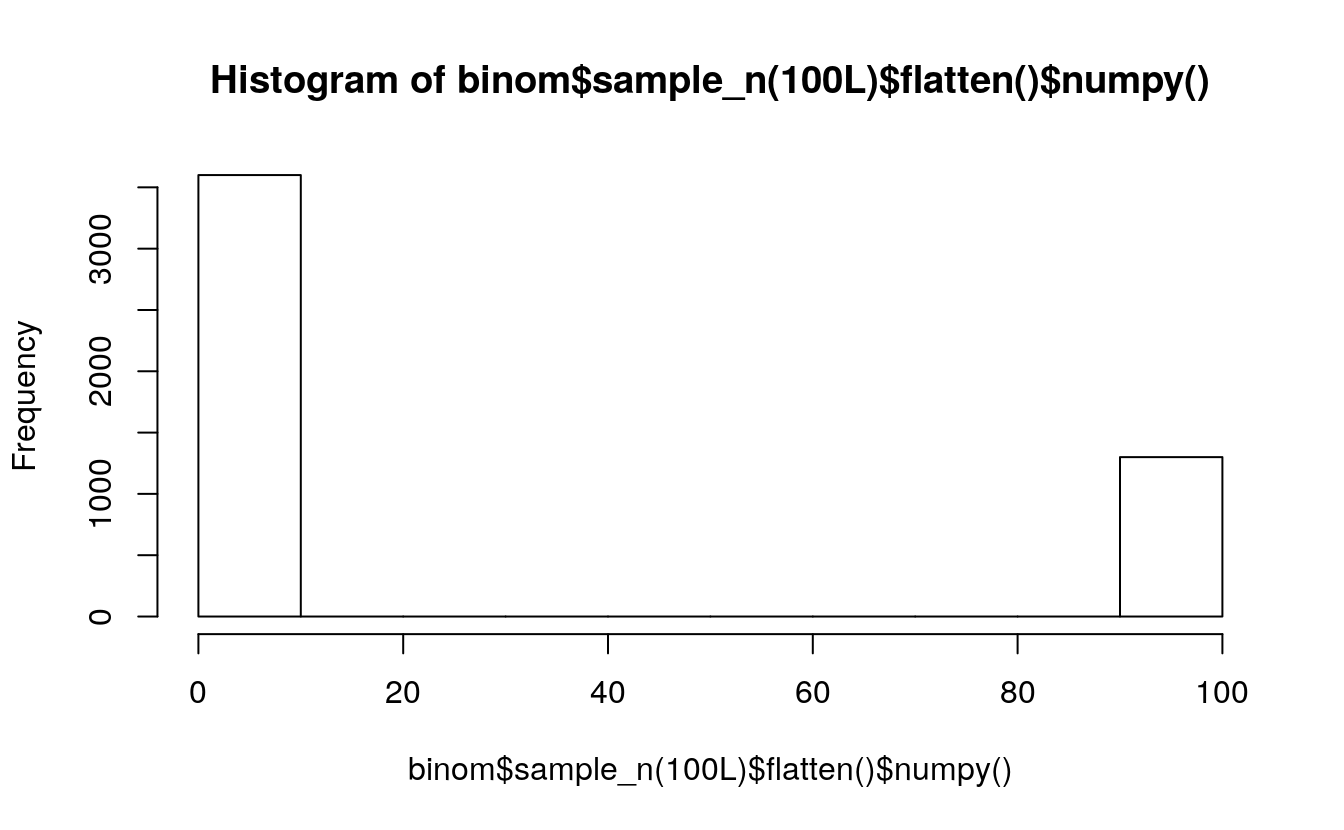

#> [6., 8.]])binom <- Binomial(100, torch$FloatTensor(5L, 10L))

print(binom)

#> Binomial(total_count: torch.Size([5, 10]), probs: torch.Size([5, 10]), logits: torch.Size([5, 10]))

print(binom$sample_n(100L)$shape)

#> torch.Size([100, 5, 10])

plot(binom$sample_n(100L)$flatten()$numpy())

hist(binom$sample_n(100L)$flatten()$numpy())

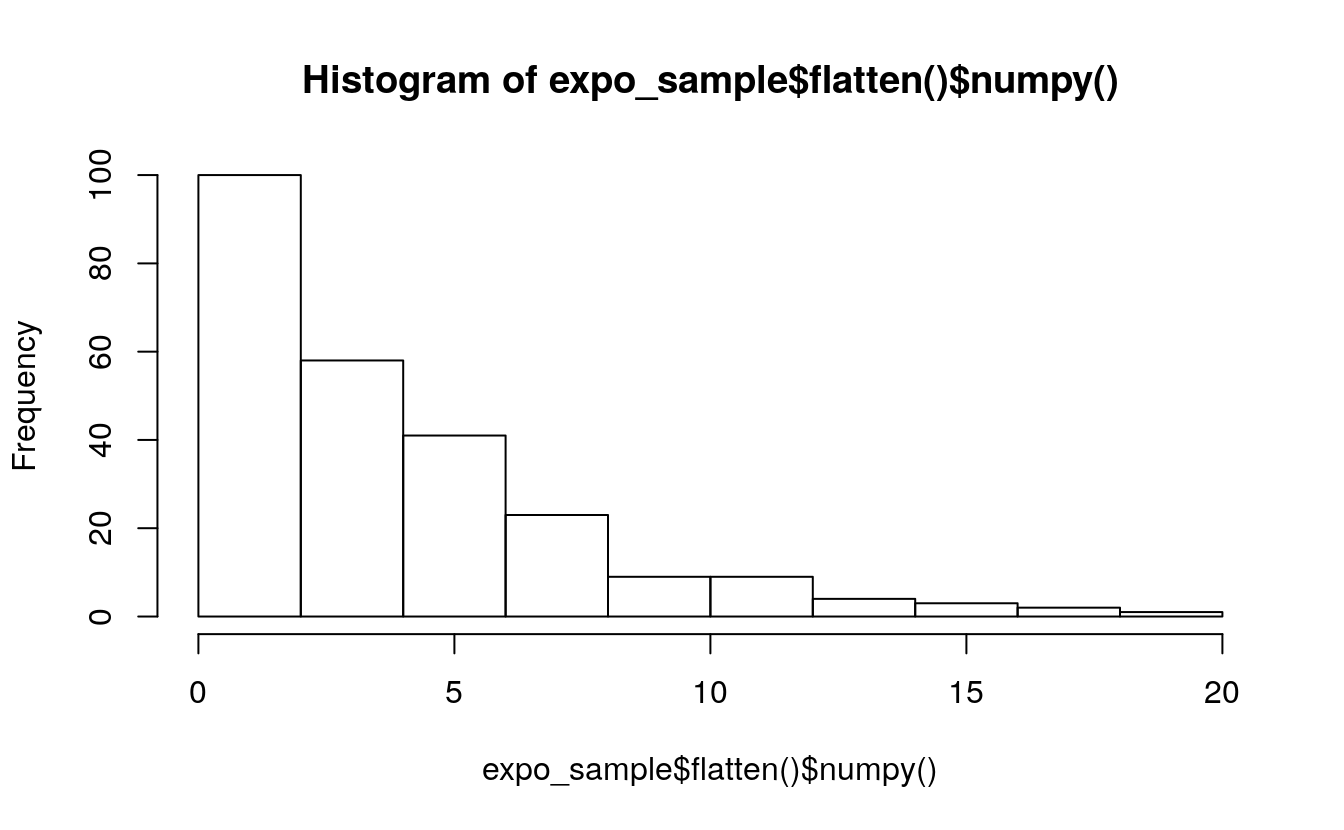

5.12.3 Exponential distribution

Exponential <- torch$distributions$exponential$Exponential

m = Exponential(torch$tensor(list(1.0)))

m

#> Exponential(rate: tensor([1.]))

m$sample() # Exponential distributed with rate=1

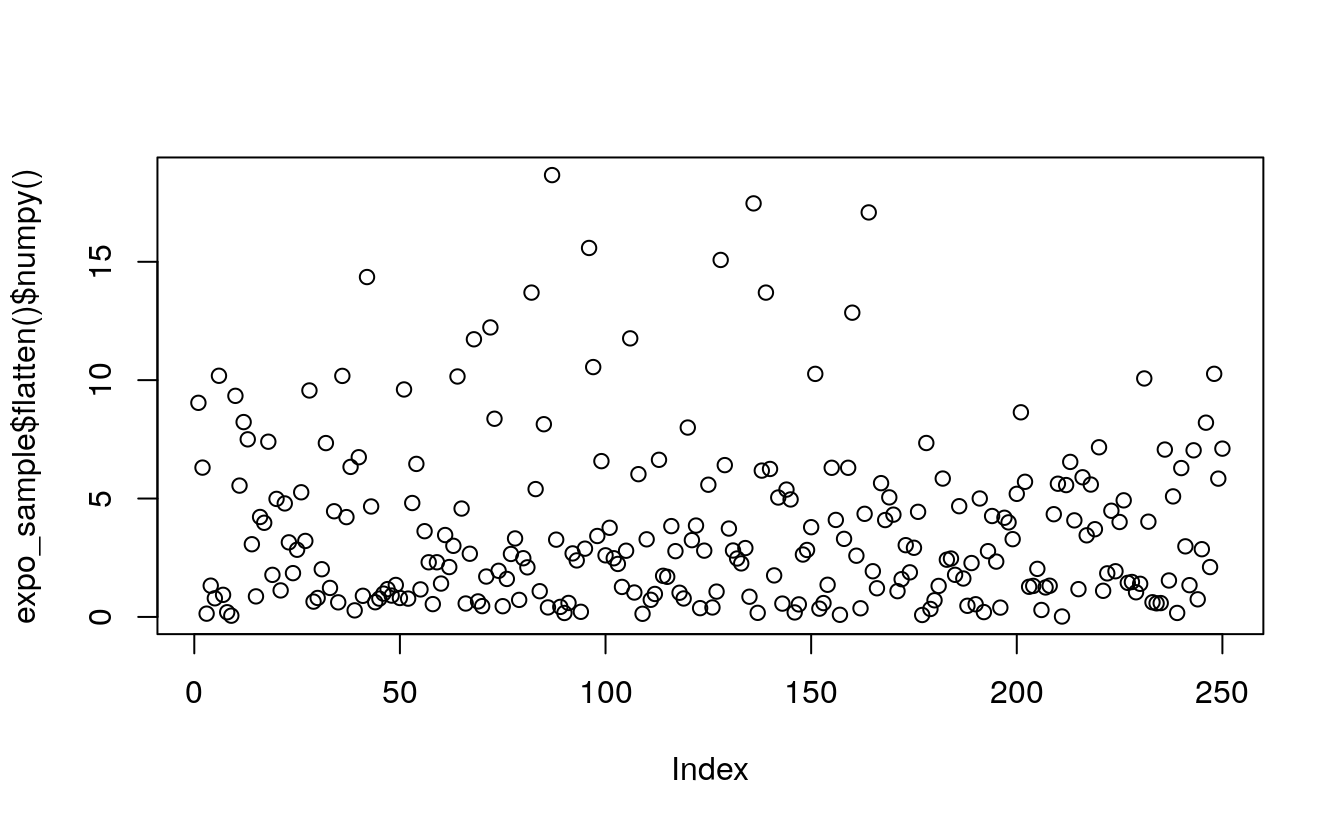

#> tensor([0.4171])expo <- Exponential(rate=0.25)

expo_sample <- expo$sample_n(250L) # generate 250 samples

print(expo_sample$shape)

#> torch.Size([250])

plot(expo_sample$flatten()$numpy())

hist(expo_sample$flatten()$numpy())

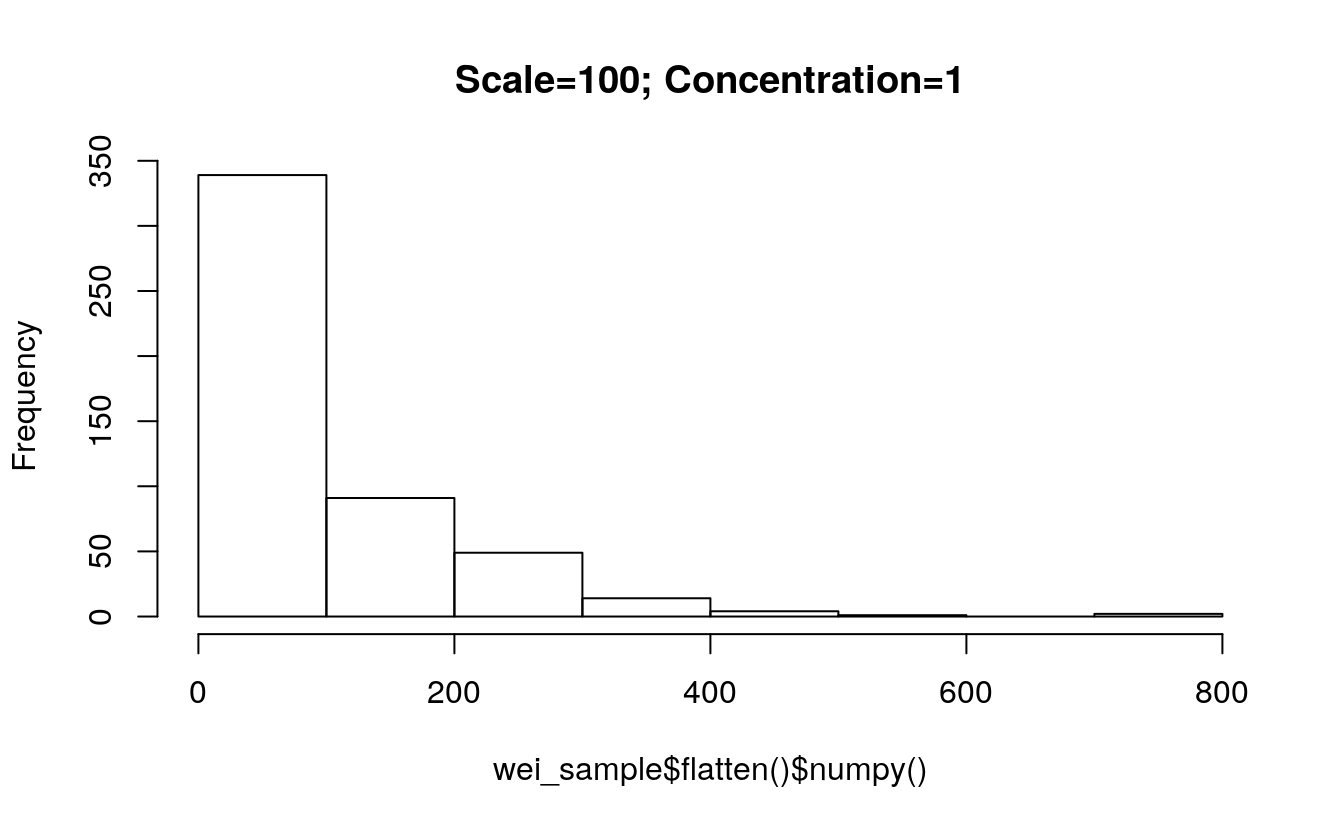

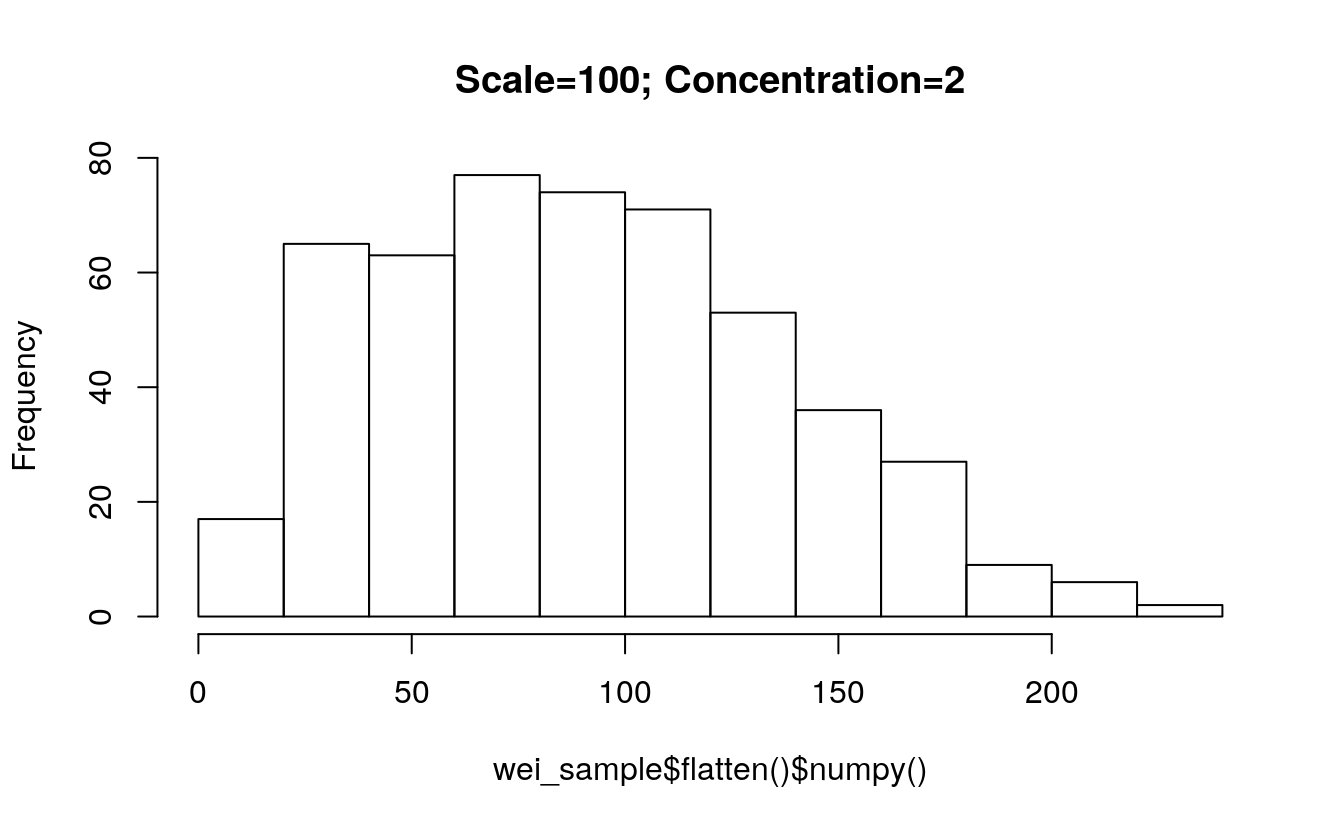

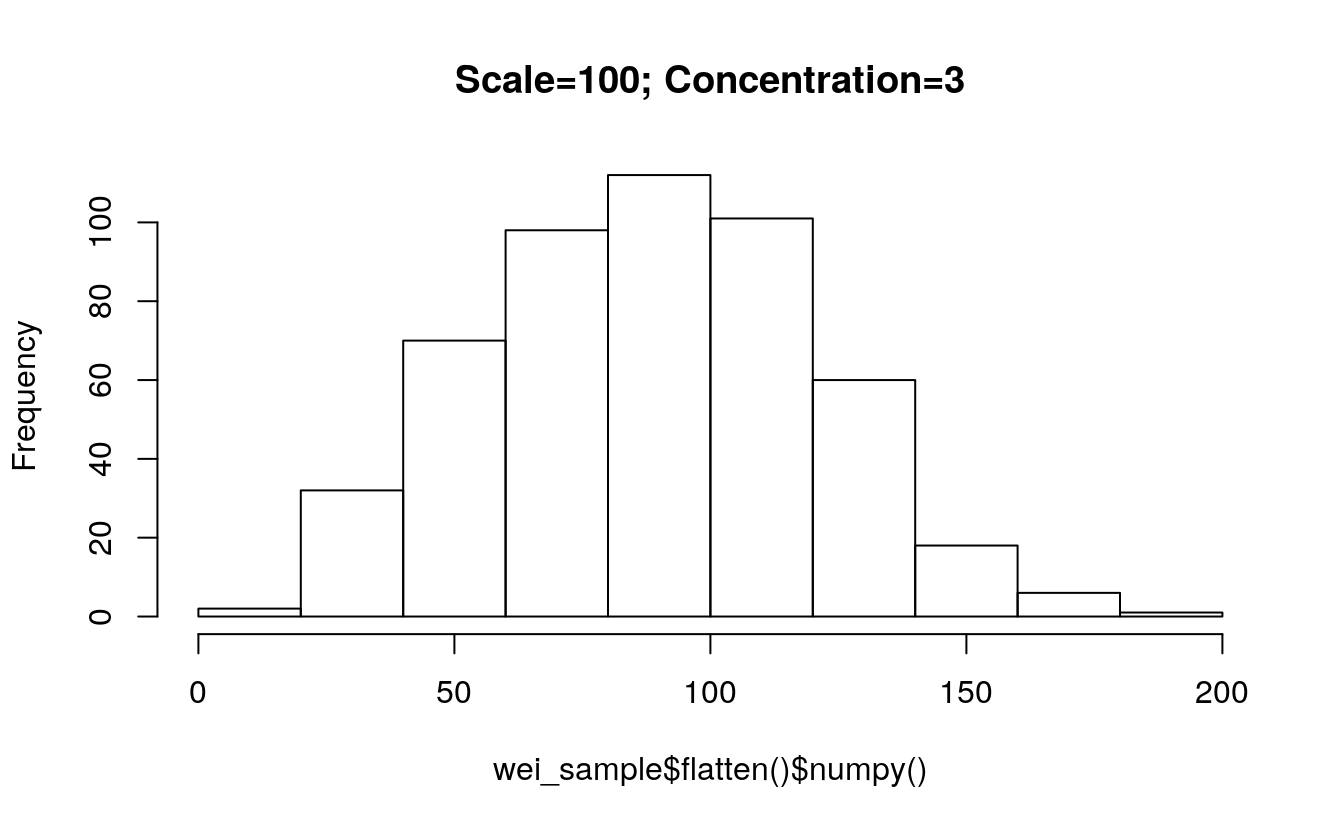

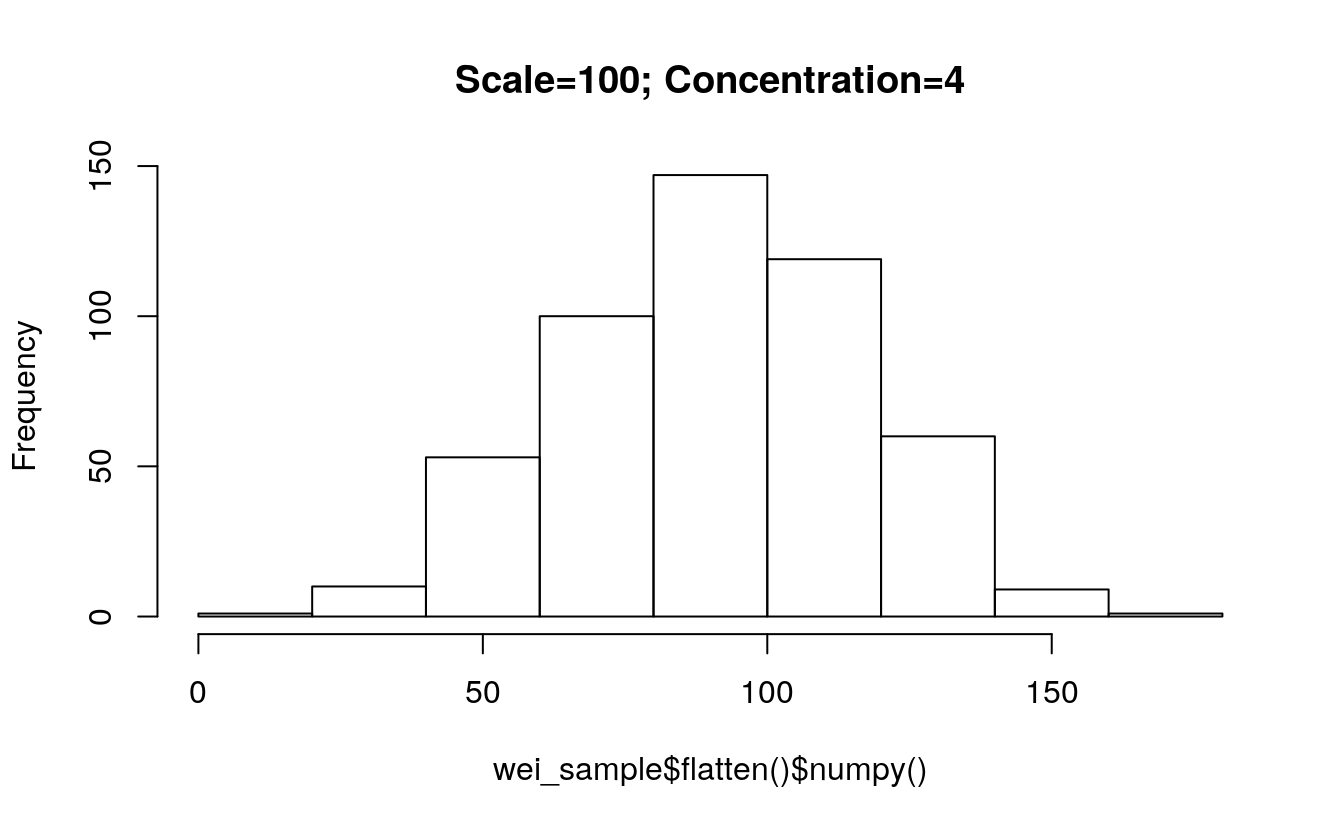

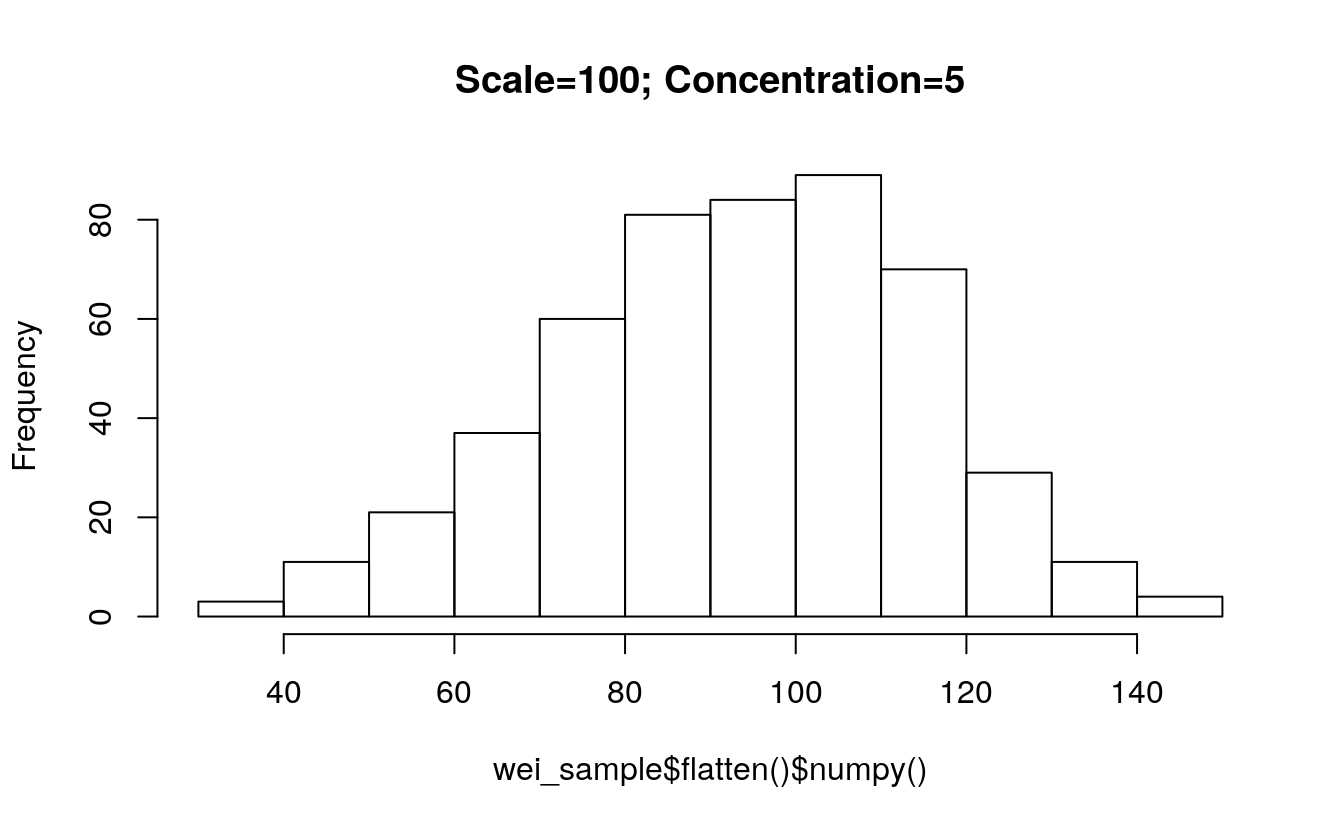

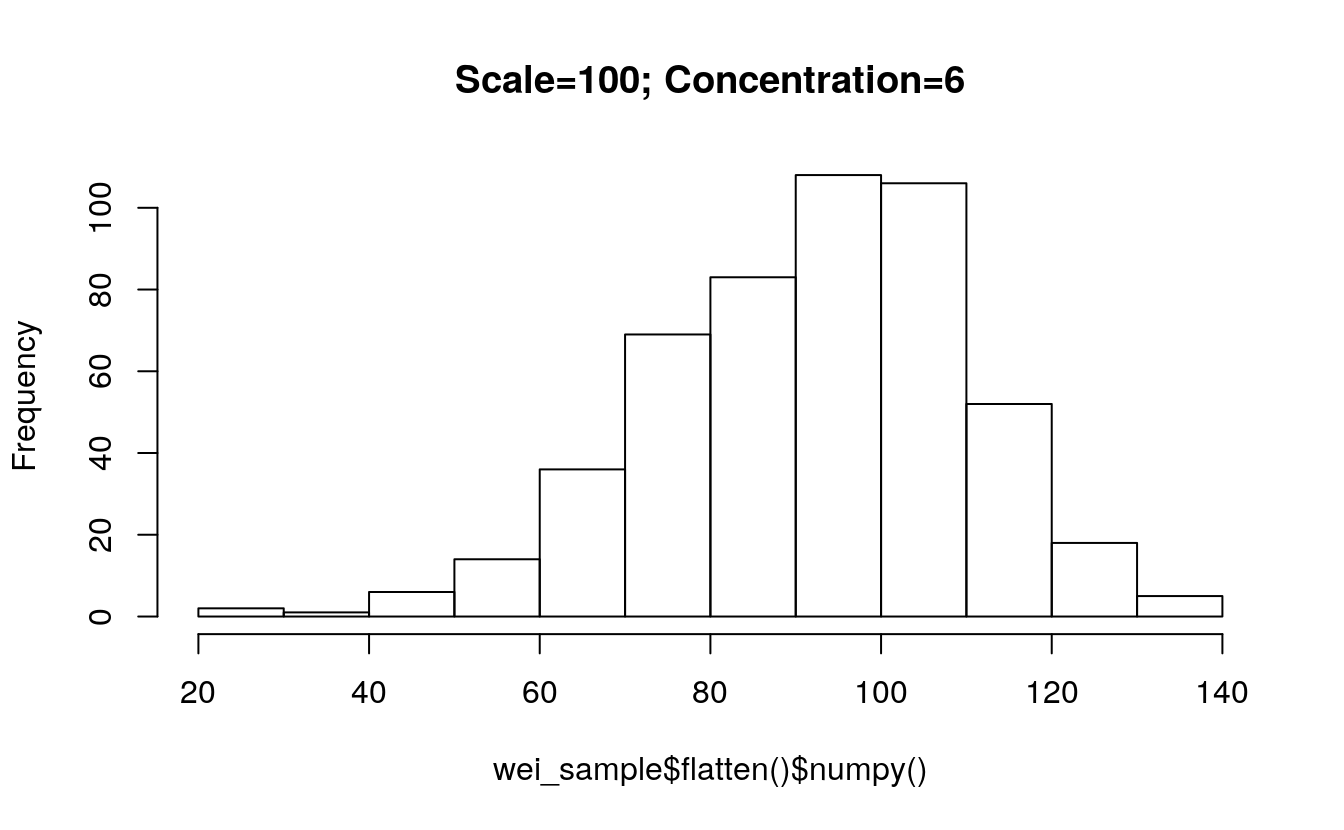

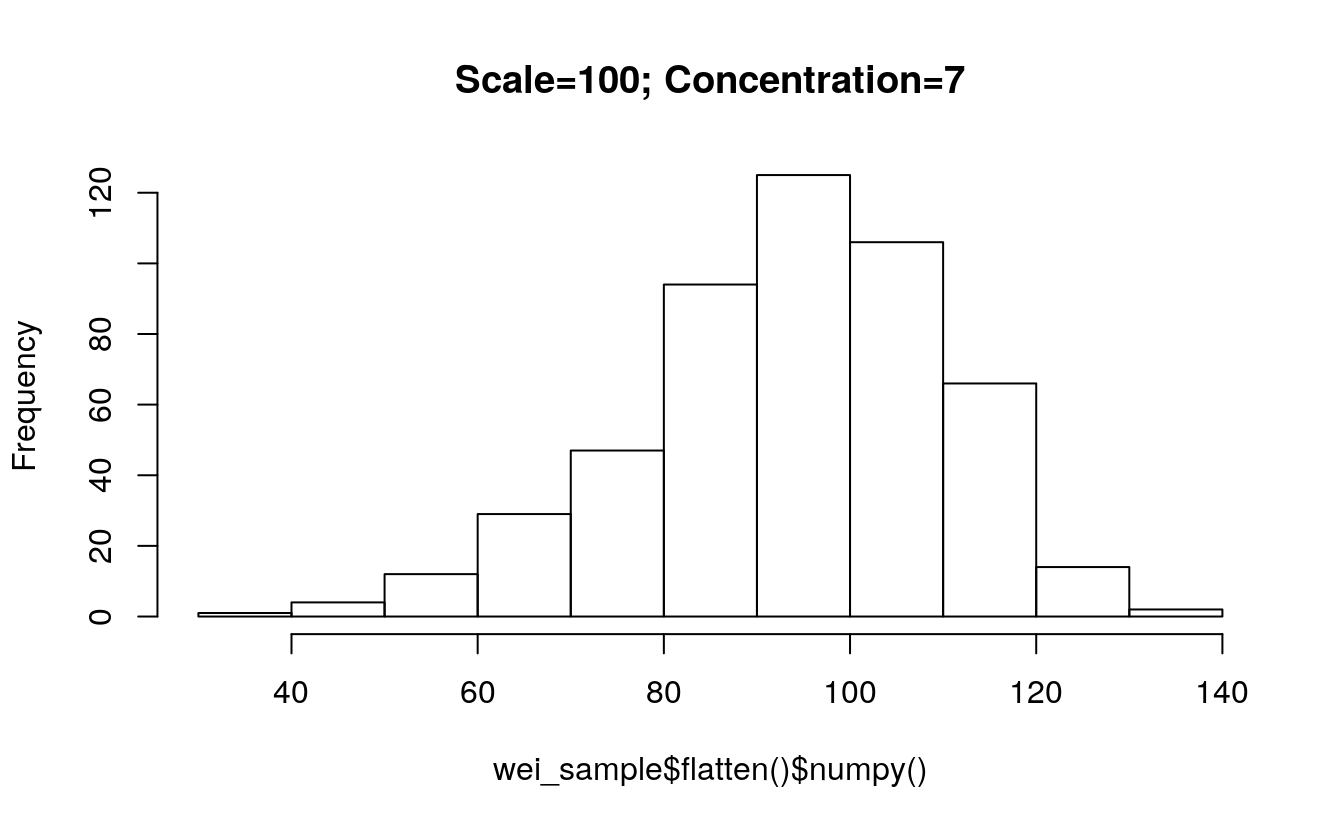

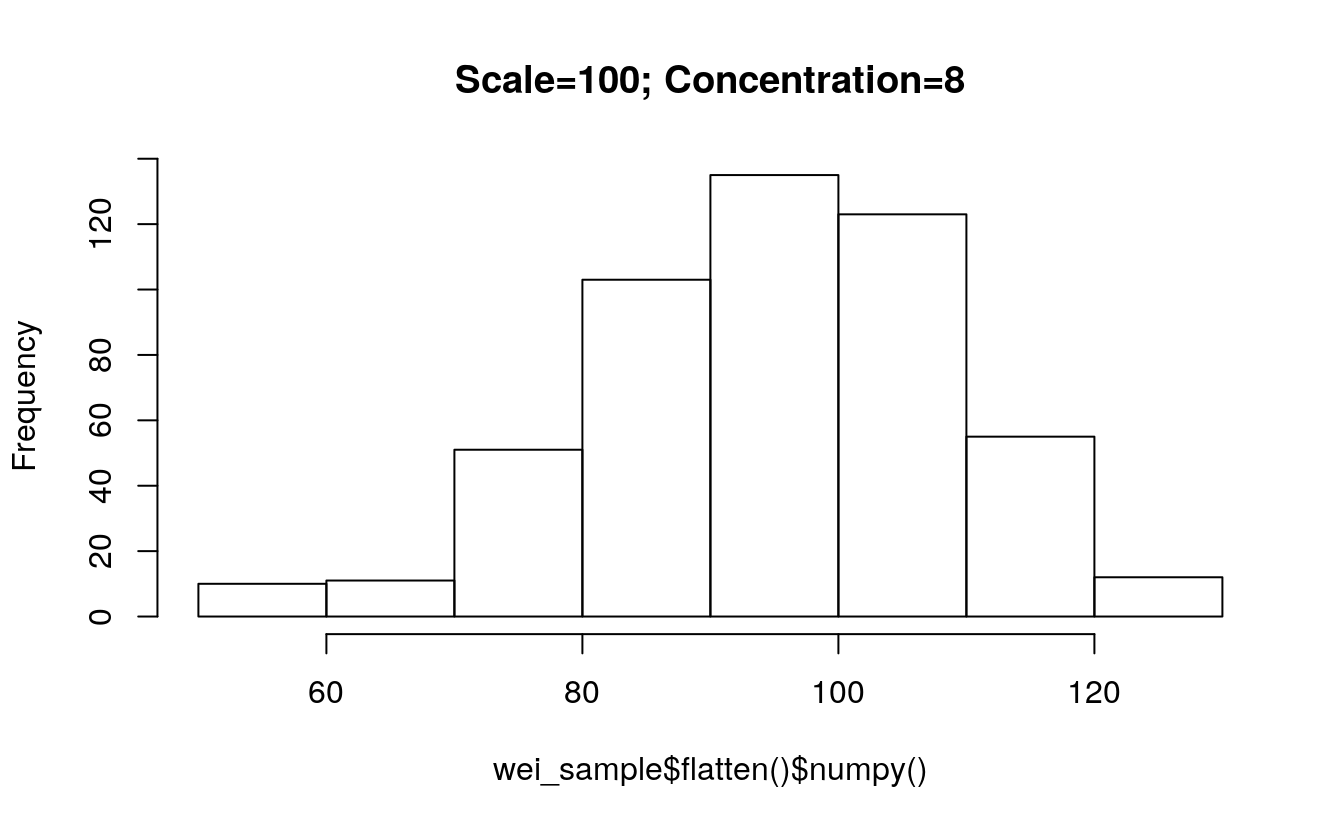

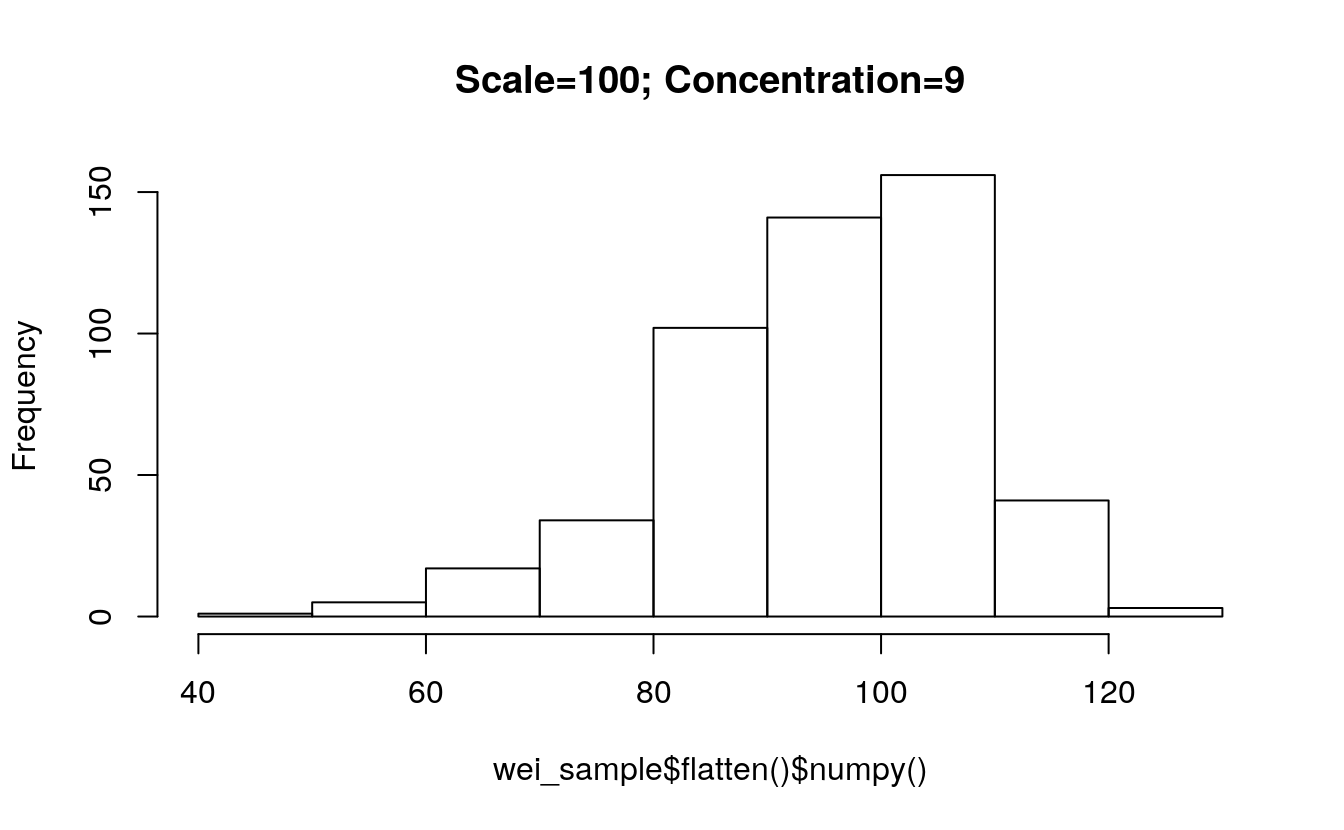

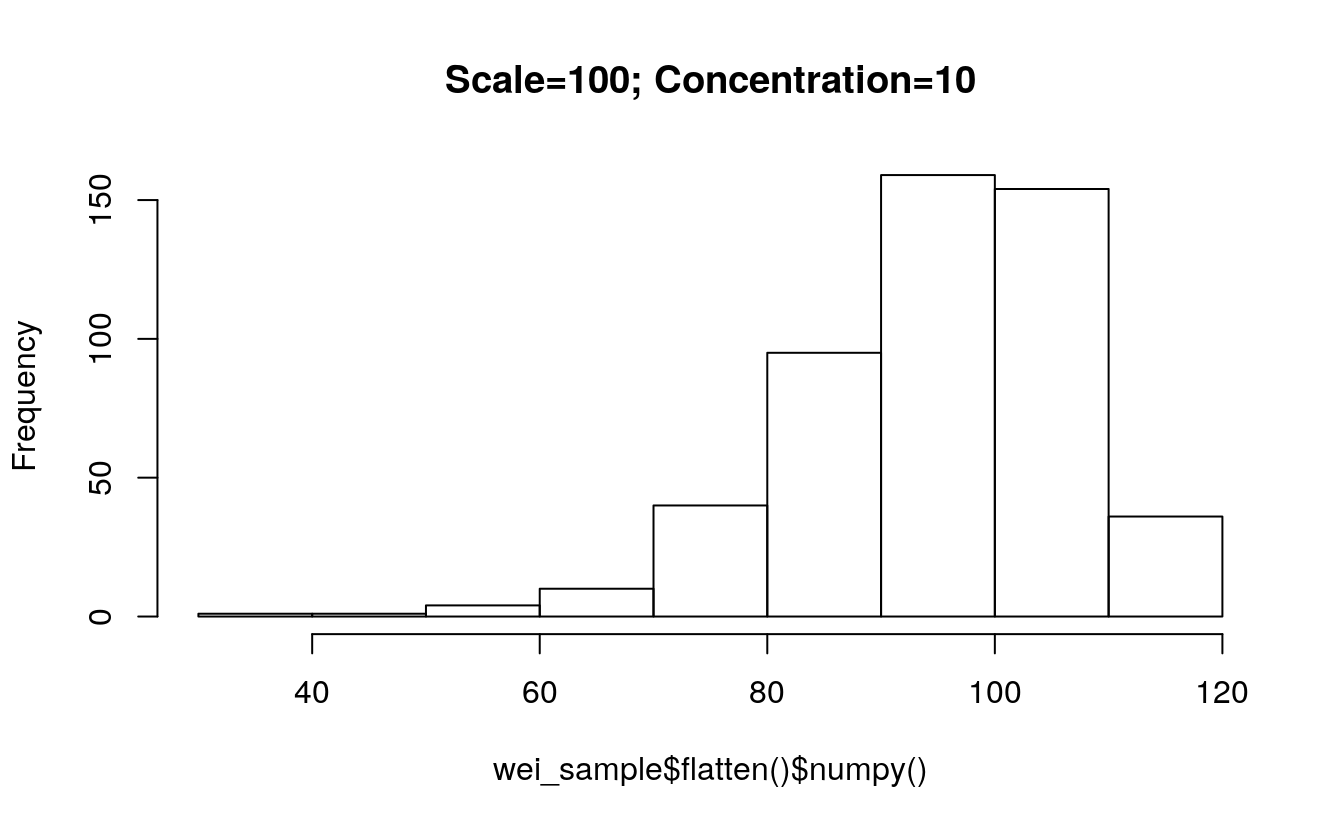

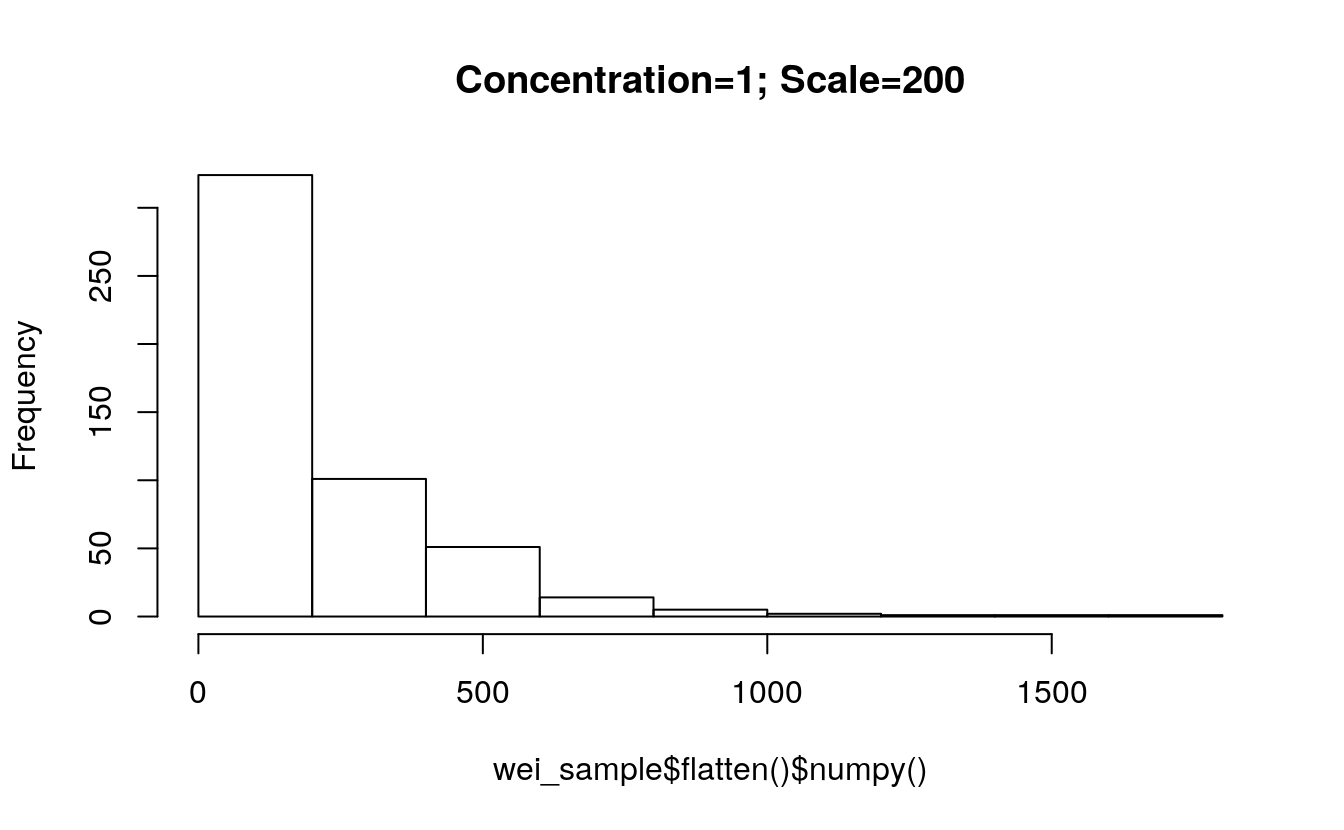

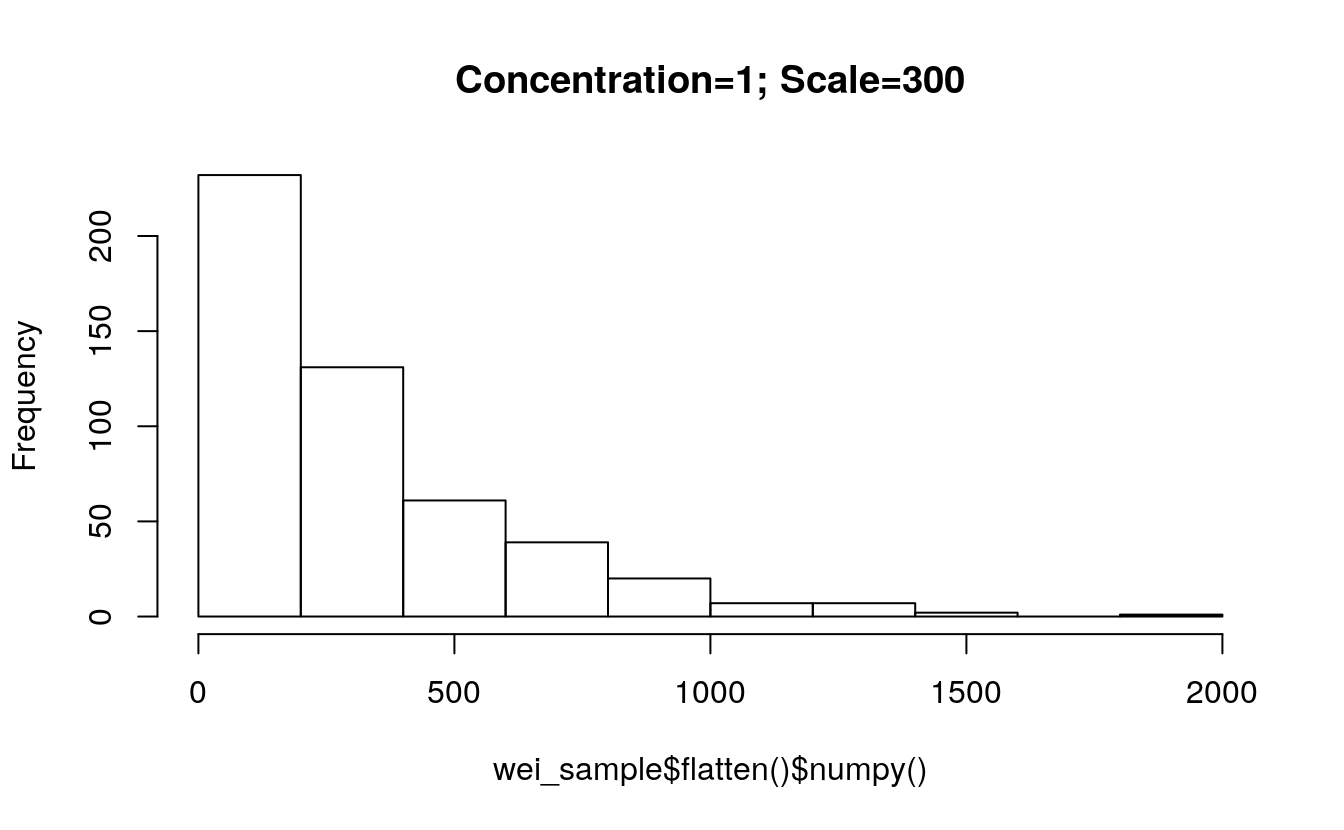

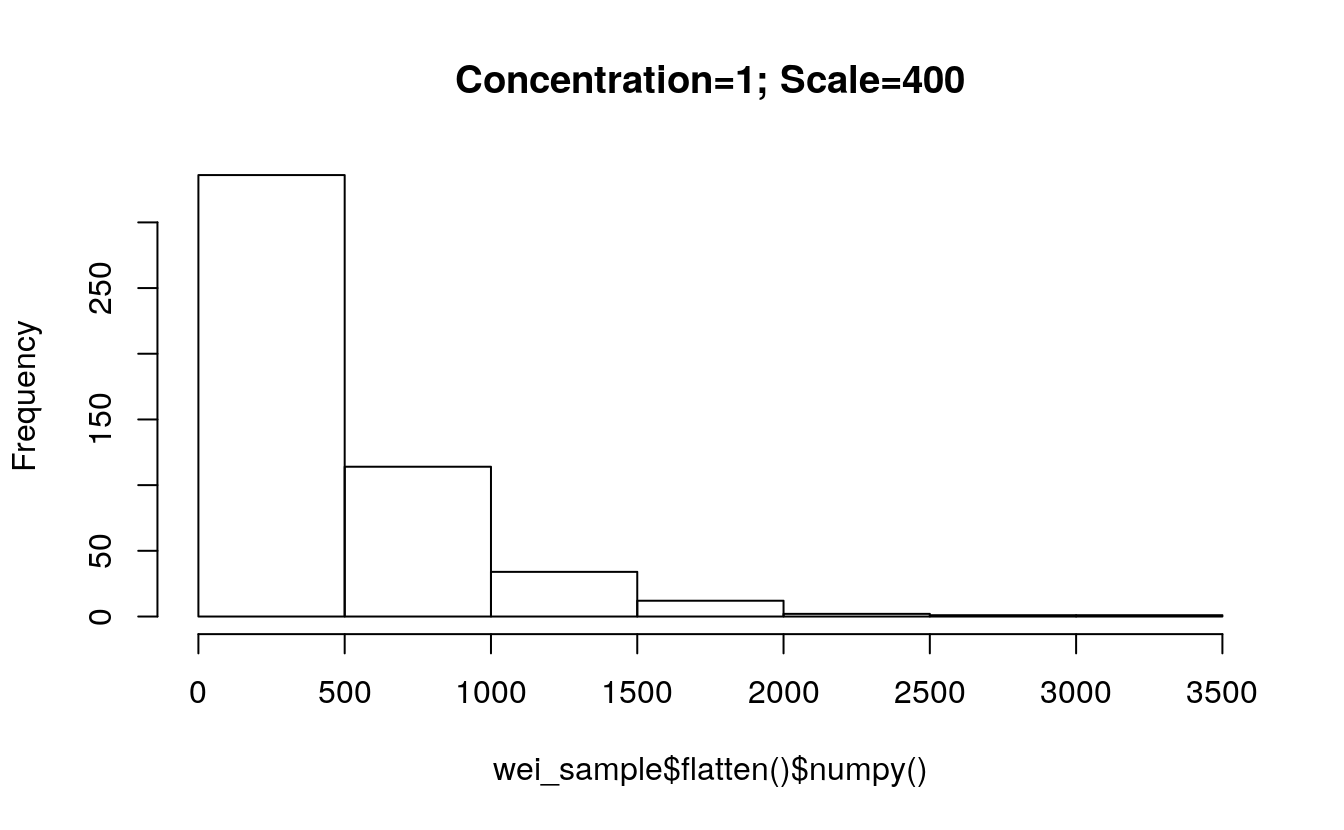

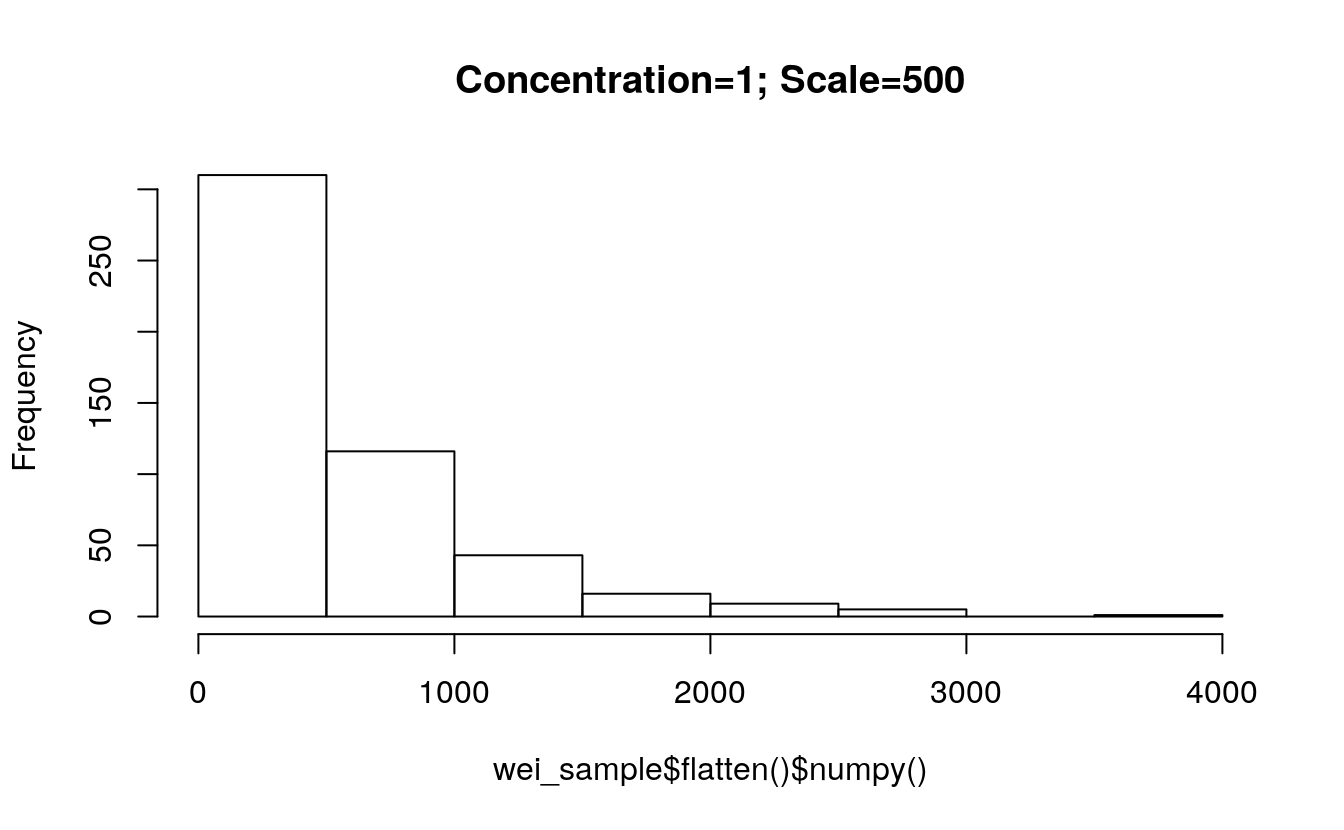

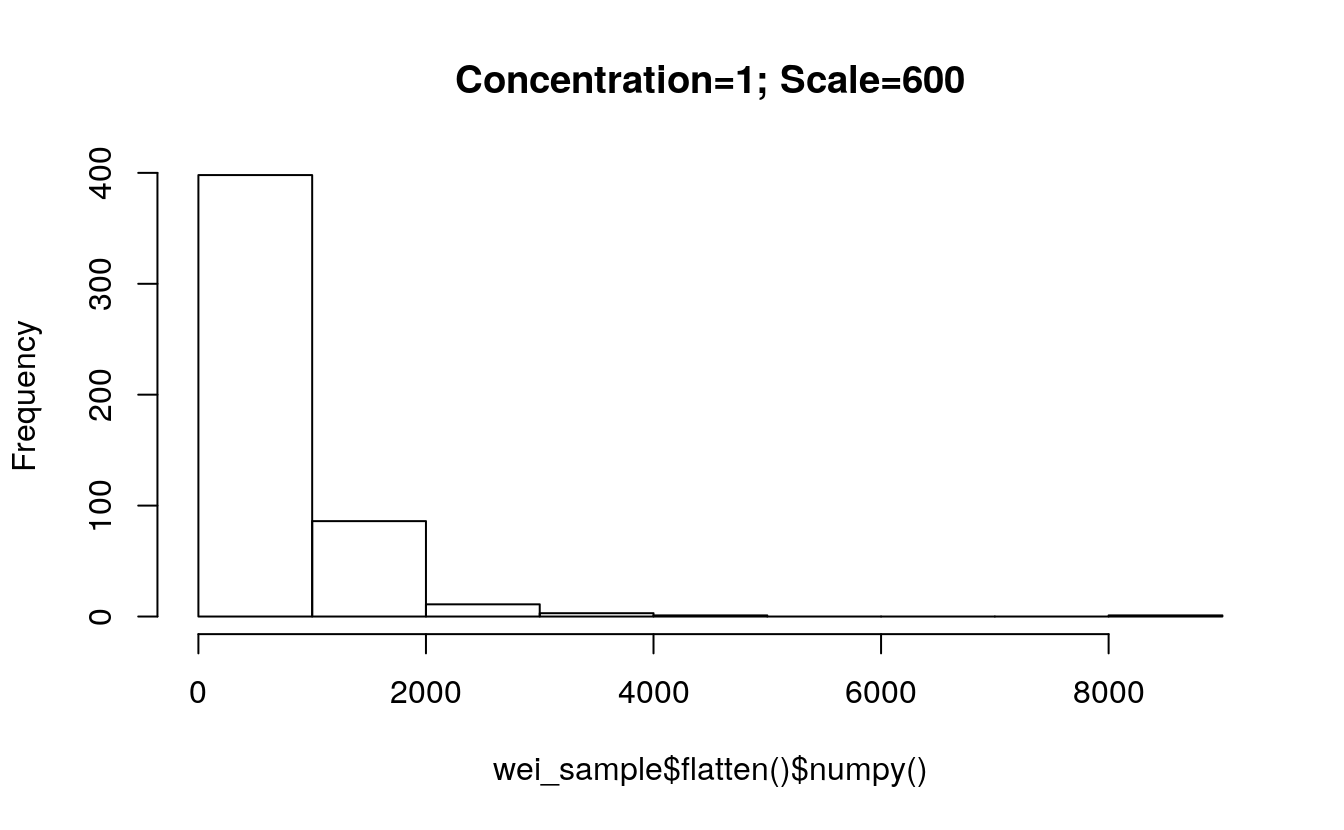

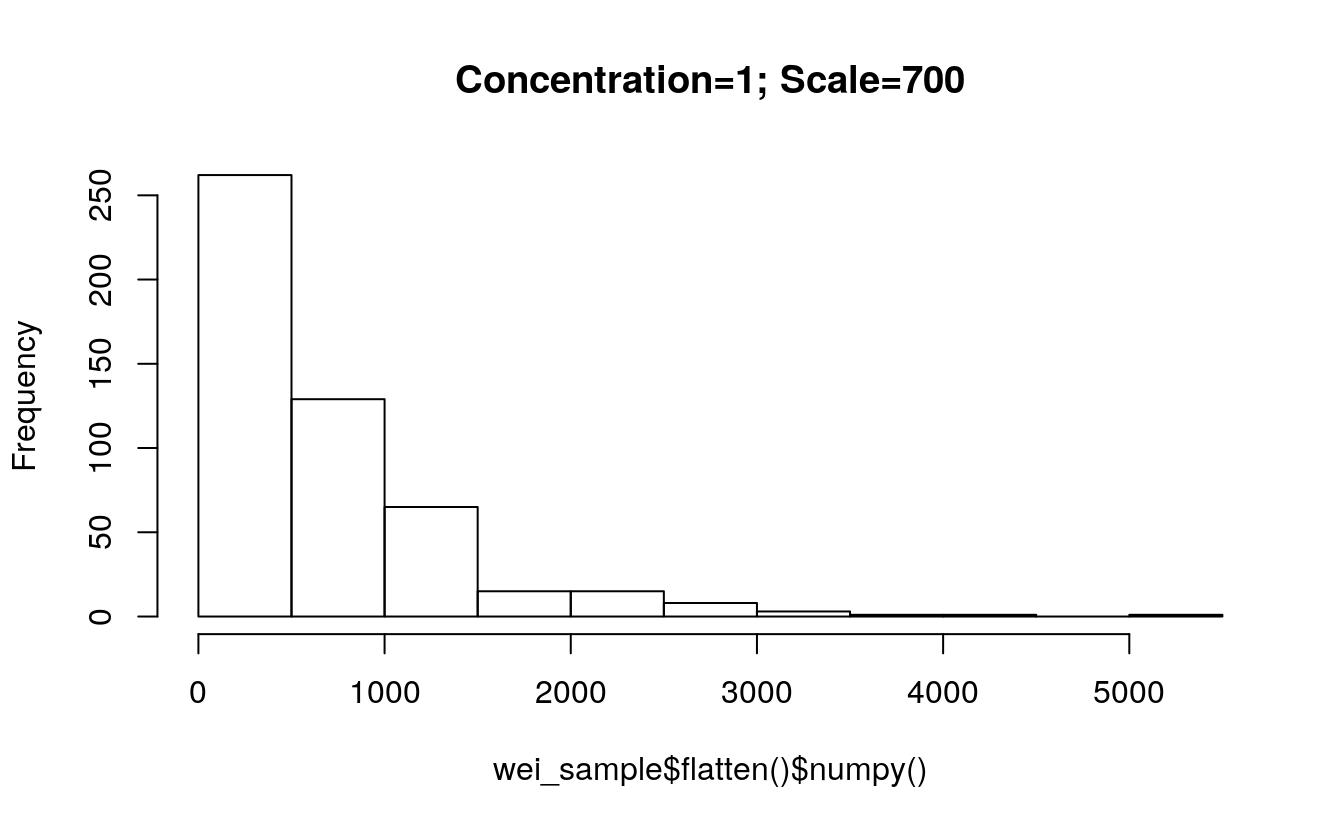

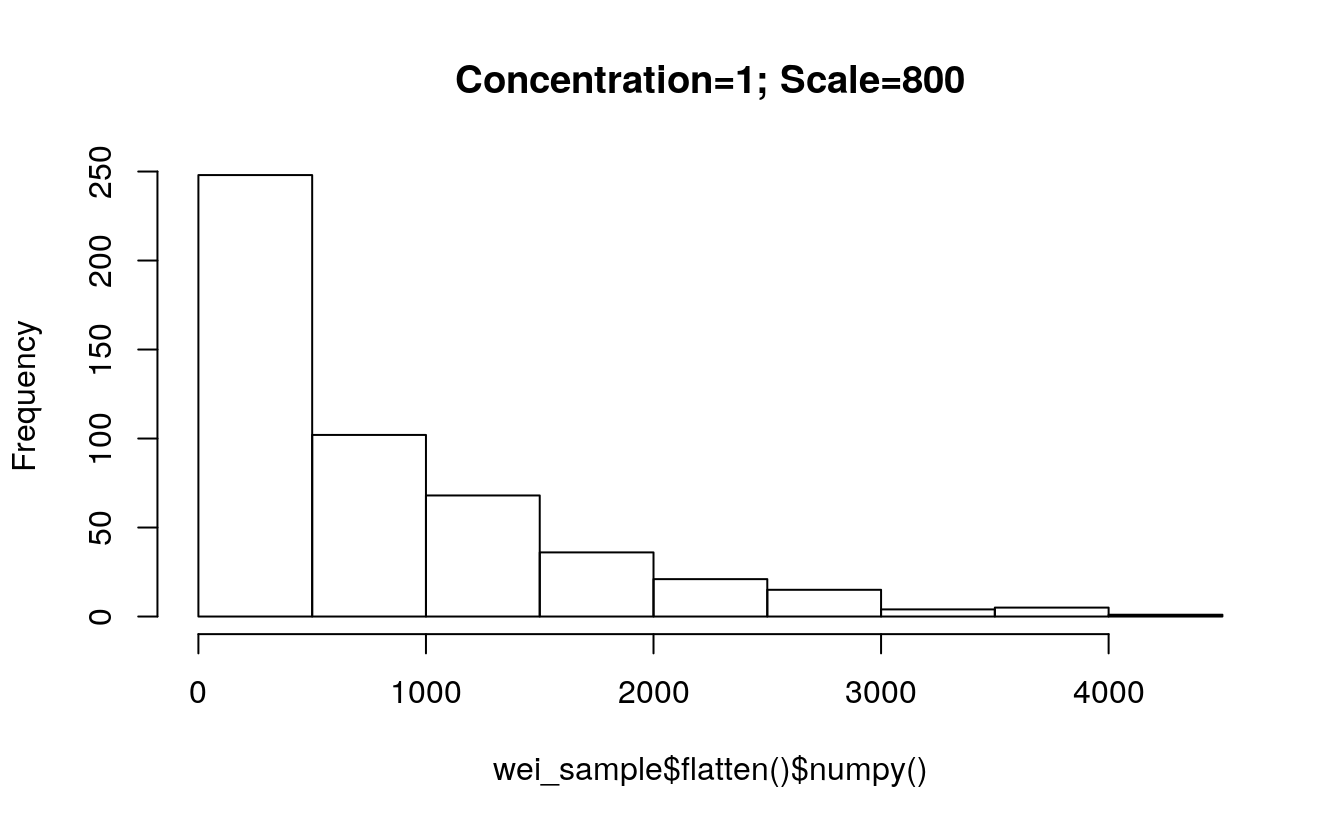

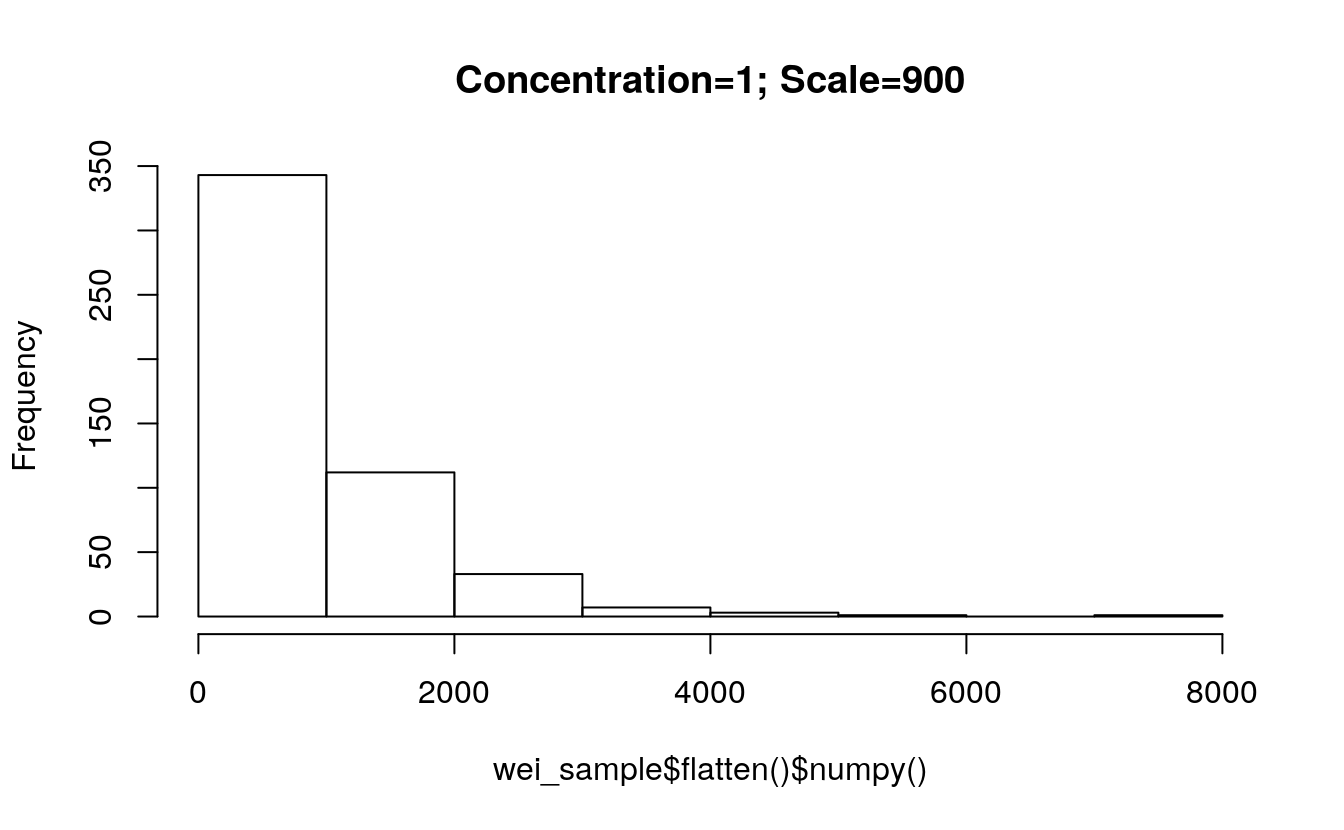

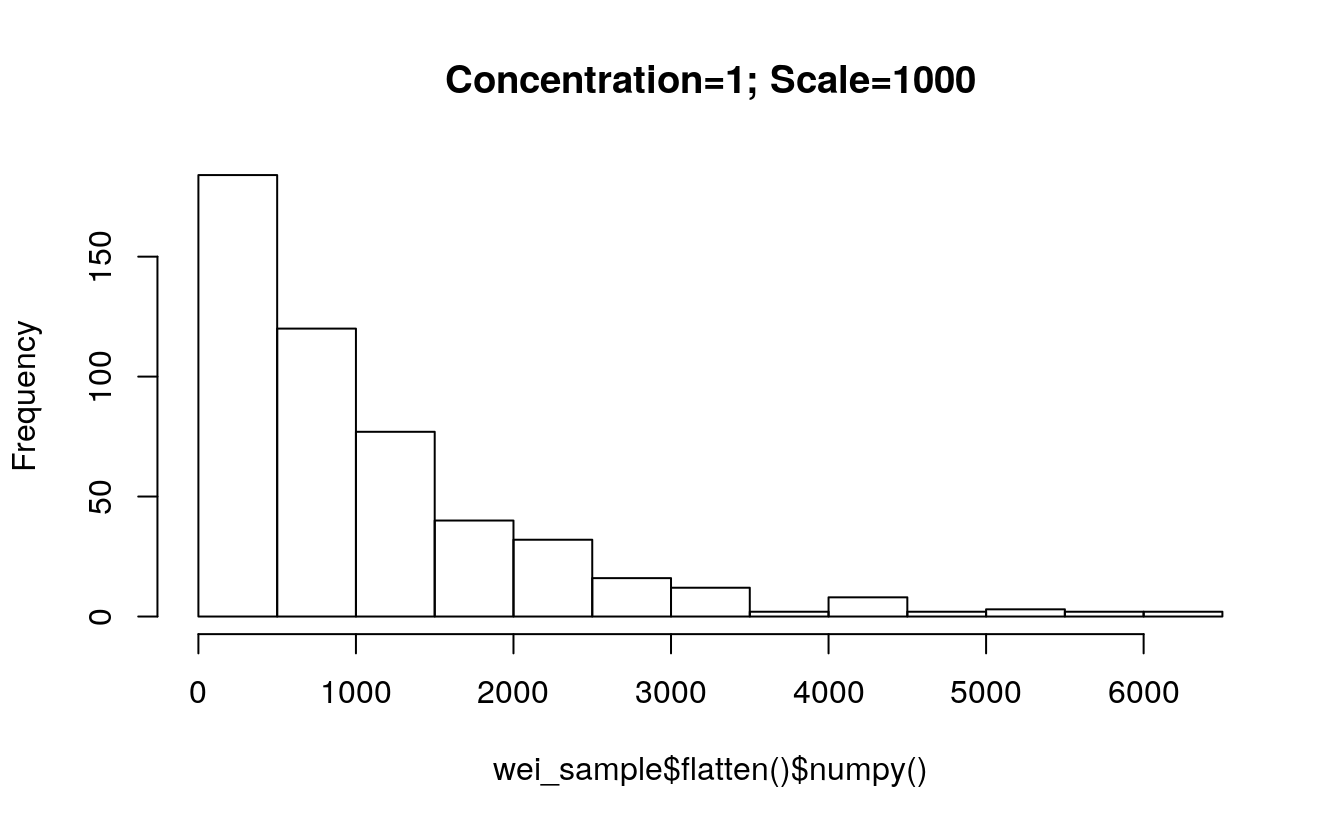

5.12.4 Weibull distribution

Weibull <- torch$distributions$weibull$Weibull

m = Weibull(torch$tensor(list(1.0)), torch$tensor(list(1.0)))

m$sample() # sample from a Weibull distribution with scale=1, concentration=1

#> tensor([1.7026])